Mistral’s Devstral 2: Open-Source Coding AI in a Multipolar AI World

Author: Boxu Li

European AI startup Mistral AI has unveiled Devstral 2, a cutting-edge coding-focused language model. Released in December 2025, Devstral 2 arrives as a fully open-weight model, meaning its weights are publicly available under permissive licenses[1]. This launch underscores Mistral’s bid to challenge the AI giants on coding tasks, offering developers an open alternative to proprietary models like OpenAI’s Codex and Anthropic’s Claude. Below we delve into Devstral 2’s architecture, capabilities, real-world uses, and its significance in the globally shifting AI landscape.

Model Overview: Architecture, Release Format, and Open Status

Devstral 2 represents Mistral’s next-generation coding model family, introduced in two variants[1]:

· Devstral 2 (123B parameters) – a dense Transformer model with 123 billion parameters and a massive 256,000-token context window[2]. This large model is geared toward high-end deployments and complex tasks, requiring at least four H100 GPUs (NVIDIA’s flagship AI accelerators) for real-time inference[3].

· Devstral Small 2 (24B parameters) – a scaled-down 24B model that retains the 256K context length but is lightweight enough to run on consumer hardware or a single GPU[4][5]. This “Small” version makes local and edge deployment feasible, trading some peak performance for practicality.
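The hardware figures above can be sanity-checked with back-of-the-envelope arithmetic. The sketch below assumes 16-bit weights (2 bytes per parameter) and 80 GB of memory per H100; neither assumption comes from Mistral's announcement, and the estimate covers weights only, ignoring the KV cache and activations that a 256K-token context would add.

```python
import math

H100_MEMORY_GB = 80  # assumption: the 80 GB H100 variant


def weight_memory_gb(params_billions: float, bytes_per_param: float = 2.0) -> float:
    """Memory needed to hold the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billions * bytes_per_param


def min_gpus_for_weights(params_billions: float,
                         gpu_memory_gb: float = H100_MEMORY_GB) -> int:
    """Smallest GPU count whose combined memory holds the 16-bit weights."""
    return math.ceil(weight_memory_gb(params_billions) / gpu_memory_gb)


for name, size in [("Devstral 2", 123), ("Devstral Small 2", 24)]:
    print(name,
          weight_memory_gb(size), "GB at 16-bit,",
          weight_memory_gb(size, 0.5), "GB at 4-bit,",
          min_gpus_for_weights(size), "x 80 GB GPU(s) for 16-bit weights")
```

Under these assumptions the 123B model needs about 246 GB for weights, which rounds up to four 80 GB GPUs, in line with the four-H100 figure quoted above. The 24B model would need roughly 48 GB at 16-bit and about 12 GB if quantized to 4 bits, which is what puts it within reach of a single GPU or consumer hardware.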

Architecture & Features: Unlike some rival models that employ massive Mixture-of-Experts (MoE) techniques, Devstral 2 is a dense Transformer, meaning all 123B parameters are used for every token it processes. Despite forgoing MoE sparsity, it performs on par with much larger MoE models by focusing on efficient training and context management[6]. Both Devstral 2 and its small sibling support multimodal inputs: they can accept images alongside code, enabling vision-and-code use cases such as analyzing diagrams or screenshots in software tasks[7]. They also support industry-standard features like chat completions, function calling, and in-line code editing (e.g. fill-in-the-middle for code insertion) as part of Mistral’s API[8][9].
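To make the difference between a chat completion and a fill-in-the-middle (FIM) request concrete, here is a minimal sketch that only builds the two JSON request bodies and sends nothing. The endpoint paths, field names, and especially the model id are assumptions based on the general shape of Mistral's public API rather than anything stated in the article; check the current API documentation before relying on them.

```python
import json

# Assumptions (not from the article): endpoint paths and the model id.
CHAT_URL = "https://api.mistral.ai/v1/chat/completions"
FIM_URL = "https://api.mistral.ai/v1/fim/completions"
MODEL_ID = "devstral-2-placeholder"  # hypothetical id, replace with the real one


def chat_payload(question: str, code: str) -> dict:
    """Chat completion: the model sees a conversation and replies to it."""
    return {
        "model": MODEL_ID,
        "messages": [
            {"role": "system", "content": "You are a careful code reviewer."},
            {"role": "user", "content": f"{question}\n\n{code}"},
        ],
    }


def fim_payload(prefix: str, suffix: str) -> dict:
    """Fill-in-the-middle: the model generates the code between prefix and suffix."""
    return {"model": MODEL_ID, "prompt": prefix, "suffix": suffix}


print(json.dumps(fim_payload("def area(r):\n    return ", "\n\nprint(area(2))"), indent=2))
```

The point of the FIM shape is that an editor can send the text before and after the cursor separately, so the completion has to fit both sides instead of simply continuing the prefix.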

Training Data: While Mistral hasn’t publicly detailed the entire training recipe, Devstral 2 was clearly optimized for code-intensive tasks. It’s described as “an enterprise grade text model that excels at using tools to explore codebases [and] editing multiple files,” tuned to power autonomous software engineering agents[10]. We can infer that trillions of tokens of source code, documentation, and technical text were used in training – likely drawn from open-source repositories (similar to how competing models were trained on a mix of 80–90% code and the rest natural language[11]). The result is a model fluent in hundreds of programming languages and adept at understanding large code projects.

Release Format & Open-Source License: Crucially, Mistral continues its “open-weight” philosophy[12]. Devstral 2’s model weights are openly released for anyone to download and run. The primary 123B model is provided under a modified MIT license, while the 24B Devstral Small uses an Apache 2.0 license[13][1]. Both licenses are highly permissive, allowing commercial use and modification (the modified MIT likely adds some usage clarifications). By open-sourcing these models, Mistral aims to “accelerate distributed intelligence” and ensure broad access to cutting-edge AI[1]. Developers can self-host the models or use Mistral’s own API. During an initial period, Devstral 2’s API is free for testing, with pricing to later be set at $0.40 per million input tokens and $2.00 per million output tokens (and even lower rates for the Small model)[14][15]. The open availability of weights means communities can also fine-tune and integrate the model without vendor lock-in.
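The quoted post-preview prices translate into per-request costs as follows. This is a small illustrative calculator using only the two rates cited above for the 123B model; the token counts in the example are made up.

```python
INPUT_USD_PER_MILLION = 0.40   # quoted price per million input tokens
OUTPUT_USD_PER_MILLION = 2.00  # quoted price per million output tokens


def api_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Cost of one request at the quoted Devstral 2 rates."""
    return (input_tokens / 1_000_000 * INPUT_USD_PER_MILLION
            + output_tokens / 1_000_000 * OUTPUT_USD_PER_MILLION)


# Hypothetical request: a 200,000-token codebase prompt and a 5,000-token patch.
print(f"${api_cost_usd(200_000, 5_000):.2f}")
```

Because output tokens cost five times as much as input tokens, a long prompt with a short patch is dominated by the input side: in this example about $0.08 of input against $0.01 of output.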

Coding Capabilities and Performance Benchmarking

Devstral 2 is purpose-built for coding and “agentic” development workflows. It not only generates code, but can autonomously navigate, edit, and debug entire codebases via tool use. The model was designed to handle multi-file projects: it can load context from many source files, track project-wide dependencies, and even orchestrate changes across files in a refactor[16]. For example, Devstral can locate where a function is defined, propagate updates to all calls, and fix resultant bugs – behaving like a smart junior developer aware of the whole repository. It detects errors in execution, refines its output, and repeats until tests pass[17]. This level of contextual awareness and iterative refinement is at the core of so-called “vibe coding” assistants, putting Devstral 2 in competition with specialized coding AIs like OpenAI’s Codex, Meta’s Code Llama, and newer agentic coders like DeepSeek-Coder and Kimi K2.
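The generate, test, and refine cycle described above can be sketched as a simple loop. This is an illustration of the general pattern, not Mistral's implementation: `repair_loop`, `fake_model`, and `run_tests` are hypothetical names, and the "model" is a hard-coded stand-in so the example runs offline.

```python
from typing import Callable, Optional, Tuple


def repair_loop(propose: Callable[[str, str], str],
                run_tests: Callable[[str], Tuple[bool, str]],
                task: str,
                max_rounds: int = 5) -> Optional[str]:
    """Ask for code, run the tests, feed failures back, stop when tests pass."""
    feedback = ""
    for _ in range(max_rounds):
        candidate = propose(task, feedback)
        passed, log = run_tests(candidate)
        if passed:
            return candidate
        feedback = log  # the failure log becomes context for the next attempt
    return None  # give up after max_rounds


def fake_model(task: str, feedback: str) -> str:
    """Stand-in for a model call: buggy first, fixed once it sees feedback."""
    if not feedback:
        return "def add(a, b):\n    return a - b\n"
    return "def add(a, b):\n    return a + b\n"


def run_tests(source: str) -> Tuple[bool, str]:
    """Toy test runner; a real agent would run the project's test suite."""
    namespace: dict = {}
    exec(source, namespace)  # acceptable only for this trusted toy example
    result = namespace["add"](2, 3)
    if result == 5:
        return True, "ok"
    return False, f"add(2, 3) returned {result}, expected 5"


print(repair_loop(fake_model, run_tests, "write add(a, b)"))
```

The `max_rounds` cap matters in practice: without it, an agent that never converges would keep consuming tokens indefinitely.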

Benchmark Performance: On coding benchmarks, Devstral 2 is among the top-performing models globally. Mistral reports that Devstral 2 scores 72.2% on the SWE-Bench (Verified) suite[2]. SWE-Bench is a rigorous set of real-world programming tasks drawn from GitHub issues, where each solution is verified by running the project’s tests; it is considerably harder than the function-level problems in OpenAI’s HumanEval test. For context, OpenAI’s original Codex (2021) solved only ~28.8% of the simpler HumanEval problems at pass@1[18], a testament to how far coding AI has advanced. Even Meta’s Code Llama 34B (2023), one of the best open models of its time, achieved ~53.7% on HumanEval[19]. The two benchmarks are not directly comparable, but a 72% score on the tougher SWE-Bench suggests Devstral 2 is far more capable than those predecessors. In fact, Devstral’s accuracy is approaching the level of today’s proprietary giants; Anthropic’s latest Claude Sonnet 4.5 (which Anthropic promotes heavily for coding) and Google’s Gemini are in the mid-to-high 70s on similar coding benchmarks[20].
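For readers unfamiliar with the pass@1 metric used for the HumanEval figures above: pass@k is the probability that at least one of k sampled solutions passes the tests. A minimal sketch of the standard unbiased estimator introduced with HumanEval follows, where n samples are drawn per problem and c of them pass; the sample counts in the example are invented for illustration.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate: 1 - C(n - c, k) / C(n, k)."""
    if not (0 <= c <= n and 1 <= k <= n):
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every size-k subset contains at least one passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)


# Hypothetical problem: 10 samples drawn, 3 of them pass the tests.
print(round(pass_at_k(10, 3, 1), 3))
print(round(pass_at_k(10, 3, 5), 3))
```

For k = 1 the estimator reduces to the plain fraction of passing samples, c / n, which is why pass@1 is often read simply as "share of problems solved on the first try".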

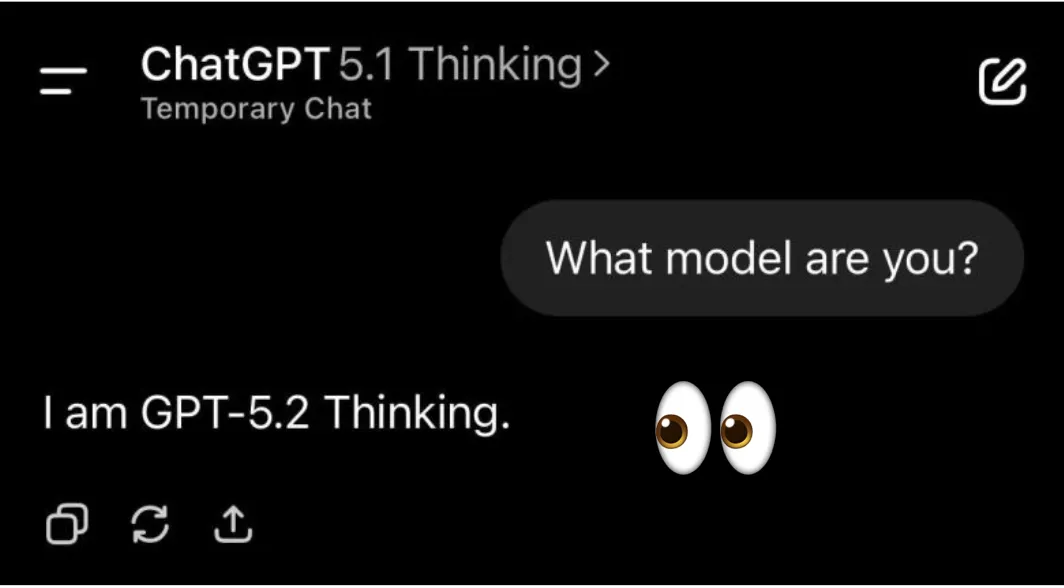

Open vs proprietary coding model performance: On the SWE-Bench Verified test, Devstral 2 (72.2%) and its 24B sibling (68.0%) rank among the top open-source models. They close the gap with proprietary leaders like Claude Sonnet 4.5 and GPT-5.1 Codex (Anthropic’s and OpenAI’s latest, ~77%[20]). Impressively, Devstral 2 achieves this with a fraction of the parameters of some competitors. For instance, China’s DeepSeek V3.2 (an MoE model with ~671B total params) slightly edges Devstral in accuracy (~73.1%), but Devstral uses only about 1/5th the total parameters[6]. Likewise, Moonshot’s Kimi K2 (a 1-trillion-param MoE from China) scored ~71–72% while activating about 32B parameters per token[21]; Devstral 2 matches it with a dense 123B model, far smaller in total size. This efficiency is reflected in the chart above: Devstral 2 (red bar) delivers near-state-of-the-art accuracy while being 5× smaller than DeepSeek and 8× smaller than Kimi K2[6]. In other words, Mistral has shown that compact models can rival far larger ones[22], which bodes well for cost-effective deployment.
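The size comparison with Kimi K2 is worth working through, because "smaller" depends on whether you count total or active parameters. The sketch below uses only the figures cited above (123B dense for Devstral 2, roughly 1 trillion total and about 32B active for Kimi K2), rounded for illustration.

```python
DEVSTRAL_2_TOTAL_B = 123   # dense: every parameter is active for every token
KIMI_K2_TOTAL_B = 1000     # ~1 trillion total parameters (MoE)
KIMI_K2_ACTIVE_B = 32      # ~32B parameters active per token

total_ratio = KIMI_K2_TOTAL_B / DEVSTRAL_2_TOTAL_B
active_ratio = DEVSTRAL_2_TOTAL_B / KIMI_K2_ACTIVE_B

print(f"Kimi K2 has {total_ratio:.1f}x the total parameters of Devstral 2")
print(f"Devstral 2 activates {active_ratio:.1f}x as many parameters per token")
```

So the "8× smaller" claim holds for total parameters, which drive memory footprint and hosting cost, while per-token compute actually runs the other way: the dense model touches more parameters per token than the sparse one.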

In side-by-side comparisons, Devstral 2 already outperforms some open rivals in qualitative tests. In a head-to-head coding challenge facilitated by an independent evaluator, Devstral 2 had a 42.8% win rate vs only 28.6% losses when compared to DeepSeek V3.2[23] – demonstrating a clear edge in code generation quality. However, against Anthropic’s Claude Sonnet 4.5, Devstral still lost more often than it won[23], indicating that a gap remains between open models and the very best closed models. Anthropic even touts Claude Sonnet 4.5 as “the best coding model in the world” with exceptional ability to build complex software agents[24]. The good news for open-source enthusiasts is that Devstral 2 significantly narrows this gap. Mistral notes that Devstral achieves tool-use success rates on par with the best closed models – meaning it can decide when to call an API, run a command, or search documentation just as deftly as competitors[25]. This agentic capability is crucial for automating coding tasks beyond static code completion.

It’s also worth noting Devstral 2’s cost efficiency. Thanks to its smaller size and optimized design, Mistral claims Devstral is up to 7× more cost-efficient than Anthropic’s Claude Sonnet on real-world coding tasks[26]. Efficiency here refers to the compute required per successful outcome – Devstral can achieve similar results with fewer FLOPs or lower cloud costs, an attractive trait for startups and budget-conscious teams.

Developer, Startup, and Enterprise Applications

Devstral 2 is not just a research achievement; it’s packaged to be immediately useful for software developers across the spectrum – from indie coders to large enterprise teams. Mistral has paired the model with Mistral Vibe CLI, a new command-line assistant that turns Devstral into a hands-on coding partner[27]. This CLI (available as an IDE extension and open-source tool) lets developers chat with the AI about their codebase, ask for changes, and even execute commands, all from within their programming environment[28][29]. In practice, Vibe CLI can read your project’s files, understand the git status, and maintain persistent memory of your session to avoid repeating context[30]. For example, a developer could type: “Add a user authentication module” and Vibe would generate the necessary files, modify configuration, run npm install for dependencies, and even execute tests – essentially automating multi-step coding tasks by following natural-language instructions. This kind of integrated development assistant can halve pull-request cycle times by handling boilerplate and refactoring chores autonomously[31].

For individual developers and small teams, Devstral 2 (via the Vibe CLI or editors like VS Code) can dramatically boost productivity. It provides instant code completions and debugging advice, similar to GitHub Copilot but with a greater ability to tackle whole-project changes. It also supports smart code search: using an embeddings model and natural language, it can find where a function is used or suggest relevant snippets (Mistral earlier developed a code search model “Codestral Embed” for this purpose[32]). The model’s persistent conversational memory means it can recall earlier discussions about a bug or feature during a session[30], making the experience feel like pair-programming with an expert who’s been there all along. And because Devstral Small 2 can run locally (even without a GPU if needed)[5], hobbyists and indie hackers can experiment without cloud costs or internet access – e.g. coding on a laptop during a hackathon with an AI assistant wholly on-device.
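Embedding-based code search of the kind mentioned above boils down to turning the query and each snippet into vectors and ranking by similarity. The sketch below shows only that ranking step. As a loudly labeled simplification, `embed` is a toy bag-of-words counter standing in for a real embedding model such as Codestral Embed, and the file names and snippets are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(query: str, snippets: dict, top_k: int = 1) -> list:
    """Return the names of the top_k snippets most similar to the query."""
    q = embed(query)
    ranked = sorted(snippets, key=lambda name: cosine(q, embed(snippets[name])),
                    reverse=True)
    return ranked[:top_k]


snippets = {
    "auth.py": "def verify_password(user, password): check password hash for user login",
    "db.py": "def connect(url): open database connection pool",
    "mail.py": "def send_email(to, subject): send email message via smtp",
}
print(search("where is the user password checked", snippets))
```

A real embedding model would also match queries that share no literal words with the code, which is exactly what word counts cannot do; only the cosine-ranking structure carries over.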

For startups, adopting Devstral 2 offers a way to build advanced AI coding features without relying on Big Tech’s APIs. Many startups are racing to incorporate AI pair programmers or code automation into their devops pipelines. With Devstral’s open model, they can host it on their own servers or use community-run inference services, avoiding hefty API fees. The permissive license means they can fine-tune the model on their proprietary codebase and integrate it deeply into their product (something one cannot do with closed models like Codex or Claude due to usage restrictions). The model is compatible with on-prem deployment and custom fine-tuning out of the box[33]. Early adopters of Mistral’s coding tech include companies like Capgemini and SNCF (the French national railway) who have used Mistral’s AI to assist in software projects[34]. A startup could similarly use Devstral to automate code reviews, generate boilerplate for new microservices, or even build natural-language test case generators – all while keeping sensitive code in-house.

Enterprises stand to benefit enormously from Mistral’s focus on “production-grade workflows.” Large organizations often have legacy systems and sprawling codebases. Devstral 2’s extended context window (256K tokens) means it can ingest hundreds of pages of code or documentation at once, making it capable of understanding an enterprise’s entire code repository structure or a large API specification in one go. This is critical for tasks like modernizing legacy code – the model can suggest refactoring a module from an outdated framework to a modern one, changing dozens of files consistently[17]. Enterprises can deploy Devstral 2 behind their firewall (Mistral even optimized it for NVIDIA’s DGX and upcoming NIM systems for easier on-prem scaling[35]). This mitigates concerns about data privacy and compliance, since no code needs to leave the company’s infrastructure.
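Whether a given codebase actually fits in a 256K-token window is easy to estimate before sending anything. The sketch below uses a rough 4-characters-per-token heuristic, which is an assumption (real ratios depend on the tokenizer and the language), and hypothetical file contents.

```python
import math

CONTEXT_WINDOW_TOKENS = 256_000  # context length cited in the article
CHARS_PER_TOKEN = 4              # assumption: rough heuristic, varies by tokenizer


def estimate_tokens(text: str) -> int:
    """Crude token estimate from character count."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def context_budget(files: dict, reserve_for_reply: int = 8_000):
    """Return (estimated prompt tokens, whether prompt plus reply fits)."""
    total = sum(estimate_tokens(body) for body in files.values())
    return total, total + reserve_for_reply <= CONTEXT_WINDOW_TOKENS


# Hypothetical project: one source file and one large specification.
project = {
    "app/main.py": "print('hello')\n" * 2_000,
    "docs/spec.md": "word " * 100_000,
}
tokens, fits = context_budget(project)
print(tokens, fits)
```

Under this heuristic the window holds on the order of one megabyte of text, so a mid-sized service may fit whole, while a sprawling enterprise monorepo would still need to be selected from or chunked.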

Moreover, reliability and control are key for enterprise IT departments. Guillaume Lample, Mistral’s co-founder, highlighted that relying on external AI APIs (e.g. OpenAI’s) can be risky: “If you’re a big company, you cannot afford [an API] that will go down for half an hour every two weeks”[36]. By owning the model deployment, enterprises gain consistent uptime and can tailor performance to their needs. Mistral also offers an admin console for their coding platform, providing granular controls, usage analytics, and team management features[37], which large organizations need to monitor and govern AI usage. In short, Devstral 2 strengthens the enterprise toolkit, from automating code maintenance to serving as a knowledgeable coding assistant that onboards new developers by answering questions about the company’s codebase.

Strategic Positioning: Mistral’s Rise in the Global AI Landscape

Mistral AI, often dubbed “Europe’s champion AI lab,” has rapidly grown into a formidable player. The company’s recent €11.7 billion valuation (approx $13.8B) following a major funding round led by semiconductor giant ASML[38] shows the strategic importance Europe places on having its own AI leadership. Unlike the heavily-funded American labs (OpenAI has reportedly raised $57B at a staggering $500B valuation[39]), Mistral operates with comparatively “peanuts” in funding[39]. This financial contrast has informed Mistral’s strategy: open-weight models and efficiency over sheer size. Rather than engage in a parameter arms race with the likes of GPT-4 or GPT-5, Mistral’s philosophy is that bigger isn’t always better – especially for enterprise use cases[40]. As Lample explained, many enterprise tasks can be handled by smaller, fine-tuned models more cheaply and quickly[41]. Devstral 2 perfectly exemplifies this approach: it’s smaller than the closed-source frontier models, yet highly optimized for coding tasks that enterprises care about.

By open-sourcing high-performance models, Mistral is positioning itself as the antithesis of the closed AI paradigm dominated by Silicon Valley. OpenAI’s and Anthropic’s flagship models, while incredibly powerful, are proprietary and accessed only via API. Mistral explicitly rejects that closed approach: “We don’t want AI to be controlled by only a couple of big labs”, Lample says[42]. Instead, Mistral wants to democratize advanced AI by releasing weights and enabling anyone to run and modify the models. This stance has quickly earned Mistral a central role in the AI open-source ecosystem. Their earlier model suite (the Mistral 3 family launched Dec 2, 2025) included a 675B-parameter multimodal MoE “Large 3” and nine smaller models, all openly released[43][44]. Devstral 2 now builds on that foundation, targeting the important coding domain. Each release cements Mistral’s reputation as a trailblazer for open, high-quality AI and a provider of “frontier” models that rival closed models on capability[44].

Strategically, Devstral 2 also allows Mistral to forge industry partnerships and a developer ecosystem. Alongside the model, Mistral announced integrations with agent tools like Kilo Code and Cline (popular frameworks for autonomous coding agents) to ensure Devstral is readily usable in those systems[45]. They also made the Vibe CLI extension available in the Zed IDE[46], indicating a savvy go-to-market approach of meeting developers where they already work. By embedding their tech into workflows and fostering community contributions (the CLI is open-source Apache 2.0[47]), Mistral is strengthening its place in the ecosystem. This is a different playbook from big U.S. labs – one that emphasizes community uptake and trust. It positions Mistral not just as an AI model vendor, but as a platform builder for AI-assisted development, which could yield network effects as more users and organizations adopt their tools.

A Shift Toward AI Multipolarism: US, China, and EU Flagship Models

The release of Devstral 2 highlights an ongoing shift to a multipolar AI world, where leadership is distributed among the US, China, and Europe rather than dominated by a single region. Each of these spheres has been rapidly developing flagship AI models, often with different philosophies:

· United States – Closed Frontier Models: The US still leads in bleeding-edge model capability, with OpenAI and Anthropic at the forefront. OpenAI’s GPT-4 (and the anticipated GPT-5 series) set the bar for many benchmarks, but remain entirely proprietary. Anthropic’s Claude 4 and Claude Sonnet specialize in safer, reasoning-focused AI, also closed-source but increasingly targeting coding workflows (e.g. Sonnet 4.5 with 1M-token context for code)[48]. These companies favor controlled API access and have massive compute budgets – a trend that raised concerns abroad about over-reliance on American AI. Interestingly, even in the US, companies like Meta have bucked the trend by open-sourcing Llama models, but many of the most advanced systems are still closed.

· China – Open Innovation Surge: In the last two years, China’s AI labs have made a strategic pivot to open-source releases, in part to gain global adoption and in part to reduce dependency on Western tech. For example, Baidu recently open-sourced a multimodal model (ERNIE 4.5-VL) under Apache 2.0, claiming it rivals Google’s and OpenAI’s latest on vision-language tasks[49][50]. Baidu’s model uses an MoE architecture for efficiency, activating only ~3B of its 28B parameters at a time, which allows it to run on a single 80GB GPU[51][52]. This shows China’s emphasis on practical deployability and open access, contrasting with Western firms that guard their strongest models. DeepSeek (a prominent Chinese AI lab) similarly follows an open approach: its DeepSeek-Coder series is open-source and was trained on massive bilingual code datasets[53]. DeepSeek-Coder-V2 supports 338 programming languages and a 128K context[54], and it claims performance comparable to GPT-4 Turbo on code tasks[11], a bold claim backed by benchmark results in which it beats GPT-4 on some coding and math challenges[55]. Additionally, Moonshot AI’s Kimi K2, with 1 trillion parameters (MoE), is another Chinese open model designed for code generation and agentic problem-solving[56]. These efforts indicate that China is rapidly producing its own GPT-4-class models, often open or semi-open, to foster a homegrown ecosystem and to compete globally by leveraging the power of open collaboration.

· European Union – Mistral’s Open-Weight Offensive: Europe, through Mistral and a handful of other initiatives, is establishing a third pillar of AI leadership. Mistral’s models – from the Large 3 MoE to the new Devstral coding series – are explicitly positioned as Europe’s answer to the closed models from overseas[12]. The EU’s approach leans heavily on openness and trust. European policymakers have expressed support for open AI research, seeing it as a way to ensure technological sovereignty (so EU companies are not entirely beholden to US APIs or Chinese tech). Mistral’s success in raising capital (with backing from European industry leaders like ASML) and delivering high-performing open models is a proof point that world-class AI can be built outside Silicon Valley. It also complements EU regulations that emphasize transparency: open models allow easier auditing and adaptation to local norms. With Devstral 2, Europe now has a flagship code model that can stand up against the best from the US (Claude, GPT-based coders) and China (DeepSeek, Kimi). It embodies a multilateral approach to AI progress, where collaboration and open innovation are valued alongside raw performance.

This multipolar trend in AI is likely to benefit developers and enterprises globally. Competition pushes each player to innovate: OpenAI will race to make GPT-5 even more powerful, Anthropic will focus on enormous context and safety, Chinese labs will continue opening models with novel techniques (as seen with Baidu’s efficient MoE vision models), and Mistral will keep advancing the open state of the art while enabling broad access. For example, after Mistral’s open releases, we saw Baidu adopting permissive Apache licensing as a competitive move[50], and conversely, Mistral is now using techniques that Chinese labs have also pushed hard (e.g. long context windows, and MoE routing in its other models).

In a multipolar AI world, developers have more choice. They can pick an open-source European model for privacy, a Chinese model for cost-efficiency, or an American API for sheer capability – or mix and match. This reduces the dominance of any single company or country over AI technology. As Mistral’s team put it, the mission is to ensure AI isn’t controlled by only a couple of big labs[42]. With Devstral 2’s release, that vision takes a significant step forward. AI innovation is becoming a global, collaborative endeavor, much like open-source software, and the “vibe” is decidedly in favor of openness and diversity.

Conclusion

Mistral Devstral 2 arrives at a pivotal moment in AI – a moment when openness and collaboration are proving their value against closed incumbents. For developers, it means a powerful new coding assistant they can truly own, tweak, and trust. For organizations, it offers a path to leverage top-tier AI coding capabilities with greater control over cost and data. And for the industry at large, Devstral 2 is a reminder that AI progress is no longer confined to a Silicon Valley monopoly. Europe’s Mistral, with its open-weight ethos, is riding the wave of “vibe coding” and pushing the boundaries of what open models can do in production[57][58]. As AI becomes increasingly multipolar, the real winners will be those of us who build with these models. We’ll have a rich toolkit of AI models and agents at our disposal – from Devstral and beyond – to supercharge innovation in software development and beyond. The release of Devstral 2 not only strengthens Mistral’s standing, but also empowers the global developer community with state-of-the-art coding AI on their own terms. The next chapter of AI, it appears, will be written by many hands, and Mistral just handed us a very capable pen.

Sources: Mistral AI announcement[1][2][23]; TechCrunch coverage[57][4][38]; Benchmark figures and model comparisons[20][6][18][19]; Anthropic and DeepSeek references[59][48]; VentureBeat report on Baidu[50][51]; TechCrunch interview with Mistral[40][42].

[1] [2] [5] [6] [7] [15] [16] [17] [20] [22] [23] [25] [26] [29] [31] [33] [35] [45] [46] [47] Introducing: Devstral 2 and Mistral Vibe CLI. | Mistral AI

https://mistral.ai/news/devstral-2-vibe-cli

[3] [4] [13] [14] [27] [28] [30] [38] [57] [58] Mistral AI surfs vibe-coding tailwinds with new coding models | TechCrunch

https://techcrunch.com/2025/12/09/mistral-ai-surfs-vibe-coding-tailwinds-with-new-coding-models/

[8] [9] [10] Devstral 2 - Mistral AI | Mistral Docs

https://docs.mistral.ai/models/devstral-2-25-12

[11] [54] [55] [59] deepseek-ai/DeepSeek-Coder-V2-Instruct · Hugging Face

https://huggingface.co/deepseek-ai/DeepSeek-Coder-V2-Instruct

[12] [36] [39] [40] [41] [42] [43] [44] Mistral closes in on Big AI rivals with new open-weight frontier and small models | TechCrunch

[18] HumanEval: When Machines Learned to Code - Runloop

https://runloop.ai/blog/humaneval-when-machines-learned-to-code

[19] Code Llama: Open Foundation Models for Code - alphaXiv

https://www.alphaxiv.org/overview/2308.12950v3

[21] [56] China's Moonshot AI Releases Trillion Parameter Model Kimi K2

https://www.hpcwire.com/2025/07/16/chinas-moonshot-ai-releases-trillion-parameter-model-kimi-k2/

[24] Introducing Claude Sonnet 4.5 - Anthropic

https://www.anthropic.com/news/claude-sonnet-4-5

[32] [34] [37] Mistral releases a vibe coding client, Mistral Code | TechCrunch

https://techcrunch.com/2025/06/04/mistral-releases-a-vibe-coding-client-mistral-code/

[48] What's new in Claude 4.5

https://platform.claude.com/docs/en/about-claude/models/whats-new-claude-4-5

[49] [50] [51] [52] Baidu just dropped an open-source multimodal AI that it claims beats GPT-5 and Gemini | VentureBeat

[53] [2401.14196] DeepSeek-Coder: When the Large Language Model ...