DeepSeek V4 Release Date: Latest Timeline and Pre-Launch Checklist

Hey fellow AI tinkerers — if you've been stress-testing coding assistants inside real projects, you've probably noticed: most models still break when you throw an entire codebase at them.

I've been tracking DeepSeek's V4 development for weeks now, not because I enjoy release date speculation, but because the architectural signals coming out of their research papers suggest this might actually change how we work with AI coding tools. Not "demo well" — actually work in production.

The first thing I asked myself when I saw the leaked benchmarks: "Can this model handle a real refactoring task without hallucinating imports?" Because if it can't survive that test, the rest doesn't matter.

Here's what we know so far, based on technical publications and industry reports from The Information and Reuters.

Current Release Timeline

What DeepSeek Has Confirmed

DeepSeek hasn't issued an official release date announcement. They've maintained operational silence on V4's launch timing, which is consistent with their pattern from the R1 release.

What they have published are research papers that industry observers immediately connected to V4's architecture:

- January 1, 2026: Published Manifold-Constrained Hyper-Connections (mHC) paper on arXiv, co-authored by founder Liang Wenfeng

- January 4, 2026: Updated R1 technical documentation from 22 to 86 pages, revealing full training pipelines

- January 13, 2026: Released Engram conditional memory system research (arXiv:2601.07372)

These aren't random papers. They're architectural blueprints.

Industry Reports (The Information, Reuters)

According to The Information, citing sources with direct knowledge of the project, DeepSeek plans to release V4 around February 17, 2026, coinciding with Lunar New Year. Reuters reported the timeline but noted they couldn't independently verify it.

The timing mirrors DeepSeek's R1 launch strategy: R1 was released just before the 2025 Lunar New Year and triggered a roughly $1 trillion tech stock selloff.

I'll be honest — this confused me at first. Why announce through research papers instead of a press release? Then I realized: DeepSeek is targeting developers, not investors. If you're reading mHC papers at 2am, you're already their user.

Why the February Timing Matters

This isn't just about holiday marketing. There's a strategic logic here that became clear when I mapped out DeepSeek's previous releases:

Pattern Recognition:

- V3 launched in December 2024

- R1 launched January 20, 2025, nine days before Lunar New Year (January 29, 2025)

- V4 targeting mid-February 2026, again near Lunar New Year

DeepSeek leverages the Lunar New Year period for maximum visibility in both Chinese and international markets. It's a cultural moment when tech media attention is high, and developers have downtime to experiment with new tools.

But here's the part that really caught my attention: internal testing by DeepSeek employees reportedly shows V4 outperforming Anthropic's Claude 3.5 Sonnet and OpenAI's GPT-4o in coding tasks.

I'm skeptical of internal benchmarks. They always look good. But the architectural papers behind V4 are real, public on arXiv, and technically sound, even though as preprints they haven't gone through formal peer review. That's what makes this interesting.

The efficiency angle is what I keep coming back to. DeepSeek Sparse Attention reportedly supports context windows beyond 1 million tokens while cutting computational cost by roughly 50% compared with standard dense attention. If that holds up in production, it changes the economics of running AI coding assistants.
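To make the efficiency argument concrete, here's a toy sketch of generic top-k sparse attention for a single query, in pure Python. It is not DeepSeek's DSA implementation; the selection rule, `k`, and both function names are my assumptions. For simplicity it scores every key with a full dot product, whereas a production system would pick candidates with a cheaper scorer. The point is the second function: once each query attends to at most `k` keys, the softmax-and-weighted-sum work stops growing quadratically with context length.

```python
import math

def topk_sparse_attention(query, keys, values, k):
    """Attend from one query to only its k highest-scoring keys (toy sketch)."""
    d = len(query)
    # Scaled dot-product score for every key position
    scores = [sum(q * x for q, x in zip(query, key)) / math.sqrt(d) for key in keys]
    # Keep only the indices of the k largest scores
    top = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:k]
    # Softmax restricted to the selected positions (subtract the max for stability)
    m = max(scores[i] for i in top)
    weights = {i: math.exp(scores[i] - m) for i in top}
    total = sum(weights.values())
    # Weighted sum of the selected value vectors only
    out = [0.0] * len(values[0])
    for i, w in weights.items():
        for j, v in enumerate(values[i]):
            out[j] += (w / total) * v
    return out, sorted(top)

def attention_pairs(context_len, k=None):
    """Query-key pairs in the softmax/value stage of causal attention."""
    if k is None:
        return context_len * (context_len + 1) // 2  # dense: token t sees t keys
    return sum(min(t, k) for t in range(1, context_len + 1))  # sparse: at most k keys

out, picked = topk_sparse_attention([1.0, 0.0], [[1, 0], [0, 1], [2, 0], [-1, 0]],
                                    [[1.0], [2.0], [3.0], [4.0]], k=2)
print(picked, out)
print(attention_pairs(100_000), attention_pairs(100_000, k=2048))
```

The gap between the two `attention_pairs` numbers widens as context grows, which is where any real-world cost saving would come from.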

Pre-Launch Checklist for Developers

I've been preparing my workflow for V4's arrival, not because I'm convinced it'll be perfect, but because I want to test it the moment it drops. Here's my actual checklist:

1. Benchmark Your Current Setup

Before V4 launches, document your existing workflow performance:

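```python
# Track current model performance.
# `model` is a placeholder for your own client wrapper: it's assumed to expose
# `.name` and `.analyze_codebase(path)`, returning an object with `.token_count`.
import time

def benchmark_coding_task(model, codebase_path, evaluate_output):
    """Time one codebase-analysis run and score its output with your own evaluator."""
    start = time.perf_counter()  # monotonic, high-resolution clock for timing
    result = model.analyze_codebase(codebase_path)
    elapsed = time.perf_counter() - start
    return {
        'model': model.name,
        'time': elapsed,
        'tokens_used': result.token_count,
        'accuracy': evaluate_output(result),  # your scoring function, e.g. tests passed
    }

# Run this with your current model (Claude, GPT, etc.)
baseline = benchmark_coding_task(current_model, "./your_project", evaluate_output)
```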

Why this matters: You need a baseline to know if V4 is actually better for your use case. Demo benchmarks don't tell you if it'll work on your messy legacy code.

2. Prepare Test Cases

I've built a set of real refactoring tasks that consistently break most models:

- Multi-file dependency tracing: Can it follow imports across 20+ files?

- Legacy code modernization: Does it understand context from outdated patterns?

- Bug reproduction: Can it trace a stack trace back to the actual bug source?

Document your own test cases now. When V4 launches, you'll want to run these immediately.
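One way to keep those cases runnable rather than just written down: store each as a prompt plus a pass/fail check, then compute a pass rate per category. A minimal sketch; the `RefactorCase` schema, the category names, and `fake_model` are all hypothetical stand-ins for your own tasks and model client.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class RefactorCase:
    """One hand-written task that has tripped up models before (hypothetical schema)."""
    name: str
    category: str                  # e.g. "dependency-tracing", "legacy", "bug-repro"
    prompt: str
    check: Callable[[str], bool]   # True if the model's output is acceptable

def run_suite(generate: Callable[[str], str], cases: list[RefactorCase]) -> dict[str, float]:
    """Send each case's prompt to `generate` and return the pass rate per category."""
    passed: dict[str, int] = {}
    total: dict[str, int] = {}
    for case in cases:
        total[case.category] = total.get(case.category, 0) + 1
        if case.check(generate(case.prompt)):
            passed[case.category] = passed.get(case.category, 0) + 1
    return {cat: passed.get(cat, 0) / n for cat, n in total.items()}

# Illustrative cases; replace prompts and checks with your own project's tasks
cases = [
    RefactorCase("trace-config-import", "dependency-tracing",
                 "Which module defines load_config?", lambda out: "settings.py" in out),
    RefactorCase("py2-print", "legacy",
                 "Modernize: print 'hi'", lambda out: "print(" in out),
]

# A stand-in "model" so the harness can be exercised before V4 exists
def fake_model(prompt: str) -> str:
    return "load_config lives in settings.py"

print(run_suite(fake_model, cases))  # → {'dependency-tracing': 1.0, 'legacy': 0.0}
```

On launch day, swap `fake_model` for a function that calls V4 and rerun the same suite against your baseline model.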

3. Set Up Infrastructure

V4 is reportedly designed to run on consumer-grade hardware: dual NVIDIA RTX 4090s or a single RTX 5090 for the consumer tier.
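Whether a given model fits on those cards is mostly arithmetic: weight memory in GB ≈ parameters (in billions) × bits per weight ÷ 8. The sketch below checks that against two RTX 4090s (24 GB each) or one RTX 5090 (32 GB). V4's parameter count and quantization aren't public, so every model size here is a placeholder, and the 20% headroom reserved for KV cache and activations is my rough assumption, not a measured figure.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """GB for the weights alone: billions of params × bits per weight / 8 bits per byte."""
    return params_billion * bits_per_weight / 8

def fits(params_billion: float, bits_per_weight: float, vram_gb: float,
         headroom: float = 0.2) -> bool:
    """True if the weights fit while leaving `headroom` (assumed 20%) for KV cache etc."""
    return weight_memory_gb(params_billion, bits_per_weight) <= vram_gb * (1 - headroom)

DUAL_4090_GB = 2 * 24   # two RTX 4090s, 24 GB each
SINGLE_5090_GB = 32     # one RTX 5090

# Hypothetical model sizes; swap in V4's real numbers once they're published
for params, bits in [(32, 4), (70, 4), (70, 8)]:
    need = weight_memory_gb(params, bits)
    print(f"{params}B @ {bits}-bit: {need:.0f} GB | "
          f"2x4090: {fits(params, bits, DUAL_4090_GB)} | "
          f"5090: {fits(params, bits, SINGLE_5090_GB)}")
```

Note that splitting a model across two cards also depends on your serving stack supporting tensor or pipeline parallelism, which this arithmetic ignores.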

Check your deployment options. If you're planning local deployment, prepare your Ollama or vLLM instances for the update; DeepSeek has typically released open weights.
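Both Ollama and vLLM can serve an OpenAI-compatible `/v1/chat/completions` endpoint (Ollama listens on port 11434 by default, vLLM's server on port 8000), so one small client can smoke-test either backend. A stdlib-only sketch; the model name `deepseek-v4` is a placeholder, since no V4 tag exists yet, so substitute whatever name your server actually exposes.

```python
import json
import urllib.request

def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a POST to an OpenAI-compatible /v1/chat/completions endpoint."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0,  # low temperature makes run-to-run comparisons easier
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def chat(base_url: str, model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the request and return the first choice's message text."""
    req = build_chat_request(base_url, model, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]

# Usage (needs a running server; "deepseek-v4" is a placeholder model name):
# print(chat("http://localhost:11434", "deepseek-v4", "Summarize utils.py"))  # Ollama
# print(chat("http://localhost:8000", "deepseek-v4", "Summarize utils.py"))   # vLLM
```

Keeping request construction separate from the network call lets you verify the payload offline before the weights even exist.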

4. Review Architectural Changes

The two major innovations in V4 are worth understanding before launch:

mHC (Manifold-Constrained Hyper-Connections): Enables stable training at scale by maintaining residual connection properties, preventing gradient explosions that typically occur beyond 60 layers. In tests on 3B, 9B, and 27B parameter models, mHC-powered LLMs performed better across eight different AI benchmarks with only 6.27% hardware overhead.
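My reading of the mHC paper (treat this as my interpretation, not DeepSeek's code) is that hyper-connections keep several parallel residual streams and mix them with a learned matrix, and mHC constrains that matrix to be doubly stochastic (non-negative, every row and column summing to 1) by projecting it with Sinkhorn-Knopp iterations. Because every column sums to 1, the mix preserves the total across streams, and a doubly stochastic matrix has spectral norm 1, so it can't amplify the combined signal layer after layer. The toy below shows only that projection-and-mix idea; the stream count, iteration count, and function names are assumptions.

```python
import math

def sinkhorn_knopp(logits, iters=50):
    """Turn a square matrix of real logits into an (approximately) doubly stochastic matrix."""
    m = [[math.exp(x) for x in row] for row in logits]  # exp makes every entry positive
    n = len(m)
    for _ in range(iters):
        row_sums = [sum(row) for row in m]
        m = [[x / s for x in row] for row, s in zip(m, row_sums)]  # each row sums to 1
        col_sums = [sum(m[i][j] for i in range(n)) for j in range(n)]
        m = [[m[i][j] / col_sums[j] for j in range(n)] for i in range(n)]  # each column sums to 1
    return m

def mix_streams(h, streams):
    """out_i = sum_j h[i][j] * streams[j]: mix n residual streams with matrix h."""
    n, dim = len(streams), len(streams[0])
    return [[sum(h[i][j] * streams[j][d] for j in range(n)) for d in range(dim)]
            for i in range(len(h))]

# Hypothetical 3-stream example (mHC's real stream count and shapes may differ)
h = sinkhorn_knopp([[2.0, -1.0, 0.5], [0.0, 1.0, 3.0], [-2.0, 0.5, 1.0]])
streams = [[1.0, 2.0], [3.0, -1.0], [0.5, 0.5]]
mixed = mix_streams(h, streams)
print([sum(s[d] for s in streams) for d in range(2)])  # per-dimension totals before mixing
print([sum(s[d] for s in mixed) for d in range(2)])    # ...and after: equal up to float rounding
```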

Engram Conditional Memory: Separates static memory retrieval from dynamic neural computation using deterministic hash-based lookups (O(1) complexity) for static patterns while reserving computational resources for genuine reasoning tasks.

What this means in practice: V4 should be able to remember your codebase patterns without wasting compute recalculating them every time. That's the theory. We'll see if it holds up.
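The O(1) claim is easiest to see with a toy: hash the last few token IDs into a fixed-size table and read out a stored vector, so lookup cost doesn't depend on how much has been memorized. This sketch only illustrates deterministic hash-based retrieval; the N-gram length, table size, hash choice (BLAKE2 from `hashlib`), and class name are my assumptions rather than Engram's actual design, and a real system would learn the table entries during training.

```python
import hashlib

class HashedNgramMemory:
    """Toy conditional memory: maps the trailing n token IDs to a stored vector in O(1)."""

    def __init__(self, table_size: int, dim: int, n: int = 2):
        self.table_size = table_size
        self.n = n
        # In a trained model these rows would be learned; here they start at zero
        self.table = [[0.0] * dim for _ in range(table_size)]

    def slot(self, token_ids: list[int]) -> int:
        """Deterministically hash the trailing n-gram to a table index."""
        key = ",".join(map(str, token_ids[-self.n:])).encode()
        digest = hashlib.blake2b(key, digest_size=8).digest()
        return int.from_bytes(digest, "big") % self.table_size

    def write(self, token_ids: list[int], vector: list[float]) -> None:
        self.table[self.slot(token_ids)] = list(vector)

    def read(self, token_ids: list[int]) -> list[float]:
        # One hash plus one index: cost is independent of how many entries are stored
        return self.table[self.slot(token_ids)]

mem = HashedNgramMemory(table_size=1024, dim=3, n=2)
mem.write([17, 42, 99], [0.1, 0.2, 0.3])   # stored under the bigram (42, 99)
print(mem.read([5, 42, 99]))               # → [0.1, 0.2, 0.3]
```

The trade-off is collisions: different N-grams can land in the same slot, which is why a fixed table like this only suits patterns you're willing to approximate.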

5. Define Success Metrics

I'm tracking these specific metrics for V4 evaluation:

```markdown
## V4 Evaluation Framework

### Performance
- [ ] Context window: Can it actually process 1M+ tokens?
- [ ] Latency: Time-to-first-token for complex queries
- [ ] Consistency: Same query, same result across sessions

### Accuracy
- [ ] Bug detection rate on known issues
- [ ] False positive rate in code suggestions
- [ ] Understanding of project-specific conventions

### Cost
- [ ] Inference cost per 1K tokens
- [ ] Cost vs. Claude/GPT for equivalent tasks
- [ ] Local deployment viability for team size

### Integration
- [ ] API compatibility with existing tools
- [ ] Context management for long sessions
- [ ] Error handling and recovery
```
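Two of those items, cost and consistency, reduce to simple arithmetic once you log token counts and outputs. A sketch: the per-1K-token prices below are placeholders, not real quotes, so fill them in from each provider's pricing page; exact string match is a deliberately strict stand-in for "same result" (you might normalize whitespace or compare test outcomes instead).

```python
from collections import Counter

def task_cost(input_tokens: int, output_tokens: int,
              price_in_per_1k: float, price_out_per_1k: float) -> float:
    """Dollar cost of one task given per-1K-token input and output prices."""
    return input_tokens / 1000 * price_in_per_1k + output_tokens / 1000 * price_out_per_1k

def consistency_rate(outputs: list[str]) -> float:
    """Share of repeated runs that produced the most common output for the same query."""
    if not outputs:
        raise ValueError("need at least one output")
    most_common_count = Counter(outputs).most_common(1)[0][1]
    return most_common_count / len(outputs)

# Placeholder prices, NOT real quotes
print(task_cost(120_000, 4_000, price_in_per_1k=0.5, price_out_per_1k=1.5))  # → 66.0
print(consistency_rate(["fix A", "fix A", "fix B", "fix A"]))                 # → 0.75
```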

The key question I keep asking: Does this reduce time-to-working-code, or just time-to-generated-code? Those are very different things.
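One way to make that distinction measurable: record when the first candidate patch is generated and, separately, when a candidate first passes your test suite. A minimal sketch, where `generate_patch` and `tests_pass` are hypothetical hooks you'd wire to your model client and test runner.

```python
import time
from typing import Callable

def time_to_working_code(generate_patch: Callable[[int], str],
                         tests_pass: Callable[[str], bool],
                         max_attempts: int = 5) -> dict:
    """Seconds to the first generated patch vs. the first patch that passes tests."""
    start = time.perf_counter()
    to_generated = None
    for attempt in range(max_attempts):
        patch = generate_patch(attempt)              # hypothetical model call
        if to_generated is None:
            to_generated = time.perf_counter() - start
        if tests_pass(patch):                        # hypothetical test-runner hook
            return {"to_generated": to_generated,
                    "to_working": time.perf_counter() - start,
                    "attempts": attempt + 1}
    # No passing patch within the attempt budget: generated code, but no working code
    return {"to_generated": to_generated, "to_working": None, "attempts": max_attempts}

# Stub hooks: the "model" only gets it right on the third try
result = time_to_working_code(lambda i: f"patch-{i}", lambda p: p == "patch-2")
print(result["attempts"])  # → 3
```

A large gap between `to_generated` and `to_working`, or a `to_working` of `None`, is exactly the "fast but wrong" pattern worth catching.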

6. Monitor Release Channels

Where to watch for the actual launch:

- arXiv: DeepSeek's new technical papers often precede releases by days

- Hugging Face: Model weights typically appear here first

- DeepSeek GitHub: Official repositories for inference code

- The Information: They broke the V4 story and likely have insider sources
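If you'd rather poll than refresh, Hugging Face exposes a public model-listing API at `https://huggingface.co/api/models` that accepts `author`, `sort`, `direction`, and `limit` query parameters. The sketch below assumes DeepSeek keeps publishing under the `deepseek-ai` organization and that a V4 repo name would contain "V4"; both are guesses. The filtering function is separate from the network call so you can test it offline.

```python
import json
import urllib.parse
import urllib.request

HF_MODELS_API = "https://huggingface.co/api/models"

def listing_url(author: str = "deepseek-ai", limit: int = 20) -> str:
    """Newest-first model listing for one organization on the Hugging Face Hub."""
    query = urllib.parse.urlencode(
        {"author": author, "sort": "lastModified", "direction": -1, "limit": limit})
    return f"{HF_MODELS_API}?{query}"

def matching_repos(models: list[dict], marker: str = "v4") -> list[str]:
    """Repo IDs whose name contains `marker`, case-insensitively (assumed naming scheme)."""
    ids = [m.get("id") or m.get("modelId", "") for m in models]
    return [repo for repo in ids if marker.lower() in repo.lower()]

def check_for_v4(author: str = "deepseek-ai") -> list[str]:
    """One poll of the Hub; run it from cron or a loop with a polite delay."""
    with urllib.request.urlopen(listing_url(author), timeout=30) as resp:
        return matching_repos(json.load(resp))
```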

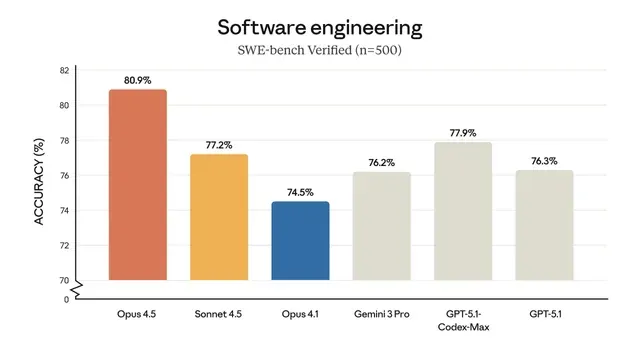

I'm also monitoring the SWE-bench Verified leaderboard, where Claude Opus 4.5 currently leads with an 80.9% solve rate. If V4 appears there above 80%, that's a legitimate signal.

We'll Update This Post at Launch

I'm tracking three critical signals that will confirm V4's actual release:

- Model weights on Hugging Face: The definitive release confirmation

- API endpoint availability: Cloud access typically follows within 24-48 hours

- Independent benchmark results: Real testing, not internal claims

When V4 launches, I'll run my full test suite and publish results here. Not speculation — actual performance data on real coding tasks.

The waiting game is the hardest part. You've built workflows around current models. You've learned their quirks. Now there's this promise of something better.

At Macaron, we built a personal AI that remembers what you care about and turns one-sentence requests into actual tools. I've been using it to organize my V4 evaluation plan — asked it to create a test scenario tracker, and it generated a mini-app where I can list cases, tag priorities, and check them off as I run them. If you need to keep your thoughts organized without opening another productivity app while you wait for V4, try creating your own tracker at macaron.im. One sentence, see what it builds, judge if it's useful.

Until February, keep your test cases ready and your skepticism healthy. The best way to evaluate V4 won't be reading benchmarks — it'll be running your messiest codebase through it and seeing what breaks.