Prep Macaron Workflows for GLM-5: Agent Templates (Pre-Release)

While everyone else is obsessing over betting markets and rumored benchmarks for the upcoming GLM-5 release, I’m focused on something far less sexy but infinitely more critical: stability. Let’s be real—if your AI agents are tightly coupled to a specific model’s quirks, every upgrade is a migration nightmare.

I refuse to let a model update break my daily operations again. That’s why I’ve spent the last few weeks inside Macaron designing "schema-first" workflows that can absorb GLM-5—or any new model—without a full rebuild. Whether it’s rumored updates in agentic capabilities or creative writing, the goal is simple: when the new API drops, I want to change one parameter and keep moving. Here is the exact setup I’m using to make that happen.

Why "pre-release workflow prep" saves time (and avoids messy migrations)

AI models leapfrog each other constantly. GLM-4.7 just dropped in January with improvements in agentic coding. GLM-5 is expected within weeks with (allegedly) AGI-level reasoning. Every one of those releases turns into a migration project if your workflows depend on how one specific model behaves.

I learned this the hard way with the GLM-4.6 to 4.7 transition. I had prompts that assumed a certain refusal behavior, tool-calling patterns that broke with the new "Preserved Thinking" mode, and no fallback when the API got weird during the rollout. It took me three days to stabilize things that should have been a one-line model swap.

The fix isn't to predict what GLM-5 will do. It's to build modular workflows that treat the model as a swappable component. Here's what that actually means:

- Event-driven structure: Instead of hardcoding "GLM-4.7 does X," build agents that receive tasks, execute steps, and validate outputs against a schema. The model is just the execution layer.

- Schema-first outputs: Define what you need back (JSON, markdown, structured data) before you write the prompt. This way, when you swap models, you're testing against the same contract.

- Graceful degradation: Always have a fallback. If GLM-5 hallucinates or refuses unexpectedly, your workflow should route to GLM-4.7 or flag for manual review — not crash.
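A minimal sketch of the graceful-degradation idea, assuming a hypothetical `call_model(model_id, prompt)` wrapper around whatever client you use and an `is_valid` check you supply. Neither name comes from Macaron or the GLM API; they are placeholders for illustration.

```python
from typing import Callable, Sequence

# Assumed signature: (model_id, prompt) -> raw text. Wrap your real client to match.
ModelCall = Callable[[str, str], str]


def run_with_fallback(
    call_model: ModelCall,
    prompt: str,
    is_valid: Callable[[str], bool],
    model_ids: Sequence[str] = ("glm-5", "glm-4.7"),
) -> dict:
    """Try each model in order; flag for manual review if none passes."""
    for model_id in model_ids:
        try:
            output = call_model(model_id, prompt)
        except Exception:
            # API error (for example during a rollout): try the next model.
            continue
        if is_valid(output):
            return {"status": "ok", "model": model_id, "output": output}
    # Nothing passed validation: hand off to a human instead of crashing.
    return {"status": "needs_review", "model": None, "output": None}


# Usage with a stub that simulates the new model being unavailable.
def stub_call(model_id: str, prompt: str) -> str:
    if model_id == "glm-5":
        raise RuntimeError("simulated outage")
    return '{"summary": "stub"}'


result = run_with_fallback(stub_call, "Summarize this.", lambda s: s.startswith("{"))
print(result["status"], result["model"])  # ok glm-4.7
```

The order of `model_ids` is the whole policy here: newest model first, known-good model second, human last.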

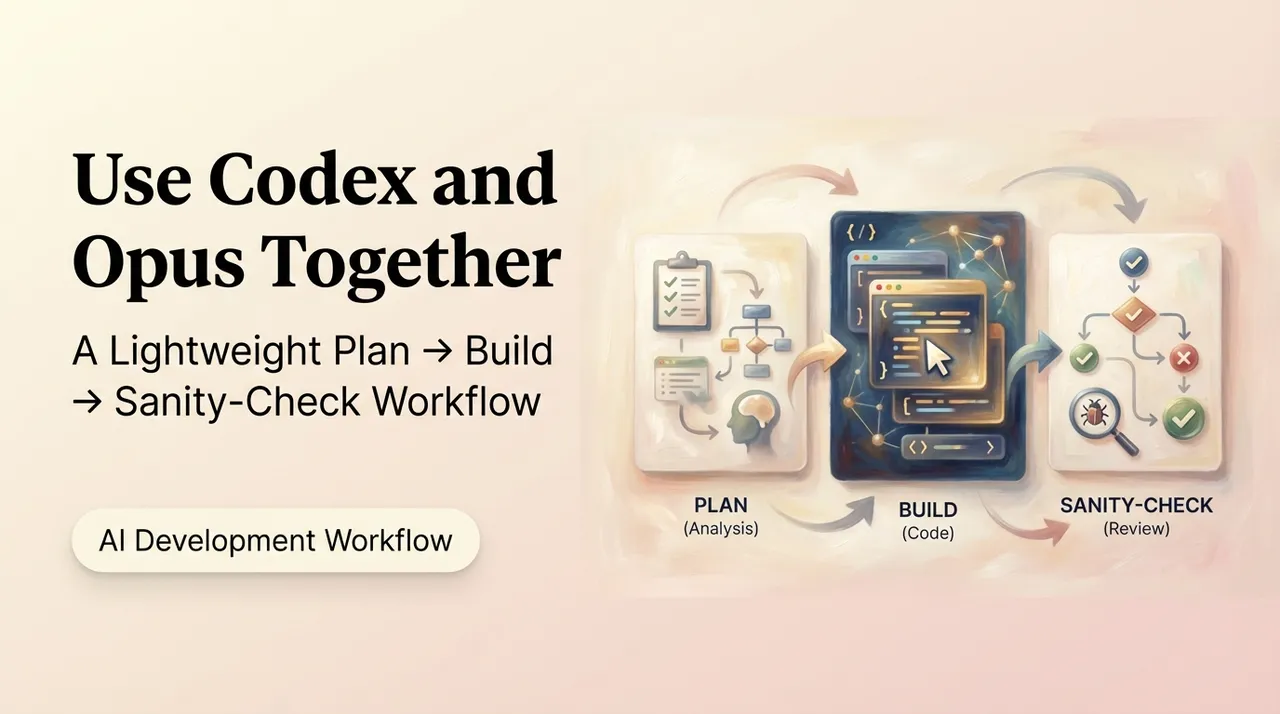

The pattern I've settled on is this: Define the workflow as a chain of agents (Reading → Processing → Action), standardize the outputs, and keep the model calls isolated. When GLM-5 drops, I change one API parameter. That's it.

This approach saved me probably 10-15 hours during the last upgrade. It'll save more when GLM-5 lands because the rumored changes (deeper reasoning, better tool orchestration) could break prompts that rely on current behavior.

Template 1 — Reading → Notes → Next Actions (for articles, docs, research)

I use this workflow constantly: read something (article, PDF, transcript), extract the key points, generate structured notes, and figure out what to do next. It's not glamorous, but it's probably 40% of my LLM usage.

Here's how I've structured it to be model-agnostic:

Step 1: Reading agent — Takes raw content (text, URL, or uploaded doc) and produces a summary. Right now, this is where I'd use GLM-5's rumored creative writing upgrades — better coherence, less repetition. The prompt is simple: "Summarize this in 3-5 sentences. Focus on [user context]."

Step 2: Notes agent — Extracts key points, quotes, and tags. This step enforces a schema (more on that below) so the output is always structured, even if the model changes.

Step 3: Actions agent — Suggests next steps based on the notes. This is where agentic capabilities matter. With GLM-4.7's "Preserved Thinking", it maintains context across turns. GLM-5 should improve this with deeper reasoning, making multi-step planning more reliable.

The chain works because each agent has a clear input/output contract. When I tested this with different models (Claude, GPT-4, GLM-4.7), the only thing I changed was the model ID. The workflow stayed intact.
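To make the "only the model ID changes" point concrete, here is a rough sketch of the three-step chain. The `call_model` wrapper, the `ReadingChain` class, and the notes/actions prompts are illustrative assumptions; only the summary prompt is taken from Step 1 above.

```python
from dataclasses import dataclass
from typing import Callable

# Assumed signature: (model_id, prompt) -> raw text.
ModelCall = Callable[[str, str], str]


@dataclass
class ReadingChain:
    call_model: ModelCall
    model_id: str  # the only value that changes on a model swap

    def read(self, content: str, user_context: str) -> str:
        prompt = f"Summarize this in 3-5 sentences. Focus on {user_context}.\n\n{content}"
        return self.call_model(self.model_id, prompt)

    def take_notes(self, summary: str) -> str:
        # Hypothetical prompt wording.
        prompt = f"Extract key points, quotes, and tags as JSON.\n\n{summary}"
        return self.call_model(self.model_id, prompt)

    def suggest_actions(self, notes: str) -> str:
        # Hypothetical prompt wording.
        prompt = f"Suggest next actions with priorities as JSON.\n\n{notes}"
        return self.call_model(self.model_id, prompt)

    def run(self, content: str, user_context: str) -> dict:
        summary = self.read(content, user_context)
        notes = self.take_notes(summary)
        actions = self.suggest_actions(notes)
        return {"summary": summary, "notes": notes, "next_actions": actions}


# Stub model so the sketch runs without network access.
calls = []


def stub_call(model_id: str, prompt: str) -> str:
    calls.append(model_id)
    return f"{model_id} output {len(calls)}"


before = ReadingChain(stub_call, "glm-4.7").run("article text", "pricing changes")
after = ReadingChain(stub_call, "glm-5").run("article text", "pricing changes")
```

Both runs go through identical steps and return the same keys; the model ID passed to the constructor is the only difference.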

Output schema you can reuse across models

This is the critical part. If your outputs are unstructured text, you're rebuilding the workflow every time you switch models. Here's the schema I use for the Reading → Notes → Actions template:

    {
      "summary": "string (concise overview)",
      "notes": [
        {
          "key_point": "string",
          "quote": "string (optional)",
          "tags": ["array of strings"]
        }
      ],
      "next_actions": [
        {
          "action": "string",
          "priority": "high | medium | low",
          "due_date": "YYYY-MM-DD (optional)"
        }
      ]
    }

Why this works:

- Model-agnostic: Any model that can follow instructions can fill this. I've tested it with GLM-4.7, Claude Sonnet, and GPT-4 — same schema, different models.

- Validates easily: I run a simple check (Do the keys exist? Are the arrays populated?) before passing the output downstream. If validation fails, I retry with a clearer prompt or fall back to a simpler model.

- Extensible: Need to add "confidence scores" or "related sources"? Just add fields. The core structure doesn't break.
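The "simple check" can be as small as the sketch below. It assumes the model returns the schema above as a JSON string; the function name `validate_output` and the exact error messages are made up for illustration, and optional fields (`quote`, `due_date`) are deliberately not checked.

```python
import json

PRIORITIES = ("high", "medium", "low")


def validate_output(raw: str) -> list:
    """Return a list of problems; an empty list means the output passes."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top level is not a JSON object"]

    problems = []
    summary = data.get("summary")
    if not isinstance(summary, str) or not summary.strip():
        problems.append("summary missing or empty")

    notes = data.get("notes")
    if not isinstance(notes, list) or not notes:
        problems.append("notes missing or empty")
        notes = []
    for i, note in enumerate(notes):
        if not isinstance(note, dict) or not isinstance(note.get("key_point"), str):
            problems.append(f"notes[{i}].key_point missing")
        elif not isinstance(note.get("tags"), list):
            problems.append(f"notes[{i}].tags is not a list")

    actions = data.get("next_actions")
    if not isinstance(actions, list) or not actions:
        problems.append("next_actions missing or empty")
        actions = []
    for i, item in enumerate(actions):
        if not isinstance(item, dict) or not isinstance(item.get("action"), str):
            problems.append(f"next_actions[{i}].action missing")
        elif item.get("priority") not in PRIORITIES:
            problems.append(f"next_actions[{i}].priority invalid")
    return problems


good = json.dumps({
    "summary": "Short overview.",
    "notes": [{"key_point": "Main idea", "tags": ["llm"]}],
    "next_actions": [{"action": "Draft follow-up", "priority": "high"}],
})
bad = json.dumps({
    "summary": "",
    "notes": [],
    "next_actions": [{"action": "Ship it", "priority": "urgent"}],
})
```

Returning a list of problems rather than a bare boolean makes the retry step easier: the failures can be fed straight back into the "clearer prompt".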

The first time I used this schema, I was skeptical. It felt overly rigid. But the moment I swapped from GPT-4 to GLM-4.7 mid-project and nothing broke, I got it. The rigidity is the point — it absorbs model variance.

Template 2 — Weekly planning with constraints (time, energy, priorities)

This one's more personal, but it's where I've seen the biggest gains from agentic models. I use it to plan my week with real constraints: limited time blocks, energy levels (I'm useless after 6 PM), and shifting priorities.

The workflow:

Planning agent — Takes a list of tasks and constraints (e.g., "3 hours available Monday AM, low energy Thursday PM") and builds a schedule. This is where GLM-5's reasoning improvements could shine — better constraint optimization, fewer conflicts.

Constraint agent — Validates that the plan respects the rules (no deep work in low-energy slots, no back-to-back meetings). Right now, GLM-4.7 occasionally ignores edge cases (like forgetting travel time between meetings). I'm hoping GLM-5's deeper reasoning fixes this.

Execution agent — Tracks progress and adjusts mid-week. This is where "Preserved Thinking" matters — the agent needs to remember why it scheduled things a certain way.
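Part of the constraint check doesn't need a model at all. As a sketch, assuming the planning agent's schedule has already been parsed into simple blocks (the `Block` type, the `"deep_work"`/`"meeting"` labels, and the 15-minute gap are all hypothetical), the two rules mentioned above can be verified deterministically:

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Block:
    title: str
    kind: str  # "deep_work" or "meeting" (assumed labels)
    start: datetime
    end: datetime


def overlaps(a_start, a_end, b_start, b_end) -> bool:
    """True when the two half-open intervals share any time."""
    return a_start < b_end and b_start < a_end


def check_plan(blocks, low_energy, min_gap=timedelta(minutes=15)) -> list:
    """Return human-readable violations; an empty list means the plan passes."""
    violations = []
    # Rule 1: no deep work inside a low-energy window.
    for block in blocks:
        if block.kind != "deep_work":
            continue
        for start, end in low_energy:
            if overlaps(block.start, block.end, start, end):
                violations.append(f"{block.title}: deep work in a low-energy slot")
                break
    # Rule 2: no back-to-back meetings (leave at least min_gap between them).
    meetings = sorted((b for b in blocks if b.kind == "meeting"), key=lambda b: b.start)
    for prev, nxt in zip(meetings, meetings[1:]):
        if nxt.start - prev.end < min_gap:
            violations.append(f"{prev.title} -> {nxt.title}: meetings closer than {min_gap}")
    return violations


day = datetime(2025, 1, 9)  # arbitrary example date
plan = [
    Block("Write report", "deep_work", day.replace(hour=15), day.replace(hour=17)),
    Block("Standup", "meeting", day.replace(hour=10), day.replace(hour=11)),
    Block("Client call", "meeting", day.replace(hour=11), day.replace(hour=11, minute=30)),
]
low_energy = [(day.replace(hour=13), day.replace(hour=18))]
violations = check_plan(plan, low_energy)
```

Anything this check catches can be sent back to the planning agent as explicit feedback, which leaves the model to handle only the fuzzier constraints.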

The hard part isn't the planning logic. It's making the plan adaptive. If I finish a task early or get derailed by something urgent, the system should adjust without requiring me to re-prompt from scratch. GLM-4.7 can do this in short sessions, but it drifts after 5-6 turns. I'm testing whether GLM-5's longer context handling (rumored 400K+ tokens) keeps the plan coherent over a full week.

One thing I've learned: Don't automate the final decision. The agent suggests, I approve. Always. Automation that removes human judgment in planning workflows creates more problems than it solves (more on safety rails below).

Template 3 — Inbox triage (prioritize + draft responses + follow-ups)

This is the workflow I'm most cautious about, because email is high-stakes. But it's also where LLMs can save the most time if you get it right.

The chain:

Triage agent — Scans inbox, prioritizes by urgency/sender, and flags anything that needs immediate attention. I use a simple scoring system (1-5), with rules like "Boss emails = 5, newsletters = 1."

Draft agent — Generates responses for routine emails. This is where GLM-5's creative writing could help — better tone matching, fewer robotic phrases. Right now, I review every draft before sending. Always.

Follow-up agent — Tracks conversations that need replies by a certain date and sets reminders. This requires maintaining state across days, which is where GLM-4.7's "Preserved Thinking" helps.
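The triage scoring rules are simple enough to pin down in code before any model sees the inbox. This sketch assumes a hypothetical `Email` record, a placeholder boss address, a default score of 3, and a flag threshold of 4; only the "boss = 5, newsletters = 1" rule comes from the description above.

```python
from dataclasses import dataclass


@dataclass
class Email:
    sender: str
    subject: str
    is_newsletter: bool = False  # assumed to be set upstream, e.g. from list headers


BOSS_ADDRESSES = {"boss@example.com"}  # placeholder address
DEFAULT_SCORE = 3  # assumption: everything without a rule lands in the middle
FLAG_THRESHOLD = 4  # assumption: 4 and above needs immediate attention


def triage_score(email: Email) -> int:
    """Score urgency from 1 (ignore) to 5 (handle now)."""
    if email.sender.lower() in BOSS_ADDRESSES:
        return 5
    if email.is_newsletter:
        return 1
    return DEFAULT_SCORE


def triage(inbox):
    """Return (score, flagged, email) tuples, most urgent first."""
    scored = [(triage_score(e), e) for e in inbox]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [(score, score >= FLAG_THRESHOLD, e) for score, e in scored]


inbox = [
    Email("news@example.org", "Weekly digest", is_newsletter=True),
    Email("Boss@example.com", "Q3 numbers?"),
    Email("peer@example.com", "Lunch Thursday"),
]
ranked = triage(inbox)
```

Hard rules like these run first; the model only needs to score the messages that fall through to the default.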

The key to making this work is specificity in the prompts. Generic instructions like "draft a professional reply" produce generic outputs. I use prompts like: "Draft a reply to [sender] confirming [specific detail]. Tone: friendly but concise. Max 3 sentences."

I ran this workflow for two weeks with GLM-4.7. Success rate: about 70% (drafts I could send with minimal edits). The 30% failures were mostly tone issues — too formal, too casual, or missing context from earlier in the thread. I'm testing whether GLM-5's deeper reasoning improves that by better understanding conversational context.

Safety rails (what not to automate; when to require manual review)

Here's where I've drawn hard lines:

Never fully automate:

- Anything involving contracts, legal commitments, or financial decisions

- Responses to sensitive topics (HR issues, complaints, conflicts)

- Emails where tone could cause interpersonal problems

Always flag for review:

- High-stakes emails (to clients, leadership, external partners)

- Ambiguous requests (where the agent's confidence is low)

- Anything involving personal data or private information

I use a simple rule: If I'd regret sending this without reading it, the agent shouldn't send it automatically. In practice, this means 80% of drafts go through review, and only routine confirmations ("Yes, I'll attend the meeting") are auto-sent.
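One way to encode that rule is a default-to-review gate: a draft is auto-sent only if it is a routine confirmation and trips none of the flags. The `Draft` fields and the 0.8 confidence cutoff below are assumptions for illustration; how each flag gets set (classifier, keyword rules, recipient lists) is left open.

```python
from dataclasses import dataclass


@dataclass
class Draft:
    is_routine_confirmation: bool
    high_stakes_recipient: bool = False   # clients, leadership, external partners
    sensitive_topic: bool = False         # legal, financial, HR, complaints, conflicts
    contains_personal_data: bool = False
    confidence: float = 1.0               # assumed self-reported score in [0, 1]


def route_draft(draft: Draft, min_confidence: float = 0.8) -> str:
    """Return "auto_send" or "review"; review is the default outcome."""
    flagged = (
        draft.high_stakes_recipient
        or draft.sensitive_topic
        or draft.contains_personal_data
        or draft.confidence < min_confidence
    )
    if draft.is_routine_confirmation and not flagged:
        return "auto_send"
    return "review"


meeting_rsvp = Draft(is_routine_confirmation=True)
client_rsvp = Draft(is_routine_confirmation=True, high_stakes_recipient=True)
vague_reply = Draft(is_routine_confirmation=True, confidence=0.5)
complaint = Draft(is_routine_confirmation=False, sensitive_topic=True)
```

The gate is written so that adding a new flag can only send more drafts to review, never fewer.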

The safety rails aren't about limiting the agent's capabilities. They're about acknowledging that LLMs are probabilistic, and the cost of a bad output in email is high. GLM-5 might reduce the error rate, but it won't eliminate it. So the review step stays.

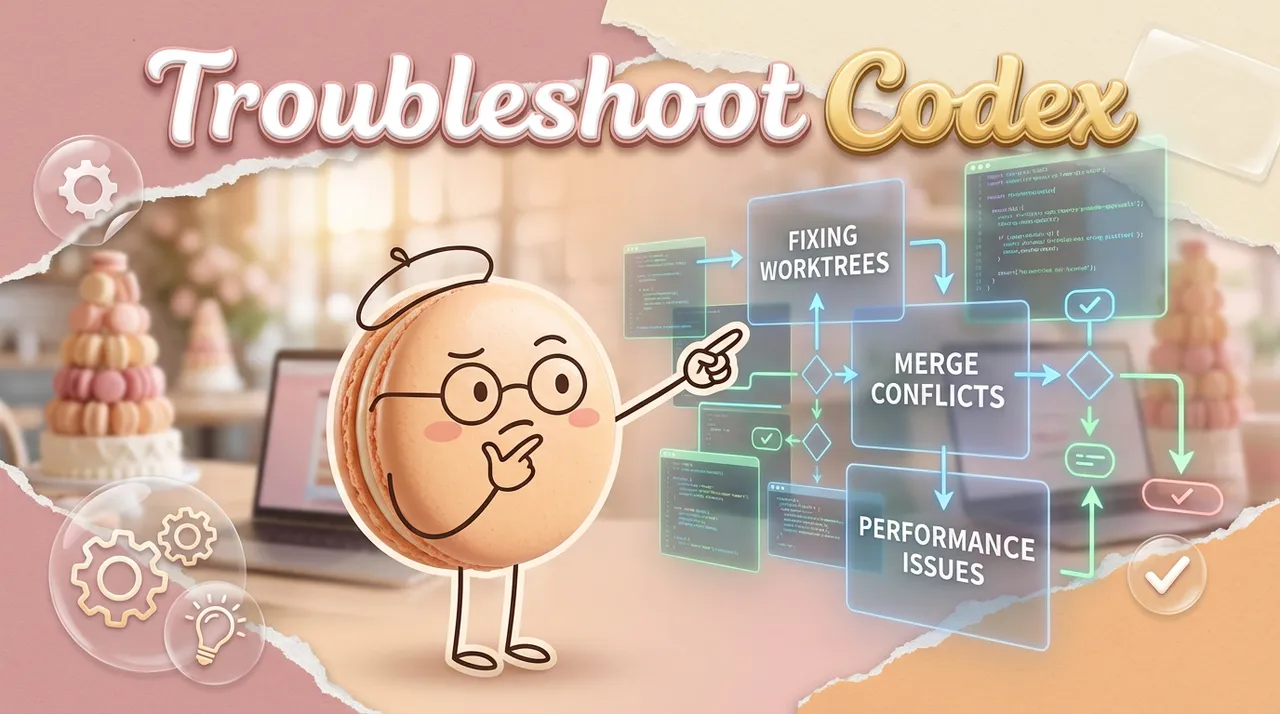

When GLM-5 lands: swap-in rules + rollback playbook

When GLM-5 officially launches (likely mid-February), here's my plan:

Swap-in rules:

- Update the model ID in my API calls (e.g., model="glm-5" instead of model="glm-4.7")

- Run the 20-minute eval set from the GLM-5 vs 4.7 comparison (summarize, extract, plan, tool outputs, refusal behavior)

- A/B test: Route 20% of traffic to GLM-5, keep 80% on GLM-4.7 for the first week

- Monitor for schema breaks, hallucinations, or unexpected refusals
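For the 20/80 split, a deterministic hash of some stable request or task ID keeps each task on the same model across retries, which makes failures easier to compare. A minimal sketch, assuming the model IDs from the example above and a hypothetical `pick_model` helper:

```python
import hashlib


def pick_model(
    request_id: str,
    candidate: str = "glm-5",
    baseline: str = "glm-4.7",
    candidate_share: float = 0.20,
) -> str:
    """Route a stable fraction of requests to the candidate model."""
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    # Map the first 4 bytes of the hash onto 10,000 buckets.
    bucket = int.from_bytes(digest[:4], "big") % 10_000
    return candidate if bucket < candidate_share * 10_000 else baseline


# The same ID always maps to the same model.
first = pick_model("task-42")
second = pick_model("task-42")

# Across many IDs the split lands close to the requested share.
sample = [pick_model(f"task-{i}") for i in range(10_000)]
observed_share = sample.count("glm-5") / len(sample)
```

Setting `candidate_share` to `0.0` is an instant rollback, and raising it in steps is the ramp-up once the eval set looks clean.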

Rollback playbook:

- If GLM-5 fails validation checks (e.g., outputs don't match schema) more than 10% of the time, roll back to GLM-4.7 immediately

- Keep dual-model support for at least two weeks post-launch (use GLM-4.7 as fallback)

- Log every failure with timestamps, prompts, and outputs — this data is critical for debugging

- If there's a major regression (e.g., tool-calling breaks, reasoning drifts), pause the migration and wait for a patch or update
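The 10% rule and the failure log can live in one small object. This is a sketch under assumptions: the `RollbackMonitor` name, the rolling window of 200 results, and the 20-sample minimum before the rule fires are illustrative choices, not part of the playbook above.

```python
from collections import deque
from datetime import datetime, timezone


class RollbackMonitor:
    """Track validation results for the new model and log every failure."""

    def __init__(self, threshold: float = 0.10, window: int = 200, min_samples: int = 20):
        self.threshold = threshold
        self.min_samples = min_samples
        self.results = deque(maxlen=window)  # rolling window of pass/fail booleans
        self.failures = []                   # full log, kept for debugging

    def record(self, prompt: str, output: str, passed: bool) -> None:
        self.results.append(passed)
        if not passed:
            self.failures.append({
                "timestamp": datetime.now(timezone.utc).isoformat(),
                "prompt": prompt,
                "output": output,
            })

    def failure_rate(self) -> float:
        if not self.results:
            return 0.0
        return self.results.count(False) / len(self.results)

    def should_roll_back(self) -> bool:
        # Wait for enough samples so a single early failure doesn't trigger a rollback.
        enough = len(self.results) >= self.min_samples
        return enough and self.failure_rate() > self.threshold


monitor = RollbackMonitor()
for i in range(18):
    monitor.record(f"prompt {i}", "{...}", passed=True)
monitor.record("prompt 18", "not json", passed=False)
monitor.record("prompt 19", "not json", passed=False)
at_threshold = monitor.should_roll_back()    # 2 of 20 failed: exactly 10%, not above it
monitor.record("prompt 20", "refused", passed=False)
over_threshold = monitor.should_roll_back()  # 3 of 21 failed: above 10%
```

In a real setup the `failures` list would be written to disk or a logging service; an in-memory list keeps the sketch self-contained.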

The goal isn't to be first. It's to be stable. I've seen too many rushed migrations where the new model looked great in demos but fell apart under real load. GLM-5 might be amazing on day one, or it might have early bugs (like GLM-4.7 did with refusal behavior). The workflow prep means I can test quickly, but I'm not dependent on the upgrade working perfectly.

Where I am now: Workflows are prepped, schemas are locked in, and I'm running final tests on GLM-4.7 to establish baselines. When GLM-5 drops, I'll swap it in, run the eval, and report back on what actually changed.

This is how I've been working for the last few weeks. If you're in a similar spot — building on LLMs but tired of migration chaos — try standardizing your outputs first. It's not exciting, but it's the difference between a smooth upgrade and three days of debugging.

I've shared the exact templates and schemas I rely on for stability. Now try implementing them inside Macaron: build your first resilient agent and see how easy it is to swap the execution layer when new models drop.

Let me know if GLM-5 breaks anything when it lands. I'll be testing too.