GLM-5 Pricing Watch 2026: Token Budgeting & Cost Control

Is a "cheaper" model actually saving you money if you have to run it three times to get the right answer? That’s the question keeping me up at night as we approach the GLM-5 launch. We saw this with GLM-4.7 FlashX: on paper, it’s a steal. In practice, I’ve seen the "retry tax" on ambiguous tasks erase those savings entirely.

True cost control isn't just about picking the model with the lowest input fees; it’s about understanding failure modes, latency, and the hidden multiplier of "thinking" contexts. Before you route your production traffic to the shiny new GLM-5 endpoints this February, let’s look at the math that determines whether you’re actually optimizing your ROI or just burning tokens on a less capable model.

What typically changes with new-model pricing (tiers, context, "thinking", tools)

Here's the thing about model launches: they're also pricing experiments.

GLM-5 will likely follow the pattern we saw with GLM-4.7 and 4.5 — tiered variants for different use cases. The GLM series has been aggressive here:

- Flash/FlashX for speed (GLM-4.7-FlashX is $0.07/M input, $0.4/M output)

- Air for balanced agentic work

- Flagship for complex reasoning (GLM-4.7 full is $0.6/M input, $2.2/M output)

I like this structure, but here's where it gets messy: each tier has different failure modes. The cheaper models retry more often. That "30% cheaper" FlashX variant? I've seen it need 2-3 retries on complex extractions, which erases the savings.

Context length is the other big shift. GLM-4.5 supports 128K tokens, and there's caching now, with up to 90% off for repeated prefixes. Sounds great. But in practice, if you're not deliberately compressing your prompts, you're paying for context the model never needs. I've caught myself sending 50K tokens of context when 5K would've worked.

Then there's "thinking" modes. This is where pricing gets interesting. Models like GLM-4.5 charge extra (maybe 1.5x) when they do chain-of-thought reasoning. Makes sense — more compute. But the toggle isn't always obvious. I've had tasks where thinking mode added 20% to costs without noticeably better outputs.

Tool calls are the wildcard. Each function call adds latency (200-2000ms) and sometimes direct fees ($1.6-5.0 per 1K calls in some APIs). GLM-5 is rumored to have better agentic orchestration, which might mean more tool-heavy workflows. That's powerful, but it's also a cost multiplier if you're not batching smartly.

The pattern I'm watching: the GLM series has been undercutting Western models by 20-30%. If that holds for GLM-5, it could be disruptive. But only if the hidden multipliers (retries, context waste, tool spam) don't eat the difference.

Cost drivers checklist (context length, retries, tool calls, caching)

Let me walk through what actually moves the needle in my bills:

Context length is the primary driver. Every token in your prompt costs money, and with 128K+ support, it's easy to accidentally send massive contexts. I've seen bills inflate 2-3x just from untrimmed conversation histories.

What I do now: compress aggressively. Even basic summarization can cut tokens 30-50%. For GLM-5, I'm planning to test whether its rumored context improvements make this less necessary — but I'm not assuming.
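Before reaching for summarization, the simplest form of compression is trimming old turns. Here's a minimal sketch of that idea. The 4-characters-per-token estimate and the `role`/`content` message shape are assumptions for illustration, not anything GLM-specific; swap in a real tokenizer if you have one.

```python
def estimate_tokens(text: str) -> int:
    """Very rough heuristic: ~4 characters per token (an assumption, not a tokenizer)."""
    return max(1, len(text) // 4)


def trim_history(messages: list[dict], budget_tokens: int) -> list[dict]:
    """Keep system messages plus the newest turns that fit inside the budget."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]

    used = sum(estimate_tokens(m["content"]) for m in system)
    kept = []
    for msg in reversed(rest):  # walk from newest to oldest
        cost = estimate_tokens(msg["content"])
        if used + cost > budget_tokens:
            break  # everything older than this is dropped
        kept.append(msg)
        used += cost
    return system + kept[::-1]  # restore chronological order


history = [
    {"role": "system", "content": "You are helpful."},
    {"role": "user", "content": "a" * 400},
    {"role": "assistant", "content": "b" * 400},
    {"role": "user", "content": "c" * 40},
]
trimmed = trim_history(history, budget_tokens=120)
print([m["role"] for m in trimmed])
```

Dropping whole turns is blunt. It works for chatty histories, but anything the model still needs from the old turns has to be summarized and carried forward separately.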

Retries create a "token snowball." Each retry is a full API call, and if you're appending the failed response to context, each retry costs more than the original call, so a single retry more than doubles the bill for that task. Cheaper models like GLM-4.7-FlashX have higher failure rates (I've logged up to 30% on ambiguous tasks). So you save per call, but lose on volume.

My rule: use validation tools before expensive retries. Run a cheap sanity check first.
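To put a number on the retry tax, here's a rough sketch of expected spend per task when each attempt can fail and the failed answer gets appended to the next prompt. It assumes attempts fail independently at a fixed rate, which real workloads won't match exactly, and the function and parameter names are mine. The example uses the FlashX prices and the 2K-input / 500-output request size from this post.

```python
def expected_spend_per_task(
    input_tokens: int,
    output_tokens: int,
    input_price_per_m: float,
    output_price_per_m: float,
    failure_rate: float,
    max_attempts: int = 3,
    append_failed_output: bool = True,
) -> float:
    """Expected USD spent on one task, counting retries (simplified model)."""
    expected = 0.0
    p_reach = 1.0  # probability that this attempt happens at all
    prompt = input_tokens
    for _ in range(max_attempts):
        call_cost = (
            prompt * input_price_per_m + output_tokens * output_price_per_m
        ) / 1_000_000
        expected += p_reach * call_cost
        p_reach *= failure_rate  # the next attempt only runs if this one failed
        if append_failed_output:
            prompt += output_tokens  # the failed answer rides along in the retry
    return expected


single = expected_spend_per_task(2000, 500, 0.07, 0.4, failure_rate=0.0)
with_retries = expected_spend_per_task(2000, 500, 0.07, 0.4, failure_rate=0.3)
print(f"retry tax: {with_retries / single - 1:.0%}")
```

This counts spend per task attempted, not per task that succeeds. If some tasks still fail after the last attempt and get escalated to a bigger model, that cost comes on top.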

Tool calls stack fast. If your agent is making 5 API calls per task (weather, search, calculator, whatever), and each adds 500ms + fees, you're multiplying both latency and cost. GLM-5's agentic focus might optimize this, but I'm watching for whether it increases tool usage by default.

The fix: parallel execution where possible. I've cut tool latency by 50% just by not chaining everything serially.
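Here's a small sketch of serial versus parallel tool calls using Python's `asyncio`. The `call_tool` function is a stand-in that just sleeps to simulate latency; the tool names and delays are made up. It only applies when the calls don't depend on each other's results.

```python
import asyncio
import time


async def call_tool(name: str, delay_s: float) -> str:
    """Stand-in for a real tool call; sleeps to simulate network latency."""
    await asyncio.sleep(delay_s)
    return f"{name}: ok"


async def run_serial(tools):
    # Each call waits for the previous one to finish.
    return [await call_tool(name, delay) for name, delay in tools]


async def run_parallel(tools):
    # All calls start together; total time is roughly the slowest call.
    return await asyncio.gather(*(call_tool(name, delay) for name, delay in tools))


def timed(coro_fn, tools):
    start = time.perf_counter()
    results = asyncio.run(coro_fn(tools))
    return results, time.perf_counter() - start


tools = [("weather", 0.05), ("search", 0.05), ("calculator", 0.05)]
serial_results, serial_s = timed(run_serial, tools)
parallel_results, parallel_s = timed(run_parallel, tools)
print(f"serial {serial_s:.2f}s vs parallel {parallel_s:.2f}s")
```

Parallelism cuts latency, not per-call fees. If the tools charge per call, you still pay for every one of them.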

Caching is the biggest cost lever. GLM-4.5 offers prompt caching — basically, if your system prompt or RAG docs repeat across calls, they're cached and charged at 10-50% of normal rates. I've seen input costs drop 70% just from structuring prompts to reuse prefixes.
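A back-of-the-envelope sketch of why prefix reuse matters. It assumes cached tokens are billed at a flat fraction of the normal input rate and ignores any cache-write or storage fees, so treat the discount and hit ratio as placeholders to replace with your provider's actual terms.

```python
def input_cost_with_cache(
    prefix_tokens: int,       # stable part: system prompt, RAG docs, few-shot examples
    dynamic_tokens: int,      # part that changes on every request
    requests: int,
    input_price_per_m: float,
    cached_rate: float = 0.1,  # cached tokens billed at 10% of normal (assumed)
    hit_ratio: float = 1.0,    # share of requests that hit the cache (assumed)
) -> float:
    """Monthly input cost in USD under a simple prefix-caching model."""
    full = (prefix_tokens + dynamic_tokens) * input_price_per_m / 1_000_000
    cached = (prefix_tokens * cached_rate + dynamic_tokens) * input_price_per_m / 1_000_000
    hits = requests * hit_ratio
    return hits * cached + (requests - hits) * full


# 2K-token prompts where 1.6K tokens are a reusable prefix, at $0.6/M input.
no_cache = input_cost_with_cache(1600, 400, 10_000, 0.6, hit_ratio=0.0)
all_hits = input_cost_with_cache(1600, 400, 10_000, 0.6, hit_ratio=1.0)
print(f"saving: {1 - all_hits / no_cache:.0%}")
```

The saving depends almost entirely on how much of each prompt is a stable prefix, which is why putting the repeated material first and the per-request material last is worth the restructuring.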

Semantic caching (caching similar queries, not just identical ones) can reduce calls by another 70%. But it requires infrastructure. Worth it if you're doing high-volume research or support workflows.

Build a monthly token budget (simple method + guardrails)

Here's how I forecast now, and it's embarrassingly simple:

Monthly cost = Requests per month × [(Input tokens per request × Input price) + (Output tokens per request × Output price)] / 1M, with prices quoted per million tokens

Example with GLM-4.7 rates:

- 10K requests/month

- 2K input tokens, 500 output tokens per request

- GLM-4.7: $0.6/M input, $2.2/M output

Math:

- Input: (10K × 2K tokens) / 1M × $0.6 = ~$12

- Output: (10K × 500 tokens) / 1M × $2.2 = ~$11

- Total: ~$23/month
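The arithmetic above fits in a few lines of Python if you'd rather keep it in a script than a spreadsheet. The function name and argument order are just my choice; prices are per million tokens, matching how they're quoted here.

```python
def monthly_cost(
    requests: int,
    input_tokens: int,
    output_tokens: int,
    input_price_per_m: float,
    output_price_per_m: float,
) -> tuple[float, float, float]:
    """Return (input cost, output cost, total) in USD for one month."""
    input_cost = requests * input_tokens / 1_000_000 * input_price_per_m
    output_cost = requests * output_tokens / 1_000_000 * output_price_per_m
    return input_cost, output_cost, input_cost + output_cost


# 10K requests, 2K input + 500 output tokens each, at $0.6/M and $2.2/M.
inp, out, total = monthly_cost(10_000, 2_000, 500, 0.6, 2.2)
print(f"input ${inp:.2f}, output ${out:.2f}, total ${total:.2f}")
```

Input and output are kept separate on purpose: output is priced several times higher per token, so averaging the two hides where the money goes.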

Except... that's never what I actually pay.

What I add now:

- 20-50% growth buffer (usage creeps up)

- 10-20% retry tax (failures happen)

- Context waste multiplier (assume 30% inefficiency until proven otherwise)

So that $23 becomes roughly $37-46 in practice (1.6x to 2.0x if you simply add the buffers together), and more if they compound.
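Whether the buffers add or compound changes the answer, so it's worth being explicit about which you mean. A minimal sketch, with both options; the function name and the `compound` flag are mine, and applying context waste to the whole bill rather than just input is a simplification.

```python
def buffered_budget(
    base: float,
    growth: float,
    retry_tax: float,
    context_waste: float,
    compound: bool = False,
) -> float:
    """Apply the three buffers to a base monthly estimate (USD)."""
    if compound:
        # Each buffer scales the already-inflated figure.
        factor = (1 + growth) * (1 + retry_tax) * (1 + context_waste)
    else:
        # Buffers are simply summed on top of the base.
        factor = 1 + growth + retry_tax + context_waste
    return base * factor


low = buffered_budget(23, growth=0.20, retry_tax=0.10, context_waste=0.30)
high = buffered_budget(23, growth=0.50, retry_tax=0.20, context_waste=0.30)
high_compound = buffered_budget(23, 0.50, 0.20, 0.30, compound=True)
print(f"additive: ${low:.2f} to ${high:.2f}; worst case compounded: ${high_compound:.2f}")
```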

For GLM-5, I'm budgeting 20-30% under GLM-4.7 rates based on expected efficiency gains. But I'm not moving production traffic until I confirm that in real tasks.

Guardrails I actually use:

- Hard cap at 100% of budget (blocks requests)

- Soft alert at 70% (gives me time to investigate)

- Per-user/team quotas (so one runaway agent doesn't kill everyone)

- Weekly resets, not monthly (catches spikes faster)
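Those four guardrails are simple enough to sketch in one small class. This is an in-memory illustration only, with names I made up; a real version would need persistent storage and a scheduler for the weekly reset.

```python
from dataclasses import dataclass, field


@dataclass
class BudgetGuard:
    weekly_budget_usd: float            # per-user/team quota
    soft_alert_ratio: float = 0.7       # soft alert at 70% of the quota
    spent: dict = field(default_factory=dict)  # user/team -> USD spent this week

    def check(self, user: str, estimated_cost: float) -> str:
        """Return 'ok', 'alert', or 'block' for a request about to be sent."""
        projected = self.spent.get(user, 0.0) + estimated_cost
        if projected > self.weekly_budget_usd:
            return "block"  # hard cap: the request is not recorded or sent
        self.spent[user] = projected
        if projected >= self.soft_alert_ratio * self.weekly_budget_usd:
            return "alert"  # still allowed, but someone should look
        return "ok"

    def reset_week(self) -> None:
        """Call once a week (e.g. from a scheduled job) to clear all counters."""
        self.spent.clear()


guard = BudgetGuard(weekly_budget_usd=10.0)
print(guard.check("research-team", 5.0))
print(guard.check("research-team", 2.5))
print(guard.check("research-team", 3.0))
```

Because quotas are tracked per user or team, one runaway agent hits its own cap without blocking anyone else.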

The goal isn't precision. It's early warning before something expensive happens. For more on this, check out how to forecast AI services costs in cloud.

Cost-control tactics that preserve quality (summarize, cache, route, cap output)

Okay, real talk: most "cost optimization" advice tells you to use worse models. That's not useful.

Here's what actually works without killing quality:

Summarize before you send. I run a cheap model (or even a local one) to compress documents before feeding them to the expensive model. Cuts tokens 30-50%, and honestly, the expensive model often works better with the compressed version — less noise.

Cache everything you can. System prompts, RAG documents, few-shot examples: anything that repeats should be cached. The GLM series offers free cached storage in some tiers. I've seen 70% savings here.

Route strategically. Simple tasks (extraction, formatting, drafts) go to cheap models. Complex reasoning (final decisions, ambiguous queries) goes to the flagship. This saves 50-80% without quality loss, because most tasks don't need the expensive model.

Cap output tokens. Add max_tokens limits and prompt with "be concise." I've reduced verbosity by 20-40% just by asking. Most models over-explain by default.

Batch requests. If you're doing 100 independent tasks, batching can save 50%. But it adds latency, so it only works for non-urgent workflows.

What I don't do anymore: try to optimize prompt wording to save 10 tokens. Not worth the cognitive load. Focus on the big levers first.

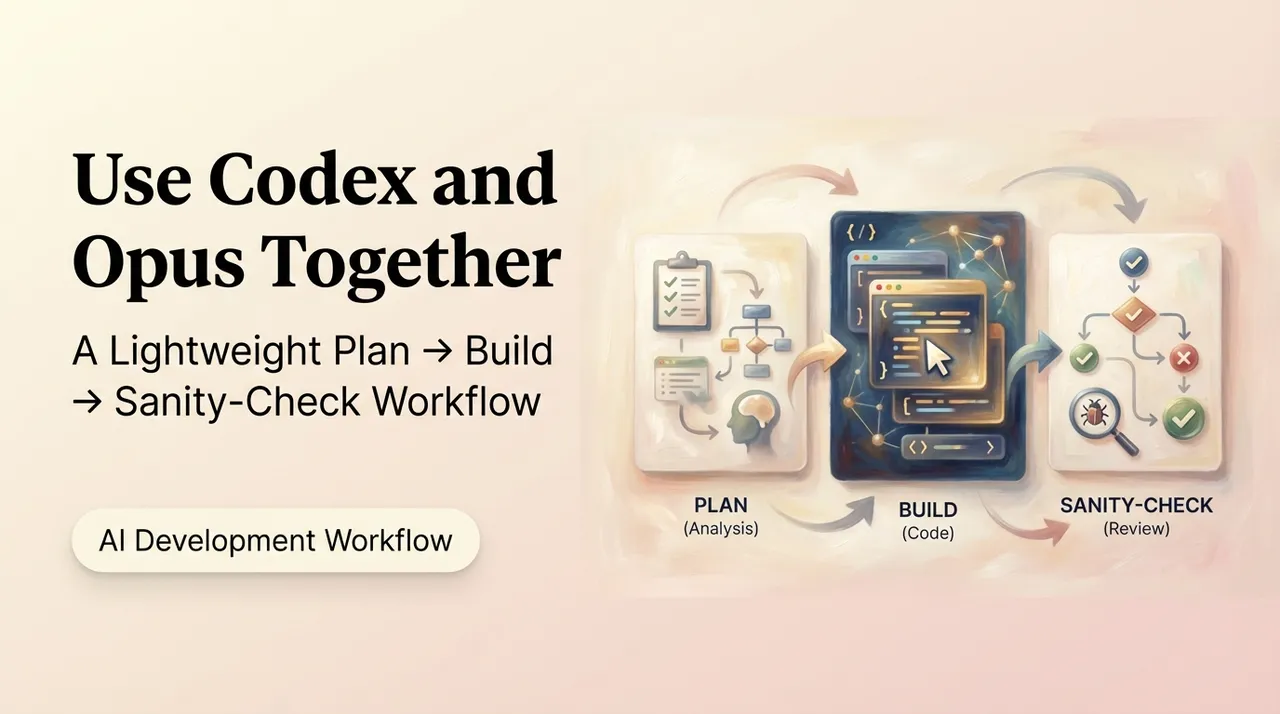

Routing rules for Macaron tasks (cheap model for drafts, strong model for final)

This is where I've landed for our workflows in Macaron:

Drafts, extractions, simple transforms → Cheap model (GLM-4.7-FlashX, $0.07/M)

- Confidence threshold: >90%

- If it fails, retry once, then escalate

- Saves ~70% vs always using flagship

Finals, complex reasoning, ambiguous queries → Strong model (GLM-5 flagship when it drops)

- Anything with low confidence scores

- Anything that needs more than 200 tokens of reasoning

- User-facing outputs where quality matters

Preference-aligned routing: I'm testing policies like "if draft confidence <80%, route to strong model immediately." Avoids the retry tax.

In practice, this cuts costs 45-80% depending on task mix. The key is logging which tasks actually need the strong model, then tuning the thresholds. Don't just guess.
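As a sketch, the routing rules above reduce to one small decision function. The task-type labels and return values are placeholders I picked for illustration, and it assumes you already have some confidence score for a draft (how you get one is a separate problem). The below-80% shortcut is the experimental policy, not a settled rule.

```python
from typing import Optional

# Task types that always go to the strong model (labels are hypothetical).
STRONG_TASKS = {"final", "complex_reasoning", "ambiguous", "user_facing"}


def next_step(
    task_type: str,
    confidence: Optional[float] = None,
    retries_used: int = 0,
) -> str:
    """Return 'cheap', 'strong', or 'accept' as the next action for a task."""
    if task_type in STRONG_TASKS:
        return "strong"
    if confidence is None:
        return "cheap"   # no draft yet: start on the cheap model
    if confidence > 0.90:
        return "accept"  # draft clears the confidence threshold
    if confidence < 0.80:
        return "strong"  # skip the retry and escalate right away
    # Middle band: retry once on the cheap model, then escalate.
    return "cheap" if retries_used < 1 else "strong"


print(next_step("extraction"))
print(next_step("extraction", confidence=0.85, retries_used=1))
```

Logging every call to this function, along with whether the final output was actually good, is what gives you the data to tune the two thresholds.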

For GLM-5, I'm planning to A/B test this: run 20% of traffic through the new model, compare quality+cost to GLM-4.7 routing, then adjust.
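One way to carve out a stable 20% slice is to hash a request or user ID into a bucket, so the same ID always lands in the same arm. This is a generic sketch, not anything Macaron-specific; the salt string and function name are hypothetical.

```python
import hashlib


def in_treatment(request_id: str, share: float = 0.20, salt: str = "glm5-ab") -> bool:
    """Deterministically assign an ID to the treatment arm with the given share."""
    digest = hashlib.sha256(f"{salt}:{request_id}".encode()).digest()
    # Map the first 8 bytes of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return bucket < share


ids = [f"req-{i}" for i in range(10_000)]
treated = sum(in_treatment(i) for i in ids)
print(f"{treated / len(ids):.1%} of requests routed to the new model")
```

Changing the salt reshuffles the assignment, which is handy if you want a fresh split for a second experiment.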

ROI check: time saved vs spend (a quick formula)

Here's the check I run quarterly:

ROI (%) = [(Time Saved × Hourly Rate) - AI Spend] / AI Spend × 100

Example:

- I save ~3 hours/week on research workflows (thanks to agents)

- My time is worth ~$75/hour (conservative)

- That's ~156 hours/year (3 × 52) = ~$11.7K value created

- AI spend: ~$150/month = $1.8K/year

- ROI: ($11.7K - $1.8K) / $1.8K × 100 = ~550%

Even if my time estimates are off by 50%, the math still works.
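The ROI formula is easy to turn into a function so you can rerun it with pessimistic inputs. This sketch annualizes weekly hours over 52 weeks; the optional `maintenance_hours_per_week` parameter is my addition for time spent fixing or re-running agents, which gets subtracted from the hours saved.

```python
def roi_percent(
    hours_saved_per_week: float,
    hourly_rate: float,
    monthly_spend: float,
    weeks_per_year: int = 52,
    maintenance_hours_per_week: float = 0.0,
) -> float:
    """ROI (%) = (value of net time saved - AI spend) / AI spend * 100, per year."""
    net_hours = (hours_saved_per_week - maintenance_hours_per_week) * weeks_per_year
    value = net_hours * hourly_rate
    spend = monthly_spend * 12
    return (value - spend) / spend * 100


baseline = roi_percent(3, 75, 150)
pessimistic = roi_percent(1.5, 75, 150)  # time estimate off by 50%
print(f"baseline {baseline:.0f}%, pessimistic {pessimistic:.0f}%")
```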

But here's the thing: this only matters if the tool actually saves time. If I'm debugging agents or re-running failed tasks, that's negative ROI. So I track:

- Tasks completed without intervention

- Time spent on maintenance/fixes

- Baseline (how long would this take manually?)

For GLM-5, the question isn't "is it cheaper?" It's "does it reduce total cost (dollars plus hours) vs the current setup?"

If it's 30% cheaper but requires 20% more babysitting, that's a loss.

FAQ (free tiers, regional pricing differences, best defaults)

Are there free tiers? GLM-4-Flash is free right now on the BigModel platform. GLM-5 might offer limited free access (I'd guess 10-50 requests/day for testing). But don't build production on free tiers, because they rate-limit hard.

ChatGPT and Gemini have free tiers with 10-15 requests per minute caps. Fine for experiments, not for workflows.

What about regional pricing? This is wild: GLM models in China are priced in RMB at ¥0.1/M (about $0.014/M USD). That's over 100x cheaper on list price than US models like GPT-4o ($2.5/M), though the comparison only holds if the models are actually equivalent for your tasks.

If you can route through China-based endpoints (and navigate data residency rules), there's real savings. But you're trading cost for complexity.

EU pricing sometimes has GDPR premiums — haven't seen that with GLM yet, but watch for it.

Best defaults to start with? Start with mini/flash tiers for most tasks. Only escalate when you see quality issues. I default to GLM-4.7-FlashX for 70% of tasks, then route up as needed.

For open-weights models, GLM offers MIT-licensed options you can self-host. Eliminates API costs entirely, but adds infrastructure overhead.

Where I'm at now: I'm running GLM-4.7 in production, with cost controls in place (caching, routing, caps). When GLM-5 drops, I'll A/B test in Macaron workflows — 20% traffic, compare quality+cost for 2 weeks, then decide.

Macaron can't change GLM-5's pricing, but we can help you stop paying for unneeded retries. We invite you to A/B test our routing logic against your current setup. Validate your cost savings with Macaron.

The goal isn't "cheapest possible." It's predictable costs for reliable outputs. If GLM-5 delivers that at 20-30% under current rates, it's a switch. If not, I'll wait for the next iteration.

If you're in a similar spot — running agents, research tools, anything with repeated calls — I'd set up cost tracking now. Because the only way to know if a new model saves money is to have a baseline.

Let me know what you find when GLM-5 lands. I'll be watching the same numbers.