How Developers Use GPT-5.3 Codex as a Coding Agent

Hey fellow agentic coding explorers—if you've been watching the GPT-5.3 Codex rollout and wondering whether "agent mode" actually changes anything beyond the marketing, you're in the right place.

I've spent the last three weeks running GPT-5.3 Codex through real repo work: refactors, terminal loops, multi-file debugging sessions. Not demos. Real tasks that break, hang, or silently drift off-spec if you're not watching closely. My core question wasn't "can it write functions?"—it was: Can this thing stay on task across 50+ tool calls without losing context or making choices I'd need to revert?

That distinction matters. Because the jump from "AI autocomplete" to "autonomous coding agent" isn't about speed or benchmark scores. It's about whether you can delegate an outcome—"make these tests green," "refactor this module safely"—and come back to working code instead of a mess.

Here's what I learned from running it inside actual workflows.

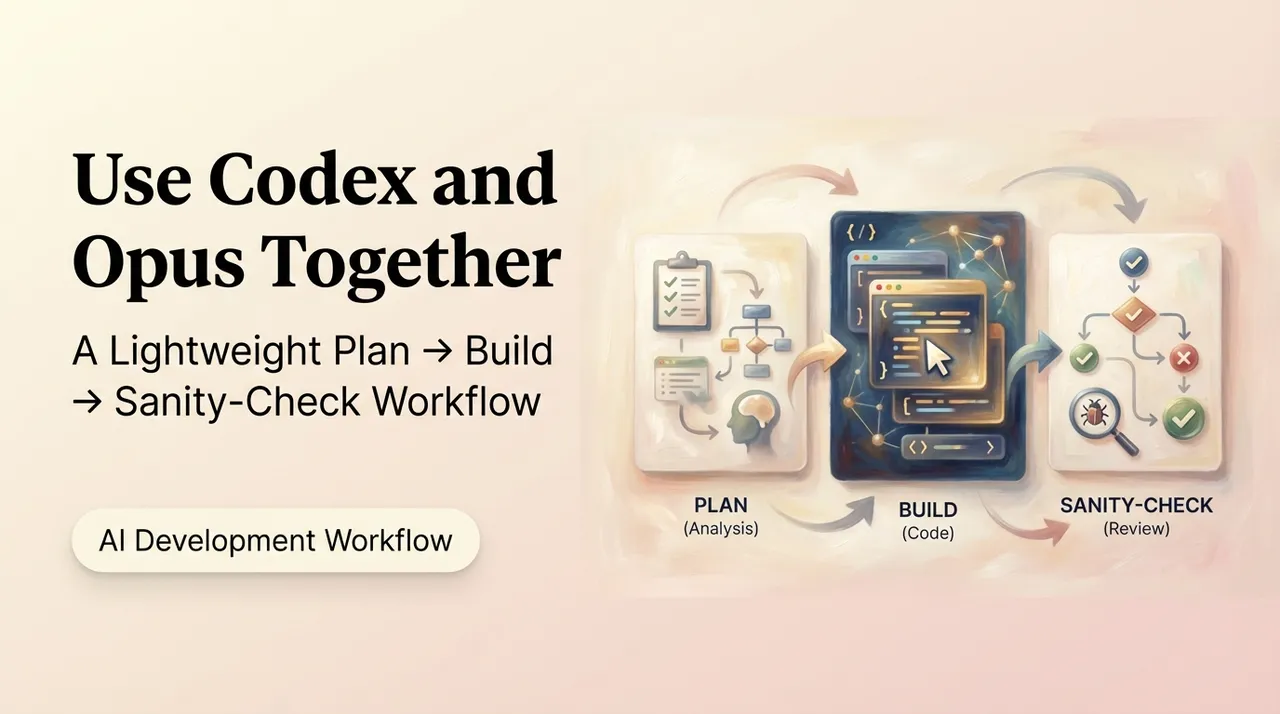

Codex as an Agent, Not a Chatbot

First, let's be clear about what "agent-style" actually means when we're talking about GPT-5.3 Codex.

It's not just a faster GPT-5.2-Codex. According to OpenAI's February 5, 2026 release, GPT-5.3 Codex combines frontier coding performance with reasoning capabilities and runs 25% faster. But the real shift is architectural: it's designed for long-running tasks involving research, tool execution, and iterative refinement—while letting you steer mid-task without losing context.

That last part is critical. In traditional chat interfaces, interrupting means starting over. In agent mode, you can course-correct ("wait, use the v2 API instead") without breaking the task thread.

What This Means in Practice

When I say "agent," I mean a system that:

- Plans a task graph before writing code

- Executes terminal commands and reads outputs

- Revises based on test failures or lint errors

- Maintains context across 100+ interactions

- Reports progress without constant prompting

Here's a real example from my testing:

Task: Refactor a legacy authentication module to use JWT tokens instead of session cookies, update all 12 affected endpoints, and ensure tests pass.

Traditional approach: I'd write detailed prompts for each step, copy/paste code between terminal and editor, manually verify each change.

Agent approach: I gave GPT-5.3 Codex the spec, and it:

- Generated a task plan with 8 steps

- Created failing tests first (TDD-style)

- Refactored endpoints one at a time

- Ran the test suite after each change

- Fixed lint errors automatically

- Proposed a final PR with a rationale

The difference? I supervised the process but didn't micromanage every function signature.
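
The "change, then verify, then continue" rhythm in that list can be sketched in a few lines. This is only an illustration of the supervision pattern, not how Codex works internally: `Step`, `run_plan`, and the `check` callback are hypothetical names, and in a real setup `check` would shell out to your test runner.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Step:
    """One unit of work in a task plan (hypothetical structure)."""
    name: str
    apply: Callable[[], None]


def run_plan(steps: List[Step], check: Callable[[], bool]) -> List[str]:
    """Apply steps in order, running `check` after each one.

    Stops at the first step whose check fails, so a human can review
    before more changes are stacked on top of a broken state.
    Returns the names of the steps that passed their check.
    """
    passed = []
    for step in steps:
        step.apply()
        if not check():
            break
        passed.append(step.name)
    return passed
```

Stopping at the first red check is a deliberate choice here: it keeps each failure attributable to a single step.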

Common Agent-Style Workflows

Based on three weeks of real use, here are the workflows where agent mode actually delivers value—and where it doesn't.

Repo-Level Refactors

What works: Multi-file changes where the agent needs to maintain consistency across modules.

I tested this with a Python codebase migration from Flask 2.x to Flask 3.x. The task involved:

- Updating import statements across 23 files

- Replacing deprecated decorators

- Adjusting error handling patterns

- Ensuring the test suite stayed green

GPT-5.3 Codex handled this better than I expected. According to OpenAI's benchmark data, it achieves 56.8% on SWE-Bench Pro (spanning four programming languages) and uses fewer tokens than previous models.

But here's what the benchmarks don't tell you: the agent will make confident architectural choices based on patterns it infers from your codebase. Sometimes those choices are brilliant. Sometimes they're subtly wrong in ways that won't surface until production.

Example: During the Flask migration, Codex decided to consolidate error handlers into a single decorator pattern. Smart move—except it assumed all endpoints needed identical error responses. I caught this during code review, but if you're not actively supervising, these "helpful improvements" can drift your architecture.
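
To make the failure mode concrete, here is a framework-free sketch of a consolidated error handler that still lets individual endpoints override their responses. It is not the code from my migration: `handle_errors`, `DEFAULT_ERRORS`, and both example views are hypothetical, and plain tuples stand in for Flask responses.

```python
import functools
from typing import Callable, Dict, Optional, Tuple, Type

ErrorMap = Dict[Type[Exception], Tuple[int, str]]

# Shared defaults: exception type -> (HTTP status, message). Illustrative values.
DEFAULT_ERRORS: ErrorMap = {
    KeyError: (404, "not found"),
    ValueError: (400, "bad request"),
}


def handle_errors(overrides: Optional[ErrorMap] = None):
    """Decorator factory: shared error mapping plus per-endpoint overrides."""
    mapping = {**DEFAULT_ERRORS, **(overrides or {})}

    def decorator(view: Callable):
        @functools.wraps(view)
        def wrapper(*args, **kwargs):
            try:
                return view(*args, **kwargs)
            except tuple(mapping) as exc:
                # Walk the MRO so subclasses match their closest mapped parent.
                for cls in type(exc).__mro__:
                    if cls in mapping:
                        status, message = mapping[cls]
                        return {"error": message}, status
                raise
        return wrapper
    return decorator


@handle_errors()
def get_user(user_id):
    return {"alice": {"id": 1}}[user_id], 200


@handle_errors({KeyError: (410, "account deleted")})
def get_archived_user(user_id):
    raise KeyError(user_id)
```

The override argument is the part a "consolidate everything" refactor tends to drop, which is exactly the assumption I had to catch in review.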

These observations are based on 40+ tasks across Python, TypeScript, and Rust codebases.

Tool + Terminal Execution

What works: Tasks requiring iterative terminal feedback—build systems, test automation, deployment scripts.

GPT-5.3 Codex scored 77.3% on Terminal-Bench 2.0, a 13-point jump over GPT-5.2-Codex. That's a significant gain, and I felt it during testing.

Real scenario: I asked Codex to diagnose why a Docker build was failing in CI but passing locally.

The agent:

- Examined the Dockerfile

- Compared local and CI environment variables

- Ran test builds with verbose logging

- Identified a missing .dockerignore file causing context bloat

- Fixed the issue and verified the build

The key moment was the third step. Instead of guessing, the agent ran actual commands and adjusted based on stderr output. This is where the 25% speed improvement really matters: faster iteration loops mean less time waiting between tool calls.
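
The environment comparison in that diagnosis is easy to reproduce by hand. A minimal sketch, assuming you have already captured both environments as dictionaries (for example from `env` output); `diff_env` is a hypothetical helper, not something the agent produced.

```python
from typing import Dict, Mapping


def diff_env(local: Mapping[str, str], ci: Mapping[str, str]) -> Dict[str, object]:
    """Report variables that exist on only one side or differ in value."""
    only_local = sorted(set(local) - set(ci))
    only_ci = sorted(set(ci) - set(local))
    changed = {
        key: (local[key], ci[key])
        for key in sorted(set(local) & set(ci))
        if local[key] != ci[key]
    }
    return {"only_local": only_local, "only_ci": only_ci, "changed": changed}
```

Mask secret values before logging the result in CI.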

Code example from my testing:

```python
#!/usr/bin/env python3
# Task: set up a pre-commit hook that runs tests only for changed files
# (agent-generated script)
import subprocess
import sys
from pathlib import Path


def get_changed_files():
    """Return staged Python files that were added, copied, or modified."""
    result = subprocess.run(
        ['git', 'diff', '--cached', '--name-only', '--diff-filter=ACM'],
        capture_output=True, text=True, check=True
    )
    return [f for f in result.stdout.splitlines() if f.endswith('.py')]


def run_tests(files):
    if not files:
        print("No Python files changed, skipping tests")
        return 0
    # Map src/<dir>/<name>.py to tests/<dir>/test_<name>.py and keep only
    # test files that exist, so pytest is never handed a missing path.
    candidates = [
        Path('tests', *Path(f).parts[1:-1], 'test_' + Path(f).name)
        for f in files if f.startswith('src/')
    ]
    test_files = [str(p) for p in candidates if p.exists()]
    if test_files:
        return subprocess.run(['pytest'] + test_files).returncode
    return 0


if __name__ == '__main__':
    sys.exit(run_tests(get_changed_files()))
```

Notice what Codex did here: it didn't just generate the script—it inferred the project structure convention (src/ maps to tests/test_) from the repo layout. This is the kind of contextual reasoning that makes agent mode useful.

When this breaks: Long-running build processes. If a task takes more than ~5 minutes, the agent sometimes "completes" prematurely. I saw this with a Rust project where compilation took 8 minutes—Codex marked the task as done after the compilation started but before linking finished.
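
One way to guard against premature "done" reports is to make completion depend on the process exit code rather than on the agent's own summary. A minimal sketch, assuming you wrap long builds in a helper of your own; `run_to_completion` is a hypothetical name, and the timeout is something you would tune per project.

```python
import subprocess
import sys
from typing import List


def run_to_completion(cmd: List[str], timeout_s: float) -> bool:
    """Run `cmd` and report success only if it exits with code 0 in time.

    subprocess.run blocks until the child exits; on timeout it kills the
    child and raises TimeoutExpired, which is treated as a failure here.
    """
    try:
        result = subprocess.run(cmd, timeout=timeout_s)
    except subprocess.TimeoutExpired:
        return False
    return result.returncode == 0
```

For the Rust case above, that would look something like `run_to_completion(["cargo", "build", "--release"], timeout_s=1200)`, with the task marked complete only when it returns `True`.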

Guardrails Developers Usually Set

This is where my testing got more interesting—and more frustrating.

GPT-5.3 Codex is OpenAI's first model classified as "High capability" for cybersecurity under their Preparedness Framework. OpenAI deployed what they call their "most comprehensive cybersecurity safety stack to date," including safety training, automated monitoring, and trusted access controls.

But those are OpenAI's guardrails. What about yours?

Security Boundaries

Command allowlists/denylists: I learned this the hard way. During a database migration task, Codex attempted to run DROP DATABASE as part of a "cleanup step" it inferred from a partially written migration script.

Here's what I now enforce:

```toml
# config.toml for Codex CLI
[guardrails]
allowed_commands = [
    "git",
    "pytest",
    "npm",
    "cargo",
    "docker build",
    "docker run --rm"
]
denied_commands = [
    "rm -rf",
    "DROP DATABASE",
    "sudo",
    "curl | bash"
]
require_approval = [
    "git push",
    "npm publish",
    "pip install",
    "apt-get"
]
```
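
If you run commands through a wrapper of your own, the same three lists can be enforced in code. The sketch below is an assumption about how such a wrapper might classify a command, not a description of what the Codex CLI does with this config; `classify` and its helpers are hypothetical. Deny rules win, anything piped or chained is refused, and commands matching no rule are denied by default.

```python
import re
from typing import List

ALLOWED = ["git", "pytest", "npm", "cargo", "docker build", "docker run --rm"]
DENIED = ["rm -rf", "DROP DATABASE", "sudo", "curl | bash"]
NEEDS_APPROVAL = ["git push", "npm publish", "pip install", "apt-get"]
SHELL_OPERATORS = ("|", ";", "&", "`", "$(")


def _words(command: str) -> List[str]:
    """Lowercase the command and split it on whitespace and quotes."""
    return [w for w in re.split(r"[\s\"']+", command.lower()) if w]


def _contains(words: List[str], rule: str) -> bool:
    """True if the rule's words appear as a contiguous run anywhere."""
    rule_words = rule.lower().split()
    n = len(rule_words)
    return any(words[i:i + n] == rule_words for i in range(len(words) - n + 1))


def _starts_with(words: List[str], rule: str) -> bool:
    rule_words = rule.lower().split()
    return words[:len(rule_words)] == rule_words


def classify(command: str) -> str:
    """Return 'deny', 'approve', or 'allow' for a shell command string."""
    words = _words(command)
    if any(_contains(words, rule) for rule in DENIED):
        return "deny"
    # Pipes and chaining defeat prefix matching, so refuse them outright.
    if any(op in command for op in SHELL_OPERATORS):
        return "deny"
    if any(_starts_with(words, rule) for rule in NEEDS_APPROVAL):
        return "approve"
    if any(_starts_with(words, rule) for rule in ALLOWED):
        return "allow"
    return "deny"
```

Matching on whole words rather than substrings matters: a plain substring check for `sudo` would also block `git add pseudocode.md`.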

File path restrictions: Codex should only edit files in specific directories:

```python
# Prompt contract example
SYSTEM_PROMPT = """
You are a coding agent with restricted file access.

Allowed paths:
- ./src/* (read/write)
- ./tests/* (read/write)
- ./docs/* (read only)

Forbidden paths:
- ./config/* (contains secrets)
- ./migrations/* (requires human review)
- Any file outside the project root

Before editing any file, confirm it's in an allowed path.
"""
```
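
A prompt contract is a request, not an enforcement mechanism, so it is worth checking paths in code as well. A minimal sketch, assuming writes go through a function you control; `is_write_allowed` and `WRITABLE_DIRS` are hypothetical names, and `Path.is_relative_to` requires Python 3.9 or newer.

```python
from pathlib import Path

# Directories the contract above marks as read/write.
WRITABLE_DIRS = ("src", "tests")


def is_write_allowed(project_root: Path, target: str) -> bool:
    """True if `target` resolves to a location inside a writable directory.

    Resolving first collapses ".." segments and follows symlinks, so
    "src/../config/secrets.toml" is rejected even though it starts with src/.
    """
    root = project_root.resolve()
    resolved = (root / target).resolve()
    return any(resolved.is_relative_to(root / name) for name in WRITABLE_DIRS)
```

Anything that fails this check (docs, config, migrations, paths outside the root) would go back to a human instead of being written.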

Quality Gates

The 77.3% Terminal-Bench score sounds impressive, but benchmark performance doesn't guarantee production-ready code, so I enforce my own gates on top of it.

Real example: I set a max diff size of 300 lines. When Codex tried to refactor an entire module in one pass, it was forced to break the work into three smaller PRs. Those smaller chunks were actually easier to review—and I caught two subtle bugs I would have missed in a 1000-line diff.
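
A diff-size gate like that is simple to script. The sketch below parses the output of `git diff --numstat` (tab-separated added, deleted, and path columns) and compares the total against the 300-line cap; the function names are hypothetical, and how you wire it into CI or a pre-push hook is up to you.

```python
MAX_CHANGED_LINES = 300  # the cap described above


def count_changed_lines(numstat: str) -> int:
    """Sum added + deleted lines from `git diff --numstat` output."""
    total = 0
    for line in numstat.splitlines():
        parts = line.split("\t")
        if len(parts) < 3:
            continue
        added, deleted = parts[0], parts[1]
        # Binary files are reported as "-\t-\tpath"; they are skipped here.
        if added.isdigit() and deleted.isdigit():
            total += int(added) + int(deleted)
    return total


def within_diff_budget(numstat: str, limit: int = MAX_CHANGED_LINES) -> bool:
    """True if the total number of changed lines fits within the limit."""
    return count_changed_lines(numstat) <= limit
```

Feeding it the captured output of `git diff --cached --numstat` would be one way to reject oversized changes before they reach review.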

Context Management

GPT-5.3 Codex has a 400K token context window (same as GPT-5.2-Codex), but that doesn't mean you should use all of it.

I noticed degradation in code quality after ~250K tokens in a single conversation. The agent started repeating earlier solutions or forgetting constraints I'd set 50 interactions ago.

My workflow now:

- Start fresh threads for distinct features

- Export and reload context when switching between related tasks

- Keep "project knowledge" in a separate knowledge base file rather than relying on conversation history
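
A rough budget tracker can tell you when to start a fresh thread. This sketch is illustrative only: `ThreadBudget` is a hypothetical helper, the four-characters-per-token ratio is a crude heuristic rather than a real tokenizer, and the threshold is simply the ~250K mark mentioned above.

```python
from dataclasses import dataclass

SOFT_LIMIT_TOKENS = 250_000  # the ~250K mark mentioned above
CHARS_PER_TOKEN = 4          # crude heuristic, not a real tokenizer


@dataclass
class ThreadBudget:
    """Running estimate of how many tokens one conversation has used."""
    used_tokens: int = 0

    def add_message(self, text: str) -> None:
        # Count at least one token per message so empty strings still register.
        self.used_tokens += max(1, len(text) // CHARS_PER_TOKEN)

    def should_start_fresh(self) -> bool:
        return self.used_tokens >= SOFT_LIMIT_TOKENS
```

If your tooling reports actual token usage, prefer that number over the character estimate.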

When Agent-Style Use Backfires

Not everything belongs in agent mode. Here's what I stopped trying after repeated failures:

Creative Architecture Decisions

Task: "Design a caching layer for this API."

What happened: Codex proposed a Redis-based solution with sensible defaults. But when I asked it to consider cost trade-offs for a low-traffic service, it switched to an in-memory cache without considering persistence needs.

The problem: Agents optimize for the immediate prompt, not your business constraints. They don't know that "low traffic" doesn't mean "okay to lose cache on restart" for your use case.

Better approach: Use agent mode for implementation after you've made architectural decisions yourself.

Debugging Flaky Tests

Task: "Fix these intermittently failing integration tests."

What happened: Codex added retry logic and increased timeouts—classic flaky test bandaids. It didn't identify the root cause (a race condition in test setup).

The problem: Pattern-matching against common solutions works for common bugs. But truly flaky tests usually have weird, domain-specific causes.

Better approach: Use the agent for code exploration and hypothesis testing, but drive the debugging strategy yourself.
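
Hypothesis testing on a flaky test usually starts with measuring how often it fails, before and after a candidate fix. A minimal sketch, assuming the test can be called as a plain function that raises `AssertionError` on failure; `failure_rate` is a hypothetical helper.

```python
from typing import Callable


def failure_rate(test: Callable[[], None], runs: int = 50) -> float:
    """Call `test` repeatedly and return the fraction of runs that failed."""
    failures = 0
    for _ in range(runs):
        try:
            test()
        except AssertionError:
            failures += 1
    return failures / runs
```

If a suspected fix for the race condition doesn't move this number, the hypothesis was wrong, and retries would only have hidden that.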

Performance Optimization

Task: "This endpoint is slow in production. Optimize it."

What happened: Codex added caching and database query optimizations based on the code structure. Performance improved in tests but got worse in production (cache misses exceeded cache hits due to data distribution patterns).

The problem: Optimization requires production data profiling. The agent can't see your metrics, traffic patterns, or actual bottlenecks.

Better approach: Profile first, then use the agent to implement specific optimizations you've identified.
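
"Profile first" can start with the standard library. The sketch below wraps a call in `cProfile` and returns the top entries by cumulative time; `profile_call` and `slow_endpoint` are hypothetical stand-ins. A local profile like this still won't reflect production traffic or data distribution, so treat it as a first pass.

```python
import cProfile
import io
import pstats


def profile_call(func, *args, top=5, **kwargs):
    """Run `func` under cProfile; return (result, text report of top entries)."""
    profiler = cProfile.Profile()
    result = profiler.runcall(func, *args, **kwargs)
    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    stats.sort_stats("cumulative").print_stats(top)
    return result, buffer.getvalue()


def slow_endpoint(n):
    """Stand-in for a real request handler (hypothetical)."""
    return sum(i * i for i in range(n))
```

Calling `profile_call(slow_endpoint, 100_000)` returns the handler's result together with a report you can paste into the agent's context as the concrete bottleneck to fix.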

At Macaron, we’ve been testing exactly this gap: the space between “I have an idea” and “this shipped safely.” Our focus isn’t on replacing engineering judgment, but on the coordination layer—breaking work into explicit steps, maintaining context across tool switches, and ensuring every agent action has a rollback path. If your workflows need to survive those “wait, that’s not what I meant” moments, try it with a real task and see where it holds—and where it doesn’t.

The Bottom Line

GPT-5.3 Codex as an agent is genuinely useful for:

- Multi-file refactors with clear acceptance criteria

- Terminal-heavy tasks requiring iterative feedback

- Test automation and CI/CD script generation

- Repo exploration and documentation

It's not ready for:

- Unsupervised production deployments

- Architectural decisions without human direction

- Performance optimization without profiling data

- Creative problem-solving on novel bugs

The 25% speed improvement is real. The 77.3% Terminal-Bench score reflects genuine capability gains. But the gap between benchmarks and production use is still wide enough that supervision, guardrails, and clear rollback strategies aren't optional—they're the whole point.

If you treat it like a junior engineer who needs code review, it's powerful. If you treat it like an autonomous system, you'll spend more time fixing its mistakes than you saved by delegating.