MiniMax Music 2.5: Features, Pricing, Limits & First Song Walkthrough (2026)

Hey fellow AI music tinkerers. I've been tracking music generation models since Suno first launched, and let me tell you, most updates feel like marketing spin. So when MiniMax released version 2.5 in late January 2026, I didn't jump on it right away. It took me two weeks of hands-on song generations to separate what changed from what's just hype.

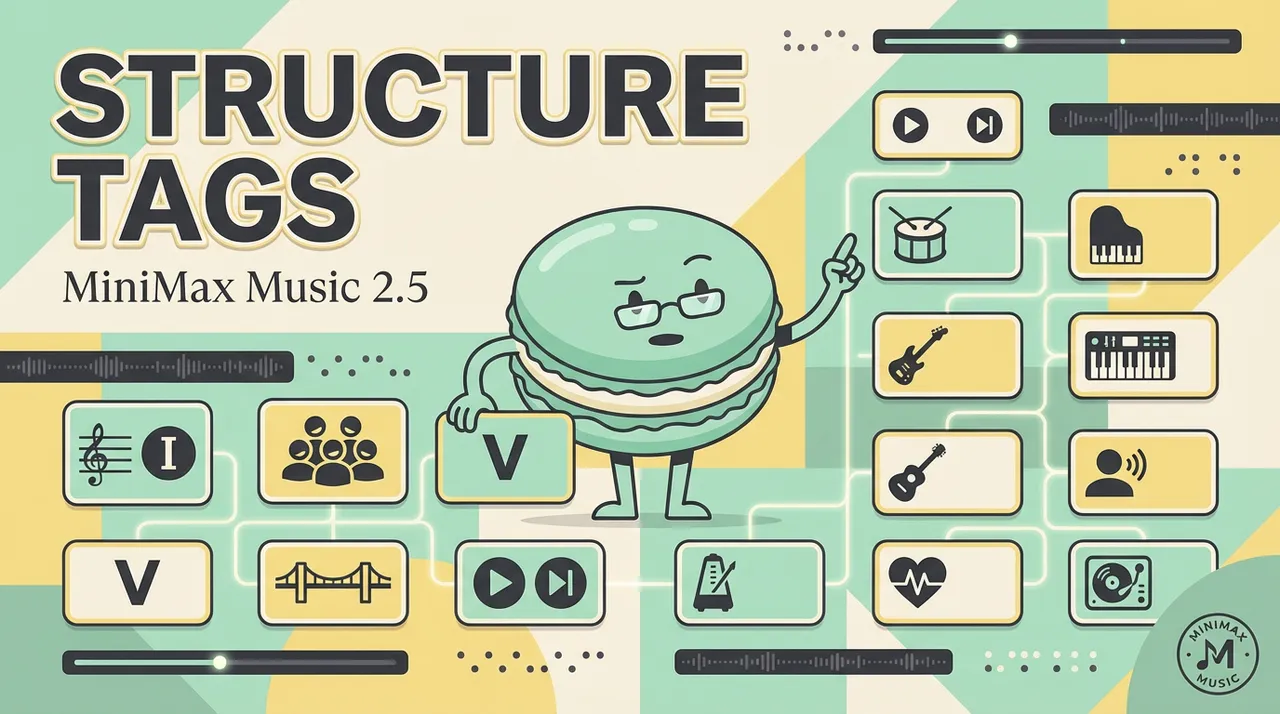

Here's what I found: the structural control thing is real. The "14 tags" claim isn't marketing fluff—I can actually direct song flow now instead of rerolling 12 times hoping for a decent bridge. But the pricing model? That part needs some unpacking.

If you're testing AI music tools inside real content workflows—not just playing around—this walkthrough covers what you need to know before committing budget.

What Is MiniMax Music 2.5 and What Changed From 2.0

MiniMax officially launched Music 2.5 on January 28, 2026. The company positioned it as breaking two barriers: "paragraph-level precision control" and "physical-grade high fidelity." Translation: you get more control over song structure, and vocals sound less robotic.

The Real Improvements

I compared 20+ generations between 2.0 and 2.5 using identical prompts. Here's what actually changed:

Structure Tags (14 total): Version 2.0 had basic verse/chorus detection. Version 2.5 supports 14 distinct structural markers, according to the official API documentation.

The difference: 2.0 might give you a verse-chorus structure if you're lucky. 2.5 lets you design the emotional arc upfront. I tested this with a pop ballad—tagged a slow intro, built tension through pre-chorus, hit the emotional peak at the bridge. It followed the structure 9 out of 10 times.

Vocal Quality Improvements: MiniMax claims "smooth pitch transitions, natural vibrato, chest-to-head resonance shifts." I'm not a vocal coach, but I A/B tested belt notes and falsetto sections. Version 2.5 handles these transitions better, with less of that jarring jump you get with lower-tier AI vocals. The breathing patterns between phrases also feel more natural, though still not quite human on longer sustained notes.

Instrument Library & Mixing: The official release notes mention "100+ instruments" with style-adaptive mixing. What this means in practice: when I generate a 1980s synth-pop track, it applies period-appropriate production choices, such as a warmer midrange and specific reverb characteristics. For lo-fi hip-hop, it adds vinyl grain texture without me specifying it in the prompt.

Genre recognition improved. I tested rock, jazz, blues, EDM—the model adjusts its mixing approach to match genre conventions. Rock tracks get appropriate distortion and power; jazz gets characteristic spatial depth.

Generate Your First Song in 5 Steps

I walked through this process 15+ times testing different approaches. Here's the fastest path from zero to downloadable track:

Step 1: Access the Music Page

Navigate to minimax.io/audio/music. No signup required for testing, but you'll need an account for downloads.

Step 2: Select Music 2.5 Model

Critical detail the docs don't emphasize: there's a model selector dropdown. Make sure it says "Music 2.5"—not 2.0 or 1.5. I wasted three generations before I noticed I was still on the old version.

Step 3: Add Tagged Lyrics

This is where the structural control comes in. Basic format:

[Verse]

Your lyric lines here

Each line on new row

[Chorus]

Hook lyrics here

Repeating main idea

[Bridge]

Contrast section

Different perspective

Character limits from the API spec:

- Lyrics field: 1–3,500 characters for Music 2.5

- Each structural tag counts toward this limit

My workflow tip: Write the full lyrics in a text editor first. Insert tags. Paste the whole block into the lyrics field. Don't try to compose inside the web interface—you'll lose work if the page refreshes.
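If you draft lyrics outside the browser anyway, the tag-and-paste step can be scripted so the block is length-checked before it goes into the lyrics field. This is a minimal sketch, assuming the 3,500-character limit counts characters the way Python's `len()` does (tags and newlines included). The function name and the section layout are my own illustration, not part of any MiniMax tooling.

```python
MAX_LYRICS_CHARS = 3500  # Music 2.5 lyrics limit quoted from the API spec above


def build_lyrics(sections):
    """Join (tag, lines) pairs into one tagged lyrics block.

    `sections` looks like [("Verse", ["line one", "line two"]), ...].
    Assumption: tags, newlines, and lyric text all count toward the limit.
    """
    blocks = []
    for tag, lines in sections:
        blocks.append("[" + tag + "]\n" + "\n".join(lines))
    lyrics = "\n\n".join(blocks)
    if not 1 <= len(lyrics) <= MAX_LYRICS_CHARS:
        raise ValueError(
            f"lyrics are {len(lyrics)} characters; allowed range is 1-{MAX_LYRICS_CHARS}"
        )
    return lyrics


song = build_lyrics([
    ("Verse", ["Your lyric lines here", "Each line on new row"]),
    ("Chorus", ["Hook lyrics here", "Repeating main idea"]),
    ("Bridge", ["Contrast section", "Different perspective"]),
])
print(song)
print(len(song), "of", MAX_LYRICS_CHARS, "characters used")
```

Raising an error instead of silently truncating is deliberate: a clipped final section is harder to notice than a failed check.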

Step 4: Add Style Prompt

The style field is optional for Music 2.5 (required in older versions). But I've found adding it improves consistency.

Good prompt structure:

[Genre], [Mood/Emotion], [Specific Era/Style Reference]

Examples that worked:

- "Indie folk, melancholic, introspective, longing"

- "Synth-pop, 1980s Minneapolis sound, upbeat"

- "Lo-fi hip-hop, chill, study vibes, vinyl texture"

The model recognizes era-specific references. "1980s Minneapolis sound" produces different synth textures than just "1980s synth-pop"—it picks up on the Prince/Jam & Lewis production style.

Character limit: 0–2,000 characters (confirmed from API documentation)
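The genre/mood/era pattern is easy to template if you generate in batches. A small sketch, assuming the 2,000-character limit is a plain character count; `build_style_prompt` and its parameters are hypothetical names of my own.

```python
MAX_STYLE_CHARS = 2000  # style prompt limit quoted above


def build_style_prompt(genre, moods=(), reference=None):
    """Compose 'Genre, mood(s), era/style reference' as one comma-separated string."""
    parts = [genre, *moods]
    if reference:
        parts.append(reference)
    # Drop empty pieces so stray blanks don't produce ", ," in the prompt.
    prompt = ", ".join(p.strip() for p in parts if p and p.strip())
    if len(prompt) > MAX_STYLE_CHARS:
        raise ValueError(
            f"style prompt is {len(prompt)} characters; limit is {MAX_STYLE_CHARS}"
        )
    return prompt


print(build_style_prompt("Synth-pop", ["upbeat"], "1980s Minneapolis sound"))
print(build_style_prompt("Indie folk", ["melancholic", "introspective", "longing"]))
```

An empty result is allowed here because the style field is optional for Music 2.5.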

Step 5: Generate & Download

Hit generate. Generation typically takes 45-90 seconds (varies by server load). Preview the track in-browser. If it matches your intent, download.

Export formats available:

- MP3 (default)

- Sample rates: 32000Hz, 44100Hz (CD quality), 48000Hz

- Bitrates: 128kbps, 256kbps, 320kbps

I default to 44100Hz / 256kbps for content work—good enough for social media and podcast intros without massive file sizes.
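To put rough numbers on the file-size tradeoff: for a constant-bitrate MP3, size depends on bitrate and duration, not on sample rate. The sketch below assumes constant-bitrate encoding and ignores metadata overhead, so treat the results as estimates rather than what MiniMax will deliver byte for byte.

```python
def mp3_size_mb(bitrate_kbps, duration_s):
    """Estimate constant-bitrate MP3 size in megabytes (1 MB = 1,000,000 bytes)."""
    bytes_per_second = bitrate_kbps * 1000 / 8  # kilobits/s -> bytes/s
    return bytes_per_second * duration_s / 1_000_000


# A maximum-length (5:00) track at each bitrate listed above
for kbps in (128, 256, 320):
    print(f"{kbps} kbps, 5:00 track: ~{mp3_size_mb(kbps, 300):.1f} MB")
# 128 kbps, 5:00 track: ~4.8 MB
# 256 kbps, 5:00 track: ~9.6 MB
# 320 kbps, 5:00 track: ~12.0 MB
```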

Pricing, Limits, and What to Know Before Paying

This is where it gets messy. MiniMax doesn't publish transparent per-generation costs on the main website. Here's what I found through testing and cross-referencing with the developer platform:

Current Pricing Structure (February 2026)

Official Platform (minimax.io):

- Pay-as-you-go credit system

- Each generation costs ~300 credits

- Credit bundles purchased through account dashboard

- No published $/credit conversion rate on public pages

Third-Party API Access (WaveSpeedAI): According to WaveSpeedAI's model page, Music 2.5 runs at $0.075 per generation, where one generation is a single song of up to 5 minutes. That works out to roughly 13 generations per dollar.
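The per-generation price understates real cost once rerolls are counted. Here is a back-of-envelope sketch: the price is the WaveSpeedAI figure quoted above, while the keep rate is a hypothetical input you would measure yourself. The 0.9 in the example simply borrows the structure-following rate from my pop ballad test and assumes each attempt is independent.

```python
import math

PRICE_PER_GENERATION = 0.075  # USD, WaveSpeedAI figure quoted above


def generations_per_dollar(price):
    """Whole generations that one dollar buys."""
    return math.floor(1 / price)


def expected_cost_per_keeper(price, keep_rate):
    """Average spend per usable track when only a fraction of attempts are kept.

    Assumes independent attempts, so the expected number of tries is 1 / keep_rate.
    """
    if not 0 < keep_rate <= 1:
        raise ValueError("keep_rate must be in (0, 1]")
    return price / keep_rate


print(generations_per_dollar(PRICE_PER_GENERATION))  # 13
print(f"${expected_cost_per_keeper(PRICE_PER_GENERATION, 0.9):.3f} per usable track")
```

At a 50% keep rate the same formula gives $0.15 per usable track, which is the number to budget against if your prompts are still experimental.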

Developer API: The official pricing page doesn't break down music generation specifically—it redirects to "contact sales" for enterprise. Individual developers need to check the platform console for current rates.

Free Tier Reality Check

There is no permanent free tier for Music 2.5 as of February 2026. New account sign-ups may get trial credits, but:

- Credit amount varies (I got 500 credits initially in January 2026)

- No published refresh rate

- Trial credits expire

If you're evaluating for production use, budget for paid credits from the start.
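For a sense of scale, here is the credit arithmetic using the figures from this section. The ~300 credits per generation is approximate, so the results are ballpark; the helper name is my own.

```python
CREDITS_PER_GENERATION = 300  # approximate cost per generation noted above


def generations_from_credits(credits, per_generation=CREDITS_PER_GENERATION):
    """Return (full generations, leftover credits) for a given balance."""
    return divmod(credits, per_generation)


# The 500 trial credits I received cover one generation, with 200 left over.
print(generations_from_credits(500))  # (1, 200)

# Credits needed for a 50-generation evaluation run
print(50 * CREDITS_PER_GENERATION)  # 15000
```

In other words, a trial balance of that size is a single audition, not an evaluation.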

Known Limits & Constraints

Through 50+ generations, here's what I hit:

Maximum Song Duration:

- Up to 5 minutes per generation

- Actual length varies (my generations ranged from 2:30 to 4:45)

- No way to extend a track beyond a single generation

No Stem Export: You get the final mixed track. Can't isolate vocals, drums, bass separately. This killed a few workflow ideas I had—wanted to extract just the instrumental for background use, but had to regenerate as pure instrumental instead.

Editing Limitations: Once generated, you can't tweak the mix or swap out sections. It's regenerate-from-scratch only. Some competitors (Suno, Udio) let you extend or modify sections—MiniMax doesn't have this yet.

Instrumental Variance: When I generate the same prompt multiple times, vocal delivery stays reasonably consistent, but instrumental arrangements can vary significantly. Generated the same lo-fi hip-hop prompt 5 times—got different drum patterns, different basslines, different sample choices each time. If you need exact reproducibility, this is a problem.

Language Support: Strong for Mandarin Chinese and English (as confirmed in the official announcement). The model was "optimized specifically for Mandarin pop music" with training on C-Pop and C-Rap. Other languages work but with less consistent results—I tested Spanish lyrics and got acceptable pronunciation but less natural phrasing.

Commercial Use Clarity

MiniMax's public materials describe outputs as royalty-free for commercial use. However, I couldn't find explicit licensing terms published on the main site, and the API documentation doesn't specify usage rights.

What I did: emailed their support. Response time was 48 hours. They confirmed commercial use is permitted under their current terms, but recommended checking the license agreement in the developer console for specific projects.

If you're using this for client work or monetized content, get written confirmation of usage rights before delivering final assets.

FAQ

What's the maximum duration for Music 2.5 generations?

Up to 5 minutes per generation according to the API spec, but actual length varies based on the prompt and structural complexity. In my testing, most generations landed between 2:30 and 4:30 unless I explicitly specified a longer format.

Can I use MiniMax Music 2.5 outputs commercially?

The platform indicates commercial use is permitted, but explicit licensing terms aren't prominently displayed on public pages. Check your account's developer console for the specific license agreement, or contact MiniMax support for written confirmation before using outputs in commercial projects.

What languages are supported?

Mandarin Chinese and English have the strongest support—the model was specifically optimized for C-Pop, C-Rap, and English-language production. Other languages technically work through the lyrics field, but pronunciation accuracy and natural phrasing aren't guaranteed. I tested Spanish and got passable results; haven't tested other languages extensively.

What export formats are available?

MP3 is the standard format. You can select sample rates (32kHz, 44.1kHz, 48kHz) and bitrates (128kbps, 256kbps, 320kbps) through the audio settings. No WAV or FLAC export as of February 2026. For professional production work requiring lossless formats, this might be a limitation.

Can I generate purely instrumental tracks?

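Yes. In the web interface, leave the lyrics field empty and describe the instrumental arrangement in the style prompt. I've generated background music for video projects this way by specifying the instrumentation and mood in detail. Example: "Cinematic orchestral, piano and strings, emotional build, no vocals." Note that the API spec lists a 1-character minimum for the lyrics field, so this approach may not carry over directly to API calls.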

How does structure tagging actually work?

Add brackets around structure labels directly in the lyrics field: [Verse], [Chorus], [Bridge], etc. The model interprets these as section markers and adjusts instrumentation, dynamics, and vocal delivery to match. Tagging doesn't guarantee perfect execution—I'd say it follows the intended structure about 85-90% of the time based on my testing.
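Before spending credits, it can help to check how your tagged lyrics split into sections. The sketch below is a pre-flight check on your side only. It assumes each tag sits alone on its own line, and it says nothing about how the model parses tags internally.

```python
import re

# A tag line is a single bracketed label with nothing else on the line.
TAG_LINE = re.compile(r"^\[([^\[\]]+)\]\s*$")


def split_sections(lyrics):
    """Return [(tag, [lyric lines])] in order.

    Lines that appear before the first tag are grouped under tag None,
    which usually signals a forgotten section marker.
    """
    sections = []
    current = None
    for raw in lyrics.splitlines():
        line = raw.strip()
        if not line:
            continue  # blank lines are only visual spacing
        match = TAG_LINE.match(line)
        if match:
            current = (match.group(1), [])
            sections.append(current)
        elif current is None:
            current = (None, [line])
            sections.append(current)
        else:
            current[1].append(line)
    return sections


lyrics = """[Verse]
Your lyric lines here
Each line on new row

[Chorus]
Hook lyrics here
"""
for tag, lines in split_sections(lyrics):
    print(tag, len(lines))
# Verse 2
# Chorus 1
```

A section with zero lines or a `None` tag is worth fixing before you generate, since either one makes it harder to tell whether a structural miss was the model's fault or the input's.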

The Bottom Line

MiniMax Music 2.5 delivers on structural control—the 14-tag system actually works for directing song architecture instead of hoping random generation gives you what you need. Vocal quality improved enough that I'm using it for podcast intros and social content without major post-processing.

The pricing remains unclear if you're not on the developer platform, and the lack of stem export or post-generation editing limits some use cases. But if your workflow is "generate complete tracks with specific structure," this hits better than most competitors I've tested.

At Macaron, we've been testing how conversational workflows turn into executable outputs. If you're generating content ideas through chat and want them to actually ship—whether that's music, written content, or structured plans—we built the platform around making that transition frictionless. Try it with your real tasks and see if the structure holds.