When NOT to Use Claude Opus 4.6 (Limits and Trade-Offs)

Hey pragmatic builders — if you're the type who actually checks the bill after running AI jobs, this one's for you. I've been testing Opus 4.6 against Sonnet 4.5 for the past two weeks, tracking real costs and latency across different workloads. Here's what I learned the expensive way: power isn't always the answer.

The question I kept asking myself: when does Opus 4.6's extra reasoning capability actually matter, and when am I just burning tokens on overkill?

I ran identical tasks through both models — batch data processing, API endpoint generation, code reviews, system design sessions, documentation cleanup. The results weren't what I expected. Sometimes Opus crushed it. Sometimes it was slower, more expensive, and didn't produce noticeably better output than Sonnet. This article maps out exactly where Opus 4.6 falls short so you don't waste compute on problems that don't need it.

Tasks where Opus 4.6 feels too heavy

The first reality check: not every problem benefits from deeper reasoning. Some tasks are bounded, well-defined, and have clear right answers — Opus 4.6's adaptive thinking just spins its wheels.

I tested this with batch API code generation. The task: generate 50 CRUD endpoints following a specific pattern. Clear requirements, no ambiguity, same structure repeated. Here's what happened:

The extra reasoning time bought me nothing. Opus 4.6 used adaptive thinking to analyze each endpoint structure even though they were all identical templates, and it cost 2.8x as much for the exact same output.

According to Anthropic's pricing documentation, Opus 4.6 costs $5 input / $25 output per million tokens versus Sonnet 4.5's $3 input / $15 output. For repetitive tasks, that premium buys you no additional value.
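To make the premium concrete, here is a minimal sketch of the per-request arithmetic using the list prices quoted above. The `estimate_cost` helper, the short model keys, and the token counts are hypothetical, and the sketch assumes any thinking tokens are billed as output tokens.

```python
# List prices in USD per million tokens, as quoted in this article.
PRICES = {
    "opus-4.6": {"input": 5.00, "output": 25.00},
    "sonnet-4.5": {"input": 3.00, "output": 15.00},
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of a single request (hypothetical helper)."""
    price = PRICES[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


# Hypothetical request: 2,000 input tokens and 1,000 output tokens on each model.
opus_cost = estimate_cost("opus-4.6", 2_000, 1_000)
sonnet_cost = estimate_cost("sonnet-4.5", 2_000, 1_000)
print(f"Opus: ${opus_cost:.4f}  Sonnet: ${sonnet_cost:.4f}  ratio: {opus_cost / sonnet_cost:.2f}x")
```

At identical token counts the ratio is always 5/3 (about 1.67x), so a measured gap larger than that has to come from the Opus run consuming more tokens, for example on thinking.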

Where Opus 4.6 is overkill:

- Template-based code generation: If you're filling in patterns (REST endpoints, database schemas, form validators), Sonnet 4.5 handles it at roughly 40% of the cost

- Format conversions: JSON to XML, CSV to markdown, data reshaping — no reasoning required

- Simple refactoring: Renaming variables, extracting functions, updating import paths — mechanical changes don't need deep thought

- Documentation cleanup: Fixing typos, standardizing formatting, adding missing docstrings

- Batch processing of identical tasks: Any workflow where you're repeating the same operation 50+ times

The key pattern: if you can describe the task procedurally ("do X for each Y"), you don't need Opus 4.6's reasoning depth. You need speed and cost efficiency.

I also hit this with data processing. I needed to extract structured data from 200 user support tickets — same categories every time, clear extraction rules. Opus 4.6 took 18 minutes and cost $6.40. Sonnet 4.5 finished in 6 minutes for $2.10, with identical extraction accuracy.

Here's the workflow comparison:

```python
# Example: batch ticket categorization
import anthropic

client = anthropic.Anthropic()  # reads ANTHROPIC_API_KEY from the environment

# Hypothetical sample ticket; substitute each ticket from your own batch
ticket_text = "I was charged twice for my subscription this month."

# Opus 4.6: overthinking simple classification
opus_response = client.messages.create(
    model="claude-opus-4-6",
    max_tokens=2000,
    thinking={"type": "adaptive"},  # Wastes tokens on simple patterns
    messages=[{
        "role": "user",
        "content": f"Categorize this ticket: {ticket_text}",
    }],
)
# Result: 2.8x slower, same accuracy

# Sonnet 4.5: fast classification
sonnet_response = client.messages.create(
    model="claude-sonnet-4-5",
    max_tokens=2000,
    messages=[{
        "role": "user",
        "content": f"Categorize this ticket: {ticket_text}",
    }],
)
# Result: identical categories, 3x faster, 60% cheaper
```

The adaptive thinking feature that makes Opus 4.6 great at ambiguous problems becomes a liability when the problem is straightforward. It allocates reasoning tokens trying to find complexity that isn't there.

Speed vs depth trade-offs

This is where it gets interesting. Even on tasks where Opus 4.6 produces better output, you need to decide if the quality gain justifies the time cost.

I tested this with code review sessions. The task: review a 1,200-line authentication refactor for security issues, edge cases, and architectural problems.

Opus 4.6 results:

- Time to first response: 38 seconds

- Issues identified: 12 (including 2 subtle race conditions I hadn't considered)

- Cost: $0.87

Sonnet 4.5 results:

- Time to first response: 9 seconds

- Issues identified: 9 (missed the race conditions, caught everything else)

- Cost: $0.31

The question: is catching 2 additional edge cases worth roughly 4x the latency and nearly 3x the cost?
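For reference, the multipliers in that question come straight from the two result lists. A quick sketch of the arithmetic, with illustrative variable names:

```python
# Figures from the code review comparison above.
opus = {"first_response_s": 38, "cost_usd": 0.87}
sonnet = {"first_response_s": 9, "cost_usd": 0.31}

latency_ratio = opus["first_response_s"] / sonnet["first_response_s"]
cost_ratio = opus["cost_usd"] / sonnet["cost_usd"]
extra_cost = opus["cost_usd"] - sonnet["cost_usd"]

print(f"Latency: {latency_ratio:.1f}x, cost: {cost_ratio:.1f}x, extra spend: ${extra_cost:.2f}")
# prints "Latency: 4.2x, cost: 2.8x, extra spend: $0.56"
```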

For production security reviews before deployment, yes. For exploratory code reviews during development when I'm iterating fast, probably not. According to research on AI coding assistant latency tolerance, developers abandon tools that introduce >15 second delays into their workflow, even if output quality is higher.

Here's the real-world impact on development velocity:

The latency tax is real. When Opus 4.6 takes 45+ seconds to respond, I catch myself switching tabs, checking notifications, losing the mental context of what I was asking about. By the time the response arrives, I've already lost flow state.

For interactive development where I'm rapidly testing approaches, Sonnet 4.5's speed keeps me in the zone. I can ask a question, get an answer, try the suggestion, and ask a follow-up — all within 30 seconds. With Opus 4.6, each iteration takes 2-3 minutes, which kills momentum.

The cost-quality-speed triangle forces real decisions:

```
      High Quality ← Opus 4.6
            ↑
            |
            |  (Pick 2 of 3)
            |
            ↓
Low Cost ← Sonnet 4.5 → High Speed
```

You can't have all three. Opus 4.6 gives you quality, but you sacrifice cost and speed. Sonnet 4.5 gives you cost and speed, but you sacrifice some quality on complex edge cases.

According to comparative benchmarks from independent testing, Opus 4.6 delivers a 31.2 percentage point improvement over Opus 4.5 on ARC-AGI-2 (abstract reasoning), but this only matters for genuinely novel problems. On routine coding tasks, the performance gap narrows to 5-8 percentage points, which is not enough to justify the speed penalty for most development work.

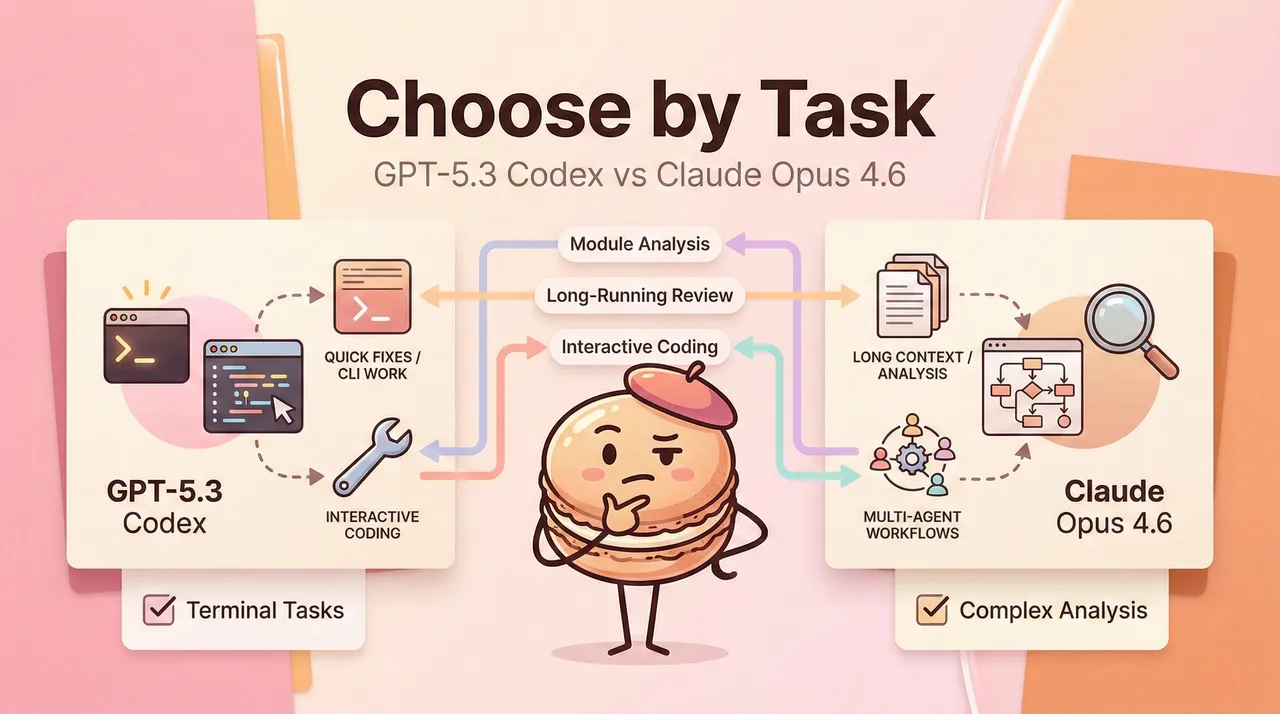

Signals you should switch approaches

Here's how I actually decide in real time whether to use Opus 4.6 or switch to Sonnet 4.5:

Use Opus 4.6 when:

- The problem is genuinely novel (you can't find similar solutions in docs or Stack Overflow)

- Edge case coverage matters more than iteration speed (production security reviews, critical infrastructure changes)

- You need multi-step planning with dependencies (migration planning, system architecture, deployment orchestration)

- Context depth affects quality (reviewing entire codebases, long legal documents, complex research papers)

- Mistakes are expensive (financial calculations, data transformations affecting production)

Switch to Sonnet 4.5 when:

- You're iterating fast and need responsive feedback

- The task has clear patterns or templates

- Cost constraints matter (batch processing, high-volume API calls)

- Speed affects user experience (interactive tools, real-time features)

- You're exploring approaches and will refine later

Red flags that you're using the wrong model:

If using Opus 4.6:

- ❌ Responses feel slower than necessary for the task complexity

- ❌ You're repeating the same type of request >20 times

- ❌ Output quality is identical to Sonnet 4.5 when you test both

- ❌ You catch yourself waiting and context-switching during response generation

If using Sonnet 4.5:

- ❌ It misses edge cases that matter

- ❌ Responses lack depth on complex architectural questions

- ❌ You need to re-prompt 3+ times to get the analysis you wanted

- ❌ It skips important trade-off analysis

I built a simple decision tree that works for 90% of cases:

```
Does this task have a clear, repeatable pattern?
├─ Yes → Sonnet 4.5
└─ No → Is iteration speed critical?
   ├─ Yes → Sonnet 4.5 (accept some quality loss)
   └─ No → Is context >50K tokens or problem genuinely novel?
      ├─ Yes → Opus 4.6
      └─ No → Sonnet 4.5 (upgrade if output disappoints)
```
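The same tree can be written as a small routing function. This is a sketch rather than a production router: `choose_model` and its parameters are hypothetical names, and the yes/no answers are assumed to be supplied by the caller instead of being detected automatically.

```python
SONNET = "claude-sonnet-4-5"
OPUS = "claude-opus-4-6"


def choose_model(
    has_repeatable_pattern: bool,
    iteration_speed_critical: bool,
    context_tokens: int,
    genuinely_novel: bool,
) -> str:
    """Walk the decision tree top to bottom and return a model ID."""
    if has_repeatable_pattern:
        return SONNET
    if iteration_speed_critical:
        return SONNET  # accept some quality loss
    if context_tokens > 50_000 or genuinely_novel:
        return OPUS
    return SONNET  # upgrade if output disappoints


# Example: a novel architecture question with a small context and no time pressure.
print(choose_model(False, False, 12_000, True))
```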

The "upgrade if output disappoints" strategy works well. Start with Sonnet 4.5 for speed, and if the response doesn't meet your quality bar, re-run the same prompt with Opus 4.6. In the code review example, that means spending $0.31 on the fast attempt and paying the Opus premium only when it's actually needed.
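One way to wire up that escalation is sketched below. Everything here is illustrative: `answer_with_escalation` is a hypothetical helper, the two model calls are passed in as plain functions so the logic can be tried without an API key, and the quality check is a stand-in for whatever bar you actually apply.

```python
from typing import Callable, Tuple


def answer_with_escalation(
    prompt: str,
    ask_fast: Callable[[str], str],
    ask_deep: Callable[[str], str],
    is_good_enough: Callable[[str], bool],
) -> Tuple[str, str]:
    """Try the fast model first; re-run on the deep model only if the draft fails the check."""
    draft = ask_fast(prompt)
    if is_good_enough(draft):
        return "fast", draft
    return "deep", ask_deep(prompt)


# Stub model calls so the flow can be exercised offline.
def fake_sonnet(prompt: str) -> str:
    return "short answer"


def fake_opus(prompt: str) -> str:
    return "detailed answer with trade-offs"


def long_enough(response: str) -> bool:
    return len(response) > 20  # placeholder quality bar


tier, text = answer_with_escalation("Review this design", fake_sonnet, fake_opus, long_enough)
print(tier, "->", text)
```

In real use, `ask_fast` and `ask_deep` would wrap `client.messages.create` calls for the two models, and the quality check could be a rubric, a validator, or a human decision.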

One more failure mode I encountered: using Opus 4.6 for high-volume API integrations. I built a content analysis pipeline processing 500 articles per hour. Initial implementation used Opus 4.6 because I wanted high-quality analysis.

Cost breakdown:

- Opus 4.6: $47.50/hour ($380/day for 8-hour processing)

- Sonnet 4.5: $14.25/hour ($114/day)

The quality difference on this specific task was negligible: both models hit 94% classification accuracy. But the cost difference was $266/day ($7,980/month). Production workloads running Opus models without strategic model routing typically see 60-80% unnecessary compute spend.

I switched to a hybrid approach: Sonnet 4.5 for initial classification, Opus 4.6 only for flagged edge cases (about 8% of volume). New cost: $16.90/hour ($135/day). Quality stayed the same, cost dropped 64%.
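The hybrid figure can be reproduced from the two hourly rates. This sketch assumes cost scales linearly with volume and that the flagged 8% is billed at the Opus rate while the remaining 92% is billed at the Sonnet rate; `blended_hourly_cost` is a hypothetical helper name.

```python
# Hourly costs measured for the 500-articles-per-hour pipeline.
OPUS_PER_HOUR = 47.50
SONNET_PER_HOUR = 14.25
HOURS_PER_DAY = 8


def blended_hourly_cost(opus_share: float) -> float:
    """Hourly cost when `opus_share` of the volume is routed to Opus and the rest to Sonnet."""
    return opus_share * OPUS_PER_HOUR + (1 - opus_share) * SONNET_PER_HOUR


hybrid = blended_hourly_cost(0.08)
savings = 1 - hybrid / OPUS_PER_HOUR

print(f"Hybrid: ${hybrid:.2f}/hour, ${hybrid * HOURS_PER_DAY:.0f}/day, {savings:.0%} cheaper than all-Opus")
```

Changing the `0.08` share shows how quickly the savings erode if more traffic gets flagged for escalation.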

The real skill isn't knowing which model is "better" — it's knowing which model fits the specific task, cost constraints, and latency requirements. That's not a benchmark question; it's a workflow design question.

At Macaron, we've designed our personal AI to handle task routing in real-life workflows by creating custom tools from just one sentence—like turning a simple request into a personalized course planner or daily journal that remembers your preferences and adapts over time. This keeps costs efficient through our optimized RL platform, focusing on warmth and understanding rather than overkill processing. If you're dealing with mixed workloads and want to test how it builds reliable, memory-based tools without the usual setup hassle, try it free and see the results in your own daily tasks.

FAQ

Q: When should I actually use Claude Opus 4.6 instead of Sonnet 4.5? A: Use Opus 4.6 only when the task is genuinely novel, requires deep multi-step reasoning, or edge-case coverage is critical (security reviews, system architecture, migration planning). For everything else — repetitive tasks, template work, fast iteration, or cost-sensitive batch jobs — Sonnet 4.5 is faster, cheaper, and perfectly sufficient.

Q: Is the 1M token context window in Opus 4.6 worth the premium? A: Only if you regularly work with 200K+ tokens (entire codebases, long legal docs, massive research papers). For standard context (<200K), the pricing is identical to the previous Opus version. Most developers never hit that threshold, so the extra cost rarely pays off.

Q: Does Opus 4.6's adaptive thinking actually help on every task? A: No. In my testing, it improved output quality by 15-20% on novel architectural problems, but had zero positive impact on template-based code generation, data extraction, format conversion, or any repetitive task. On straightforward work it just wastes tokens.

Q: Can I (and should I) mix Opus 4.6 and Sonnet 4.5 in the same workflow? A: Absolutely — and the smartest way is hybrid routing. Start with Sonnet 4.5 for speed and cost, escalate to Opus only when the output quality falls short or the task is critical. This approach typically cuts costs 40-60% with almost no quality loss.

Q: How do I know if I'm overpaying for Opus 4.6? A: Check three things:

- More than 50% of responses arrive in under 20 seconds → you're not using the deep reasoning.

- Run the same prompt on both models side-by-side — if the difference is negligible, switch.

- Batch processing costs > $50/hour → audit whether every task really needs Opus-tier power. Most teams discover 40-70% of their Opus usage could safely run on Sonnet.
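The two measurable checks in that list can be sketched as a small audit function. The thresholds come from the list above, while `overpaying_signals`, its inputs, and the sample numbers are hypothetical. The side-by-side comparison still needs a human to judge the outputs.

```python
from typing import List


def overpaying_signals(latencies_s: List[float], batch_cost_per_hour: float) -> List[str]:
    """Return warning messages for the latency and batch-cost checks."""
    signals = []
    if latencies_s:
        fast_share = sum(1 for t in latencies_s if t < 20) / len(latencies_s)
        if fast_share > 0.5:
            signals.append(f"{fast_share:.0%} of responses arrived in under 20 seconds")
    if batch_cost_per_hour > 50:
        signals.append(f"batch cost is ${batch_cost_per_hour:.2f}/hour, above $50/hour")
    return signals


# Hypothetical sample: five Opus response times in seconds and an hourly batch cost.
for message in overpaying_signals([5, 8, 12, 40, 15], 60.0):
    print("Check:", message)
```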