GLM-5 vs DeepSeek vs GPT-5 for Personal AI

A small thing pushed me into this: I asked my AI to help plan a friend's birthday dinner that required booking three restaurants, checking everyone's dietary restrictions, and making a backup plan. It forgot the dietary restrictions halfway through. Again.

That was last week. This week, GLM-5 dropped, GPT-5.2 got an update, and DeepSeek expanded its context window to over 1 million tokens. I didn't set out to become a model comparison person, but here I am, testing three frontier models against the same messy, multi-step personal tasks that keep breaking.

What to compare for personal AI

I'm not benchmarking these on math olympiad problems. I care about whether they can handle the unglamorous reality of personal AI: remembering what I said four exchanges ago, maintaining consistency across a 30-minute planning session, and not hallucinating details when I ask it to coordinate something with multiple moving parts.

The tests I ran:

- Planning a week-long trip with budget constraints and changing availability

- Maintaining context across a long journaling session where I kept circling back to earlier themes

- Triaging 47 unread emails and sorting them by urgency without losing nuance

These aren't impressive tasks. They're just the ones that matter when AI stops being a party trick and starts being something you rely on daily.

Planning and multi-step reasoning

GPT-5.2 approached the trip planning like a diligent assistant who'd had too much coffee. It laid out options, cross-referenced my budget constraints, and even flagged a scheduling conflict I hadn't noticed. The routing system that automatically switches between GPT-5.2 Instant and GPT-5.2 Thinking worked better than I expected—when the task got complex, I could feel it shift gears without me asking. What I didn't expect: the subtle hesitation before complex reasoning kicks in. It's maybe two seconds, but you notice it. Not frustrating, just... present.

GLM-5 took a different approach. It was trained specifically for "agentic intelligence with autonomous planning," and it showed. When I gave it the trip planning task, it broke the problem down into discrete steps and actually remembered all of them. I fed it a notes file with scattered thoughts about transportation, lodging preferences, and budget limits across 15 disconnected paragraphs. It pulled the relevant pieces and built a coherent plan without asking me to restructure anything.

The 200K context window meant I could dump my entire messy notes file and it didn't lose track. What caught me off guard: it cost roughly $0.11 per million input tokens compared to GPT-5.2's $1.25, as reported by Digital Applied. That's roughly an 11x difference for similar capability. For a single trip planning session, this saved me maybe $0.003. For someone running personal AI tasks daily, it compounds.

DeepSeek V3.2, fresh off its February context expansion to 1M tokens as confirmed by the South China Morning Post, handled the entire planning session without context degradation. I tested this by feeding it progressively messier constraints (changing dates, updating the budget, adding dietary restrictions), and it held. The DeepSeek Sparse Attention (DSA) mechanism seemed to help here, though I couldn't see the mechanism itself, just the results.

Conversational tone and empathy

Here's where things got interesting. GPT-5.2 Instant felt... warmer. OpenAI's documentation notes they specifically reduced sycophancy from 14.5% to under 6%, aiming for "less effusively agreeable" responses. In practice, this meant it pushed back gently when I was overcomplicating the trip itinerary, which was oddly refreshing.

GLM-5's tone was more utilitarian. It didn't try to be your friend, but it also didn't feel robotic. When I used it for creative journaling—a session where I kept circling back to process a complicated work situation—it maintained consistency without trying to offer unsolicited advice. For someone who just wants AI to be a quiet, reliable thinking partner, this worked.

DeepSeek surprised me by feeling the most neutrally present. It responded without adding emotional color, but also without feeling cold. During the email triage task, when I was trying to decide which messages needed immediate responses and which could wait, it matched my energy without amplifying anxiety about the backlog.

Memory-dependent tasks

This is where the differences became stark.

I ran a test where I had a long conversation about organizing a small work project, then closed the session and came back two hours later to ask follow-up questions. GPT-5.2 maintained context within a session beautifully, but between sessions it started fresh. That's just how it works—each conversation is independent unless you explicitly reference earlier chats. This meant I had to either keep sessions open or spend time re-establishing context when I returned.

GLM-5 performed similarly within its session limits, though its 744B-parameter mixture-of-experts (MoE) architecture, with 40B parameters active, seemed to help maintain coherence across longer planning sessions. The model didn't degrade noticeably even when I was 90 minutes into a single conversation, which is longer than most personal AI tasks run but occasionally happens with complex planning.

DeepSeek's 1M token context meant I could keep adding to the same conversation thread without worrying about it forgetting earlier constraints. For tasks that genuinely span multiple days—like iterating on a personal project plan—this matters more than any benchmark score. I tested this by returning to a project planning thread three times over four days, each time adding new constraints or changing priorities. It held the full context without asking me to summarize where we'd left off.
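If you want a rough sense of whether a long-running thread will still fit, a back-of-the-envelope check is enough. This is a minimal sketch, not how any of these models count tokens: the window sizes are the ones quoted in this post, while the 4-characters-per-token ratio, the reply reserve, and the function names are my own assumptions for illustration.

```python
import math

# Context window sizes in tokens, as quoted in this post.
CONTEXT_WINDOW_TOKENS = {
    "GLM-5": 200_000,
    "DeepSeek V3.2": 1_000_000,
}

# Assumption: about 4 characters per token, a rough heuristic for English text.
# Real tokenizers differ by model, so treat the result as an estimate only.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Estimate the token count of a text from its character length."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(model: str, messages: list[str], reply_reserve: int = 4_000) -> bool:
    """Check whether a thread plus room for a reply fits in the model's window.

    reply_reserve is a hypothetical number of tokens kept free for the answer.
    """
    used = sum(estimate_tokens(message) for message in messages)
    return used + reply_reserve <= CONTEXT_WINDOW_TOKENS[model]


if __name__ == "__main__":
    # A thread of one million characters is about 250K estimated tokens.
    thread = ["x" * 1_000_000]
    for model in CONTEXT_WINDOW_TOKENS:
        print(model, fits_in_context(model, thread))
```

For anything close to the limit, the provider's own tokenizer is the only number worth trusting.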

Speed, cost & availability

Speed-wise, GPT-5.2 felt snappiest for quick back-and-forth. GLM-5's reported throughput of 17-19 tokens/second versus competitors' 25-30+ was noticeable in longer outputs but rarely frustrating.

Cost is where things got practical. My email triage task processed roughly 3,000 input tokens and generated another 800. On GPT-5.2: ~$0.004. On GLM-5: ~$0.0004. On DeepSeek V3.2: similar to GLM-5's pricing. For casual use, the difference is negligible. For daily personal AI tasks, it compounds.
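To make the "it compounds" point concrete, here is a small sketch of the input-side arithmetic. It uses only the input prices quoted earlier in this post ($1.25 and $0.11 per million tokens). Output-token prices aren't quoted here, so they are left out, and the 20-tasks-per-day figure is a hypothetical workload, not something I measured.

```python
# Input prices in USD per million tokens, as quoted earlier in this post.
# Output prices are not quoted in the post, so this covers input cost only.
INPUT_PRICE_PER_MILLION = {
    "GPT-5.2": 1.25,
    "GLM-5": 0.11,
}


def input_cost(model: str, input_tokens: int) -> float:
    """Return the input-side cost in USD for a single request."""
    return input_tokens * INPUT_PRICE_PER_MILLION[model] / 1_000_000


def monthly_input_cost(model: str, input_tokens: int, tasks_per_day: int, days: int = 30) -> float:
    """Project the input-side cost of repeating the same task every day."""
    return input_cost(model, input_tokens) * tasks_per_day * days


if __name__ == "__main__":
    for model in INPUT_PRICE_PER_MILLION:
        per_task = input_cost(model, 3_000)
        # Hypothetical workload: 20 triage-sized tasks per day for 30 days.
        per_month = monthly_input_cost(model, 3_000, tasks_per_day=20)
        print(f"{model}: ${per_task:.5f} per task, ${per_month:.2f} per month")
```

Even at that made-up volume the totals stay small in absolute terms. The gap only starts to matter once the workloads get much larger than a single inbox.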

Availability: GPT-5.2 is broadly accessible through ChatGPT Plus and API. GLM-5 launched February 11 via Z.ai's platform with an expected MIT-licensed open-weight release following Zhipu's tradition. DeepSeek V3.2 is available now via their API.

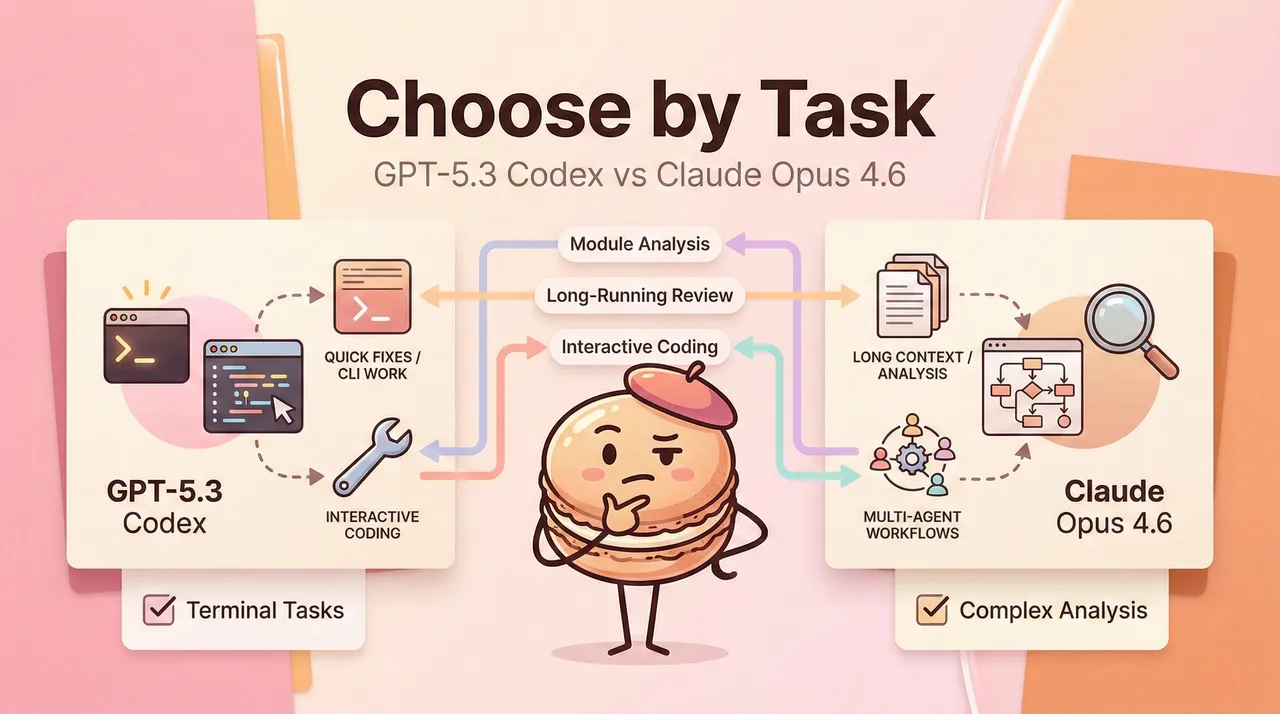

Best model by use case

Life planning → GLM-5

If you're coordinating something with many moving parts over multiple sessions—trip planning, event organization, project management—GLM-5's combination of strong agentic capabilities, 200K context, and cost efficiency makes the most sense. The tradeoff: slightly slower response times, though I rarely noticed this in practice.

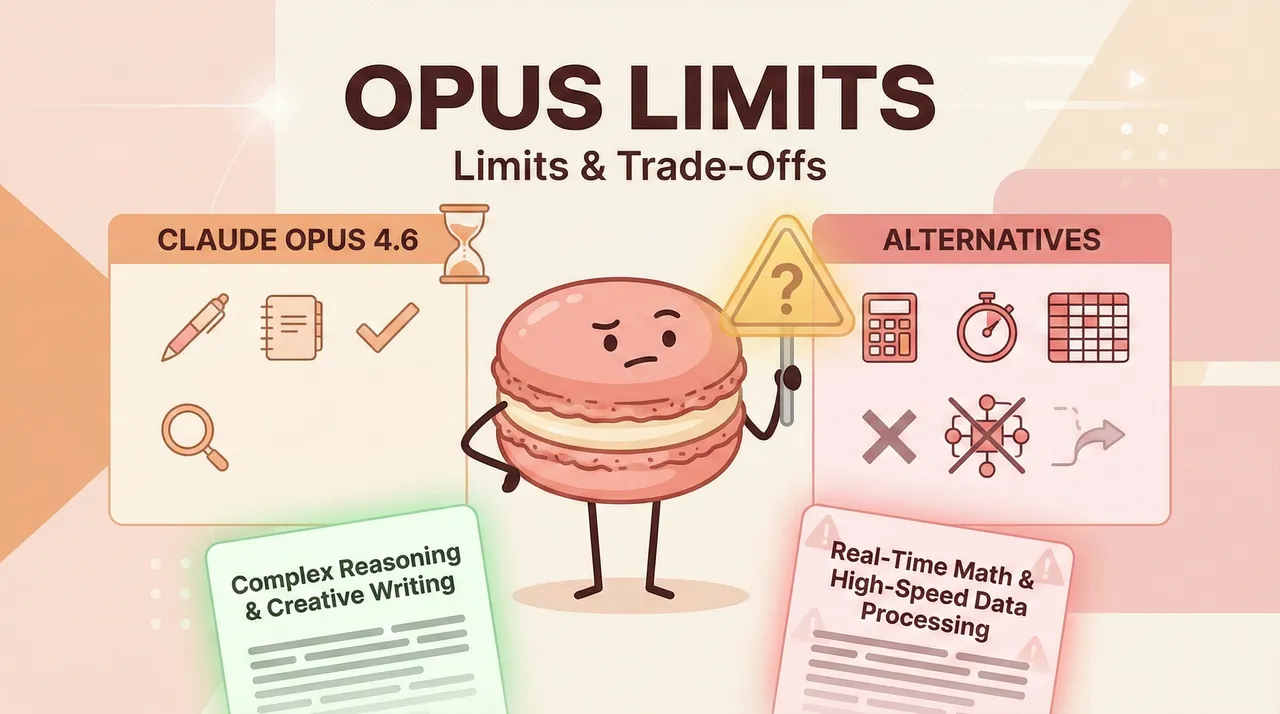

Creative journaling → DeepSeek V3.2

For open-ended reflection where you need the model to hold complex emotional threads across a long session, DeepSeek's 1M context window and neutral tone worked best. It didn't try to "help" when I just needed to think out loud, and it didn't lose track of themes I'd mentioned 30 minutes earlier.

Inbox triage → GPT-5.2

For quick, high-quality decisions that benefit from nuanced understanding of social dynamics and urgency hierarchies, GPT-5.2's speed and refined conversational capabilities won. The automatic switching between Instant and Thinking modes meant it could handle both straightforward sorting and complex judgment calls without me managing settings.

How Macaron lets you switch per task

This is where I should mention what I've been testing alongside these models. Macaron, our personal AI platform, routes tasks to different models based on what you're trying to accomplish. Instead of committing to one model for everything, you describe what you need, and it picks the right tool: GLM-5 for long-horizon planning, DeepSeek for context-heavy work, GPT-5.2 for quick conversational tasks.

I've been using it to avoid the exact problem that started this whole thing: choosing a model, hitting its limits, then manually copying context to a different one. The friction of switching models manually is subtle but real—you lose a few minutes reestablishing context, you miss nuances from the earlier conversation, and sometimes you just give up and accept a suboptimal result from whichever model you started with.
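The general idea of per-task routing can be sketched in a few lines. To be clear, this is not Macaron's implementation, which I'm not describing here. It's a toy keyword router whose categories and model picks mirror the recommendations in this post. The keyword lists, function names, and fallback choice are all hypothetical.

```python
# Model picks per task category, following the recommendations in this post.
ROUTES = {
    "planning": "GLM-5",
    "journaling": "DeepSeek V3.2",
    "triage": "GPT-5.2",
}

# Hypothetical keyword lists for illustration only. Substring matching is crude;
# a real router would more likely use a classifier or a model call.
KEYWORDS = {
    "planning": ("plan", "trip", "itinerary", "schedule", "budget"),
    "journaling": ("journal", "reflect", "think out loud"),
    "triage": ("email", "inbox", "reply", "urgent"),
}

# Assumption: requests that match nothing are treated as quick conversational tasks.
DEFAULT_CATEGORY = "triage"


def classify(request: str) -> str:
    """Pick the category whose keywords appear most often in the request."""
    text = request.lower()
    scores = {
        category: sum(keyword in text for keyword in keywords)
        for category, keywords in KEYWORDS.items()
    }
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else DEFAULT_CATEGORY


def pick_model(request: str) -> str:
    """Return the model name this toy router would send the request to."""
    return ROUTES[classify(request)]


if __name__ == "__main__":
    for request in (
        "Plan a week-long trip within my budget",
        "I want to journal about work",
        "Sort my inbox and flag urgent email",
    ):
        print(f"{request!r} -> {pick_model(request)}")
```

The hard part in practice isn't the lookup table. It's carrying the conversation's context across the model boundary, which this sketch doesn't attempt.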


Macaron handles the switching and maintains continuity across model boundaries. It's still early, but for someone juggling different types of personal AI tasks, it removes friction I didn't realize was slowing me down. The first time I used it for a task that required both quick email responses and longer-form planning, I noticed I wasn't context-switching in my own head anymore. I just described what I needed and let the system figure out which model fit.

I'll keep using all three of these models. For now. And I'll see what happens the next time I ask AI to coordinate something complicated without losing track halfway through. How about you?