How to Use Claude Opus 4.6 for System Design and Architecture

Hey fellow system designers — if you're the type who stares at architecture diagrams wondering "will this actually survive production," stick around. I've been putting Opus 4.6 through real design work for the past three weeks, and something's different this time.

Here's what I wanted to know: Can this model actually help with the messy, ambiguous parts of system design? Not the "generate boilerplate code" stuff — the real work. The trade-off analysis. The "what breaks first under load" questions. The architectural decisions that haunt you at 2 AM.

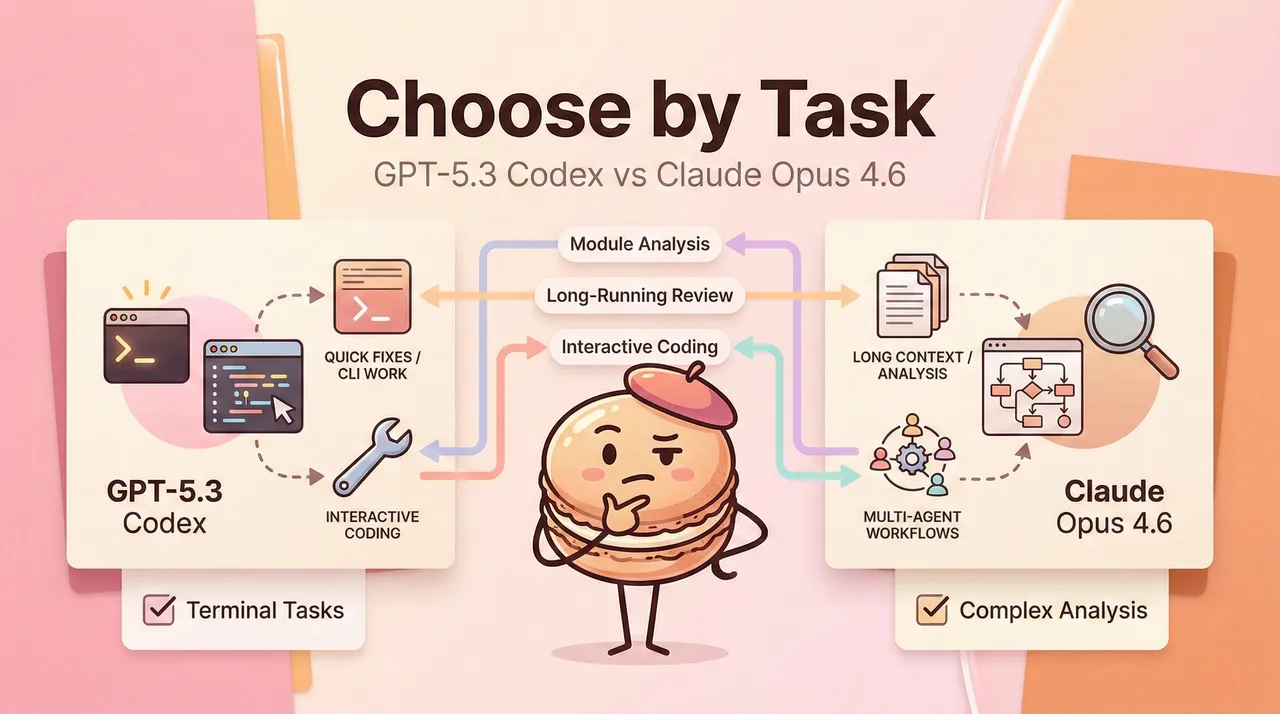

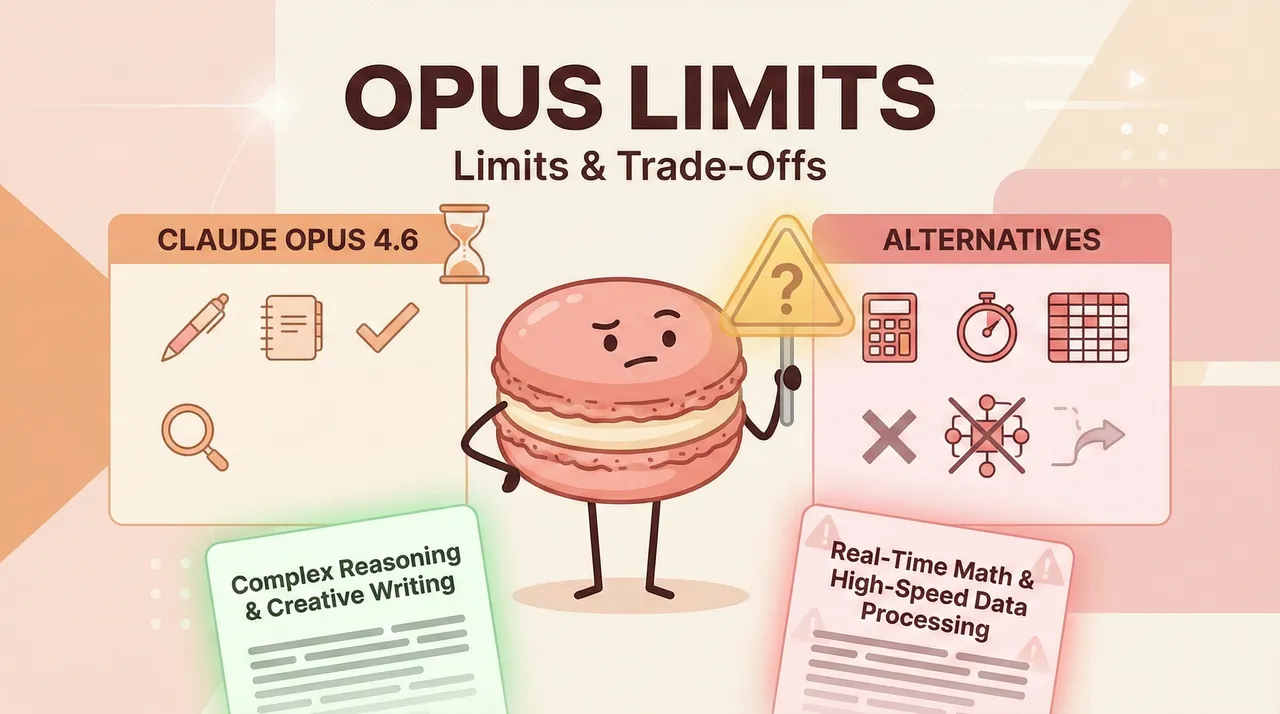

So I ran it through the scenarios that usually expose where AI falls flat: designing a distributed file deduplication system, refactoring legacy architectures, and planning multi-stage deployments. This article walks through what actually worked, what didn't, and how to extract useful architectural thinking from a model that costs $5 per million input tokens.

What makes Opus 4.6 good at architecture work

First thing I tested: does it actually understand system constraints, or just regurgitate patterns from training data?

According to Anthropic's official release, Opus 4.6 scored 65.4% on Terminal-Bench 2.0 (highest in the industry) and 72.7% on OSWorld for computer use tasks. Those numbers matter because they measure real multi-step reasoning, not just code completion.

But here's what I found more interesting in my own tests: the model doesn't jump straight to solutions. When I asked it to design a caching layer for a high-traffic API, it started by asking about my read/write ratio, data freshness requirements, and budget constraints. That's... not typical AI behavior.

The adaptive thinking feature is what changed my workflow. Instead of manually setting a "thinking budget," the model now decides when a problem needs deep reasoning. On simple refactoring tasks, it moves fast. On architectural decisions with safety implications, it slows down and thinks harder.
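For reference, here are the two request shapes side by side. This is a minimal sketch that assumes the Python `anthropic` SDK. The manual mode passes `thinking={"type": "enabled", "budget_tokens": N}`, and the adaptive mode used later in this article passes `thinking={"type": "adaptive"}`. `build_request` is a hypothetical helper that only assembles keyword arguments and sends nothing. Whether Opus 4.6 still accepts the manual form is worth confirming in the current docs.

```python
from typing import Any, Optional


def build_request(prompt: str, budget_tokens: Optional[int] = None,
                  max_tokens: int = 16000) -> dict[str, Any]:
    """Assemble kwargs for client.messages.create (hypothetical helper, no API call).

    budget_tokens=None -> adaptive thinking: the model decides how much to reason.
    budget_tokens=N    -> manual thinking with a fixed reasoning budget.
    """
    if budget_tokens is None:
        thinking: dict[str, Any] = {"type": "adaptive"}
    else:
        # The manual budget has a 1,024-token minimum and must stay below max_tokens.
        if not 1024 <= budget_tokens < max_tokens:
            raise ValueError("budget_tokens must be >= 1024 and < max_tokens")
        thinking = {"type": "enabled", "budget_tokens": budget_tokens}
    return {
        "model": "claude-opus-4-6",
        "max_tokens": max_tokens,
        "thinking": thinking,
        "messages": [{"role": "user", "content": prompt}],
    }


adaptive = build_request("What breaks first if our cache cluster loses a node?")
manual = build_request("Rename this module.", budget_tokens=2000)
# Either dict can be sent with client.messages.create(**adaptive)
```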

What I care about: it caught edge cases I didn't explicitly ask about. When designing the file deduplication system, it flagged that partial file corruption would break hash-based matching — then proposed chunked hashing with error correction. That's the kind of defensive thinking I need from a design partner.
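Here is the chunked-hashing idea in concrete form: a minimal sketch that uses fixed-size chunks and SHA-256 from Python's `hashlib`. It shows only why chunking helps, which is that a corrupted byte invalidates one chunk's hash instead of the whole file's. The error-correction part the model proposed is not shown. The 4 KiB chunk size and the function names are arbitrary choices for illustration.

```python
import hashlib

CHUNK_SIZE = 4096  # hypothetical chunk size; real systems tune this


def chunk_hashes(data: bytes, chunk_size: int = CHUNK_SIZE) -> list[str]:
    """Return the SHA-256 hex digest of each fixed-size chunk of data."""
    return [
        hashlib.sha256(data[i:i + chunk_size]).hexdigest()
        for i in range(0, len(data), chunk_size)
    ]


def matching_chunks(a: bytes, b: bytes, chunk_size: int = CHUNK_SIZE) -> int:
    """Count chunks at the same position whose hashes are identical."""
    return sum(x == y for x, y in zip(chunk_hashes(a, chunk_size),
                                      chunk_hashes(b, chunk_size)))


original = bytes(range(256)) * 64      # 16 KiB -> 4 chunks
corrupted = bytearray(original)
corrupted[5000] ^= 0xFF                # flip one byte inside the second chunk

whole_file_match = (hashlib.sha256(original).digest()
                    == hashlib.sha256(bytes(corrupted)).digest())
print(whole_file_match)                             # False
print(matching_chunks(original, bytes(corrupted)))  # 3
```

Fixed-size chunks are the simplest option. An insertion shifts every later chunk boundary, though, which is why many dedup systems use content-defined chunking instead.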

Prompting for design thinking

Here's where most people waste Opus 4.6's capabilities: treating it like a code generator instead of a reasoning engine.

I stopped asking "build me X" and started asking "what breaks if we do X this way?" The difference in output quality was immediate.

Asking for assumptions

When I'm designing systems, I want to know what I'm implicitly assuming. Here's a prompt structure that worked:

```python
# Example prompt for architectural assumption surfacing
prompt = """
I'm designing a real-time notification system that needs to handle:
- 100K concurrent WebSocket connections
- 5M events/minute peak load
- 99.95% delivery guarantee
- <200ms end-to-end latency

What assumptions am I making that could break this design?
List them by risk level and explain the failure mode for each.
"""
```

Opus 4.6's response included things I hadn't considered:

- Critical assumption: Network partitions won't last longer than connection timeout

- High risk: Message ordering guarantees under failure scenarios

- Medium risk: Client reconnection storm after mass disconnect
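The reconnection-storm risk has a well-known client-side mitigation: exponential backoff with random jitter. Clients that dropped at the same moment then don't all retry at the same moment. This is a minimal sketch of the "full jitter" variant. The base delay, the cap, and the function name are illustrative assumptions, not part of the model's answer.

```python
import random
from typing import Optional


def backoff_delay(attempt: int, base: float = 0.5, cap: float = 30.0,
                  rng: Optional[random.Random] = None) -> float:
    """Full-jitter backoff: a uniform delay in [0, min(cap, base * 2**attempt)] seconds."""
    if rng is None:
        rng = random.Random()
    ceiling = min(cap, base * (2 ** attempt))
    return rng.uniform(0, ceiling)


# Simulate 10,000 clients that dropped together, all on retry attempt 3.
rng = random.Random(42)
delays = [backoff_delay(attempt=3, rng=rng) for _ in range(10_000)]

# Ceiling is min(30, 0.5 * 8) = 4 s; bucket reconnects by whole second.
buckets = [0] * 4
for d in delays:
    buckets[min(int(d), 3)] += 1
print(buckets)  # each bucket holds roughly a quarter of the 10,000 clients
```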

This is useful because it's organized by what fails rather than just listing technical details. Anthropic's pitch for adaptive thinking is that matching the amount of reasoning to the problem improves both efficiency and accuracy on complex tasks. An open-ended risk question like this one is where I'd expect that to show.

Surfacing trade-offs

The model's strength isn't picking "the right architecture" (there rarely is one). It's systematically walking through what you gain and lose with each approach.

I asked it to compare three database strategies for a high-write analytics pipeline:

```python
# Comparative architecture prompt
prompt = """
Compare these three approaches for storing 50M events/day:
1. Append-only with periodic compaction (ClickHouse-style)
2. Time-series DB with downsampling (Prometheus-style)
3. Event streaming with materialized views (Kafka + Postgres)

For each: cost structure, failure modes, operational complexity,
and what scenarios each one breaks down in.
"""
```

What surprised me: instead of just describing each pattern, it organized the comparison around decision dimensions and produced a table showing which approach wins under which constraints.

This is how I actually make decisions — by understanding what I'm trading. The model nailed that.

Reviewing long reasoning outputs

Opus 4.6 can now output up to 128K tokens (doubled from 64K). That's enough for a complete system design document with architecture diagrams described in text, API contracts, deployment plans, and failure analysis.

The problem: long outputs get overwhelming fast. Here's how I structured review sessions to stay productive.

When the model generated a 15-page migration plan for moving a monolith to microservices, I didn't read it top-to-bottom. I asked follow-up questions that forced it to defend specific decisions:

```python
# Example review prompt
followup = """
In your migration plan, you proposed moving the authentication service first.

Walk me through:
1. What breaks if auth goes down during migration?
2. How do you handle rollback with users mid-session?
3. What's your monitoring strategy for detecting authentication drift between old/new systems?
"""
```

The 128K output limit meant it could include the full reasoning chain without truncation. I could see why it chose the auth-first approach (stateless service, easy to dual-run) and what mitigations it planned for edge cases.
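The "easy to dual-run" argument also answers the drift-monitoring question. Send each request to both auth implementations, serve the old result, and count disagreements. This is a minimal sketch with stand-in validators. `old_auth`, `new_auth`, and `DriftMonitor` are hypothetical names, not taken from the migration plan.

```python
from dataclasses import dataclass, field
from typing import Callable

Validator = Callable[[str], bool]  # token -> is the token valid?


@dataclass
class DriftMonitor:
    old: Validator
    new: Validator
    checked: int = 0
    mismatches: list[str] = field(default_factory=list)

    def authenticate(self, token: str) -> bool:
        """Serve the old system's answer; record tokens where the new one disagrees."""
        old_result = self.old(token)
        new_result = self.new(token)
        self.checked += 1
        if old_result != new_result:
            self.mismatches.append(token)
        return old_result

    def drift_rate(self) -> float:
        return len(self.mismatches) / self.checked if self.checked else 0.0


# Stand-ins: the new system wrongly rejects tokens with a legacy prefix.
def old_auth(token: str) -> bool:
    return token.startswith(("v1-", "v2-"))


def new_auth(token: str) -> bool:
    return token.startswith("v2-")


monitor = DriftMonitor(old_auth, new_auth)
for t in ["v2-a", "v2-b", "v1-c", "bad"]:
    monitor.authenticate(t)
print(monitor.mismatches, monitor.drift_rate())  # ['v1-c'] 0.25
```

In a real dual-run, call the new system asynchronously and swallow its errors. That way a bug in the new path can never affect live logins.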

For code-heavy architectural decisions, I used the new structured outputs capability to enforce schema conformance:

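```python
import json

import anthropic

client = anthropic.Anthropic()  # reads ANTHROPIC_API_KEY from the environment

# Strict structured outputs expect "additionalProperties": false on objects
# and an "items" schema on arrays.
cache_architecture_schema = {
    "type": "object",
    "properties": {
        "topology": {"type": "string"},
        "consistency_model": {"type": "string"},
        "failure_scenarios": {"type": "array", "items": {"type": "string"}},
        # Free text here; a nested object with its own properties also works.
        "capacity_planning": {"type": "string"},
    },
    "required": ["topology", "consistency_model", "failure_scenarios"],
    "additionalProperties": False,
}

response = client.messages.create(
    model="claude-opus-4-6",
    max_tokens=16000,
    thinking={"type": "adaptive"},
    messages=[{
        "role": "user",
        "content": "Design a distributed cache architecture. Return JSON with: "
                   "topology, consistency_model, failure_scenarios, capacity_planning",
    }],
    # `response_format` is OpenAI's parameter name; the Anthropic Messages API
    # takes the schema via output_config. Earlier SDK versions used a beta
    # `output_format` parameter instead, so check the docs for your version.
    output_config={"format": {"type": "json_schema", "schema": cache_architecture_schema}},
)

# Thinking blocks come first; the JSON is in the text block.
text = next(block.text for block in response.content if block.type == "text")
design = json.loads(text)
```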

This guaranteed parseable output I could feed directly into documentation generators or architecture-as-code tools.
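One caveat: if a response stops at `max_tokens`, the JSON can be cut off regardless of the schema. A small defensive check before handing the result to other tools helps. `parse_design` is a hypothetical helper. It takes the response's `stop_reason` and the text block's `.text` as plain arguments so it can be tested without an API call.

```python
import json
from typing import Any

REQUIRED_KEYS = ("topology", "consistency_model", "failure_scenarios")


def parse_design(stop_reason: str, text: str) -> dict[str, Any]:
    """Parse a structured design response, failing loudly on truncation or gaps."""
    if stop_reason == "max_tokens":
        raise ValueError("response was truncated at max_tokens; raise the limit")
    design = json.loads(text)
    missing = [k for k in REQUIRED_KEYS if k not in design]
    if missing:
        raise ValueError(f"design is missing required keys: {missing}")
    if not isinstance(design["failure_scenarios"], list):
        raise ValueError("failure_scenarios must be a list")
    return design


ok = parse_design(
    "end_turn",
    '{"topology": "3 shards x 2 replicas", "consistency_model": "read-your-writes",'
    ' "failure_scenarios": ["replica lag", "hot key"]}',
)
print(ok["topology"])  # 3 shards x 2 replicas
```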

Turning analysis into decisions

The gap I usually see with AI models: great analysis, terrible decision-making. They list pros and cons but don't commit to a recommendation with clear reasoning.

Opus 4.6 improved here. When I asked it to recommend a deployment strategy for a high-stakes production rollout, it didn't just describe options; it made a call and explained the decision criteria.

I tested this with a real scenario: migrating 200TB of user data between cloud providers with zero downtime allowed.

```python
# Decision-forcing prompt
prompt = """
Given:
- 200TB PostgreSQL database
- Zero downtime requirement
- 3-month migration window
- $50K budget

Make a recommendation for the migration approach. Explain:
1. Your chosen strategy
2. Why you rejected alternatives
3. What could force you to change this decision mid-flight
4. Your contingency plan
"""
```

The response structure was exactly what I needed:

Recommendation: Dual-write with lag-based cutover

Why:

- Replication-based approaches (logical replication, CDC) risk data corruption at scale

- Snapshot-and-replay creates unacceptable catch-up time (est. 4-6 weeks lag)

- Dual-write lets you validate in production before cutover

Rejected alternatives:

- Blue-green deployment: Can't afford 2x infrastructure cost

- Read replica promotion: PostgreSQL cross-cloud replication lag too unpredictable

Decision trigger to abort:

- If dual-write introduces >5ms P99 latency, fall back to staged migration

- If lag exceeds 2 hours during load testing, switch to CDC-based approach
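Decision triggers like these are most useful when a script checks them rather than someone remembering to. This minimal sketch evaluates the two thresholds from the response. It assumes you already collect added-latency samples in milliseconds and a replication-lag reading in seconds, and `check_abort_triggers` is a hypothetical name.

```python
import math


def p99(samples: list[float]) -> float:
    """99th percentile using the nearest-rank method."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = math.ceil(len(ordered) * 99 / 100)  # 1-based nearest rank
    return ordered[rank - 1]


def check_abort_triggers(added_latency_ms: list[float], lag_seconds: float) -> list[str]:
    """Return the triggers that fired, using the thresholds from the plan."""
    fired = []
    if p99(added_latency_ms) > 5.0:
        fired.append("dual-write P99 > 5ms: fall back to staged migration")
    if lag_seconds > 2 * 60 * 60:
        fired.append("lag > 2h: switch to CDC-based approach")
    return fired


latencies = [1.0] * 98 + [6.0, 7.0]  # 100 samples, two slow outliers
print(p99(latencies))  # 6.0
print(check_abort_triggers(latencies, lag_seconds=600))
```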

This is decision-ready output. I could take this to a planning meeting and defend it.

At Macaron, we handle exactly this kind of multi-session design work — your architectural conversations stay in memory, so when you pick up a migration plan three days later, the system already knows your constraints, rejected alternatives, and decision triggers. Test it with one real architecture decision and see if context persistence changes how you work.

The key insight: I never told the model how hard to think, yet it appeared to treat this as a high-stakes decision and spent more of its reasoning on edge-case analysis. That's the point of the dynamic approach. It aims to reduce under-thinking (which causes missed edge cases) on hard problems and over-thinking (which wastes compute) on simple ones.

One more thing I noticed: the model maintains architectural consistency across long conversations. When I asked follow-up questions about monitoring strategy, it referenced decisions from earlier in the conversation without me restating context. With the 1M-token context window (in beta), you can load a large codebase and keep that coherence across hours-long design sessions.
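Within one conversation, that continuity comes from resending the full message history on every turn, because the Messages API itself is stateless. This minimal sketch shows a session that accumulates turns. `DesignSession` is hypothetical, and `send` stands in for a function that calls `client.messages.create(..., messages=history)` and returns the reply text.

```python
from typing import Callable

Message = dict[str, str]


class DesignSession:
    """Keep the whole design conversation so follow-ups can build on earlier decisions."""

    def __init__(self, send: Callable[[list[Message]], str]):
        self.send = send
        self.history: list[Message] = []

    def ask(self, question: str) -> str:
        self.history.append({"role": "user", "content": question})
        reply = self.send(self.history)  # the model sees every prior turn
        self.history.append({"role": "assistant", "content": reply})
        return reply


# Offline stand-in for the API call: reports how many messages it received.
def fake_send(messages: list[Message]) -> str:
    return f"seen {len(messages)} messages"


session = DesignSession(fake_send)
session.ask("Recommend a migration approach for our 200TB database.")
print(session.ask("Given that recommendation, what should we monitor?"))  # seen 3 messages
```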

That's how system design actually works — iterative, with decisions building on previous decisions. Opus 4.6 finally handles that workflow without context loss.